Interpreting Deep Learning Model Using Rule-based Method


Oct 15, 2020

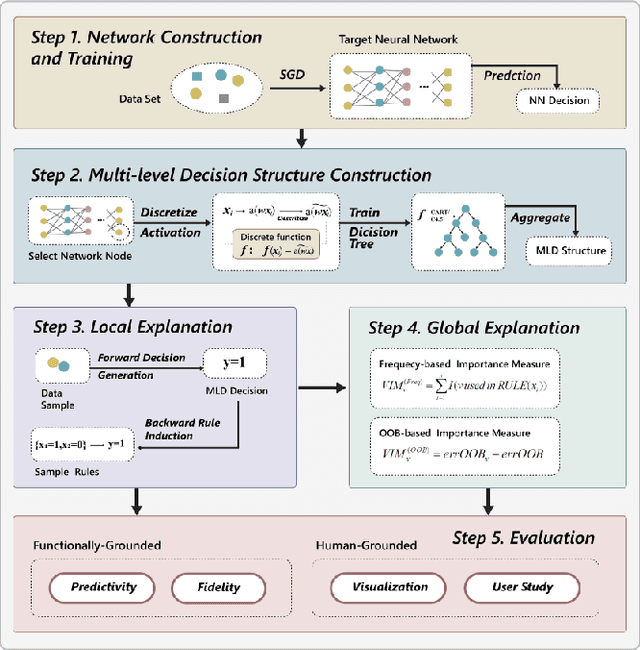

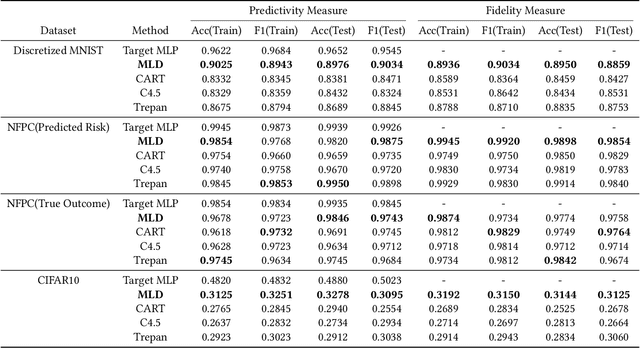

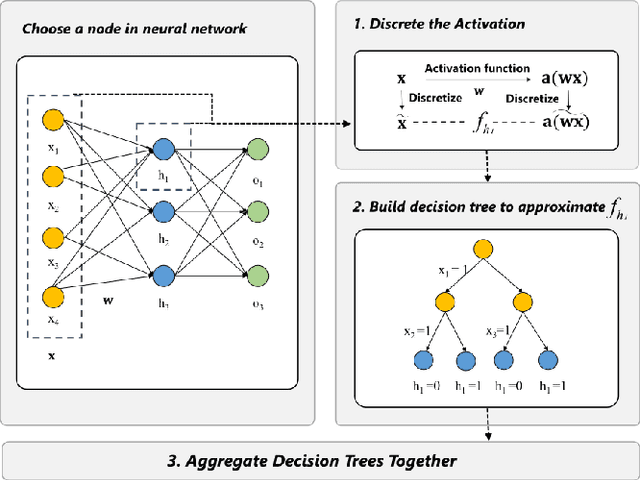

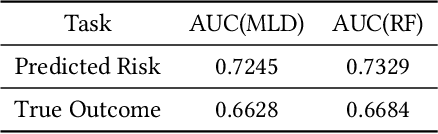

Deep learning models are favored in many research and industry areas and have reached accuracy approaching or even surpassing human level. However, researchers have long regarded them as black-box models because of their complicated nonlinear behavior. In this paper, we propose a multi-level decision framework that provides a comprehensive interpretation of deep neural network models. In this framework, a multi-level decision structure (MLD) is first constructed by fitting a decision tree for each neuron and aggregating the trees; the MLD approximates the target neural network with high efficiency and high fidelity. For local explanation of individual samples, two algorithms are proposed based on the MLD structure: a forward decision generation algorithm that provides sample decisions, and a backward rule induction algorithm that recursively extracts the rule mapping for a sample. For global explanation, frequency-based and out-of-bag based methods are proposed to extract the features that are important to the neural network's decisions. Experiments on the MNIST and National Free Pre-Pregnancy Check-up (NFPC) datasets demonstrate the effectiveness and interpretability of the MLD framework. Both functionally-grounded and human-grounded evaluation methods are used to ensure credibility.
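The per-neuron fitting idea can be illustrated with a small sketch. This is not the paper's MLD algorithm: the toy network, its hand-picked weights, the one-split trees (stumps), and the lookup-table aggregation are all assumptions chosen to keep the example self-contained. It fits one stump per hidden neuron to predict whether that neuron is active, maps each pattern of neuron decisions to the network's majority output, and measures fidelity as agreement with the network on held-out points.

```python
import random
from collections import Counter, defaultdict

# Hypothetical toy network: 2 inputs -> 2 hidden ReLU neurons -> 1 binary output.
HIDDEN = [([1.0, 0.0], -0.5), ([0.0, 1.0], -0.5)]  # (weights, bias) per neuron
OUT_WEIGHTS = [1.0, 1.0]

def hidden_activations(x):
    return [max(0.0, sum(w * xi for w, xi in zip(ws, x)) + b) for ws, b in HIDDEN]

def network_predict(x):
    h = hidden_activations(x)
    return int(sum(w * hi for w, hi in zip(OUT_WEIGHTS, h)) > 0)

def fit_stump(X, y):
    """Fit a one-split tree: return (feature, threshold, label_if_greater)."""
    best = (0, 0.0, 1, -1)  # feature, threshold, label, number correct
    for f in range(len(X[0])):
        values = sorted(set(x[f] for x in X))
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2  # candidate split between neighbouring values
            for label in (0, 1):
                correct = sum(
                    (label if x[f] > t else 1 - label) == yi
                    for x, yi in zip(X, y)
                )
                if correct > best[3]:
                    best = (f, t, label, correct)
    return best[:3]

def stump_predict(stump, x):
    f, t, label = stump
    return label if x[f] > t else 1 - label

rng = random.Random(0)
train = [[rng.random(), rng.random()] for _ in range(200)]
held_out = [[rng.random(), rng.random()] for _ in range(200)]

# Level 1: one stump per hidden neuron, trained to predict "neuron is active".
stumps = []
for j in range(len(HIDDEN)):
    active = [int(hidden_activations(x)[j] > 0) for x in train]
    stumps.append(fit_stump(train, active))

# Level 2: map each pattern of neuron decisions to the network's majority output.
votes = defaultdict(Counter)
for x in train:
    bits = tuple(stump_predict(s, x) for s in stumps)
    votes[bits][network_predict(x)] += 1
table = {bits: counts.most_common(1)[0][0] for bits, counts in votes.items()}

def surrogate_predict(x):
    bits = tuple(stump_predict(s, x) for s in stumps)
    return table.get(bits, 0)  # unseen pattern: fall back to class 0

# Fidelity: fraction of held-out points where surrogate and network agree.
fidelity = sum(surrogate_predict(x) == network_predict(x) for x in held_out) / len(held_out)
print("stumps:", stumps)
print("fidelity:", fidelity)
```

Each fitted stump reads directly as a rule of the form "feature f greater than t", and the table reads as rules over neuron decisions, which is the kind of readable structure a rule-based surrogate aims for. A real network would need deeper trees and a less naive aggregation step.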
