Internet of Things Applications: Animal Monitoring with Unmanned Aerial Vehicle

Paper and Code

Oct 20, 2016

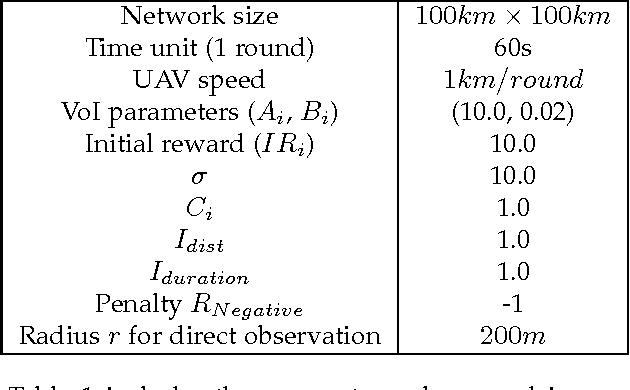

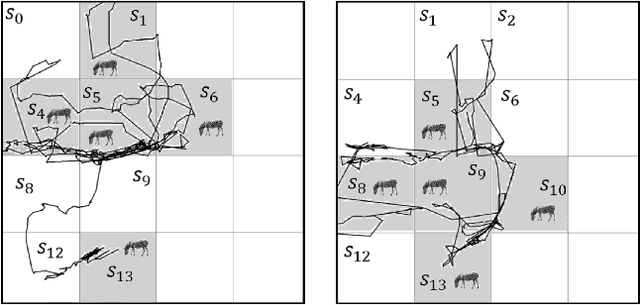

In animal monitoring applications, animal detection and movement prediction are the two major tasks. While a variety of animal monitoring strategies exist, most of them rely on devices mounted on the animals. However, in the real world, it is difficult to find these animals and attach such devices. In this paper, we propose an animal monitoring application that uses wireless sensor networks (WSNs) and an unmanned aerial vehicle (UAV). The objective of the application is to detect the locations of endangered species in large-scale wildlife areas and to monitor the movement of animals without any attached devices. In this application, sensors deployed throughout the observation area are responsible for gathering animal information. The UAV flies above the observation area and collects the information from the sensors. To collect the information efficiently, we propose a path planning approach for the UAV based on a Markov decision process (MDP) model. The UAV receives a certain amount of reward from an area if animals are detected at that location. We solve the MDP using Q-learning so that the UAV prefers areas where animals have been detected before. Meanwhile, the UAV also explores other areas to cover the entire network and detect changes in the animal positions. We first define the mathematical model underlying the animal monitoring problem in terms of the value of information (VoI) and rewards. We then propose a network model consisting of clusters of sensor nodes and a single UAV that acts as a mobile sink and visits the clusters. Finally, an MDP-based path planning approach is designed to maximize the VoI while reducing message delays. The effectiveness of the proposed approach is evaluated using two real-world movement datasets of zebras and leopards. Simulation results show that our approach outperforms greedy and random heuristics as well as path planning based on the traveling salesman problem.
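The abstract does not give the paper's exact MDP formulation, so the following is only a minimal sketch of tabular Q-learning with epsilon-greedy exploration applied to a toy version of the problem. It rests on several assumptions that are not taken from the paper: the state is the cluster the UAV currently hovers over, an action is the next cluster to visit, the reward is a fixed per-cluster detection score (a hypothetical stand-in for the VoI), revisiting the same cluster immediately yields no reward, and travel time and message delay are ignored. The names `q_learning` and `greedy_policy` are illustrative.

```python
import random


def q_learning(rewards, episodes=2000, steps=20,
               alpha=0.5, gamma=0.5, epsilon=0.3, seed=0):
    """Tabular Q-learning on a toy cluster-visiting MDP (illustrative only).

    rewards[c] is an assumed detection score for cluster c.
    State = current cluster, action = next cluster to visit.
    """
    rng = random.Random(seed)
    n = len(rewards)
    q = [[0.0] * n for _ in range(n)]  # q[state][action]
    for _ in range(episodes):
        state = rng.randrange(n)  # each episode starts at a random cluster
        for _ in range(steps):
            if rng.random() < epsilon:
                # Explore: visit a uniformly random cluster.
                action = rng.randrange(n)
            else:
                # Exploit: visit the cluster with the highest learned value.
                action = max(range(n), key=lambda a: q[state][a])
            # Assumption: hovering over the same cluster gives no new reward.
            reward = rewards[action] if action != state else 0.0
            # Standard Q-learning update; the next state is the chosen cluster.
            target = reward + gamma * max(q[action])
            q[state][action] += alpha * (target - q[state][action])
            state = action
    return q


def greedy_policy(q):
    """For each cluster, return the next cluster with the highest Q-value."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in q]


# Toy scenario: animals were detected only near cluster 3.
q_table = q_learning(rewards=[0.0, 0.0, 0.0, 10.0])
print(greedy_policy(q_table))
```

In this toy setting, the learned policy sends the UAV from every cluster without detections toward cluster 3, while the epsilon-greedy step keeps it occasionally visiting the other clusters, which mirrors the trade-off between exploiting past detections and covering the network that the abstract describes.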
