Information-Theoretic Measures on Lattices for High-Order Interactions

Aug 14, 2024

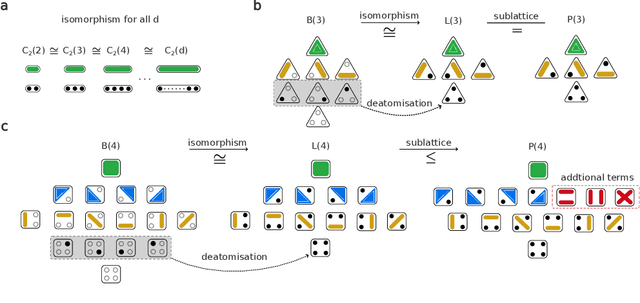

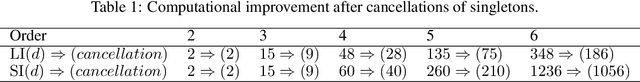

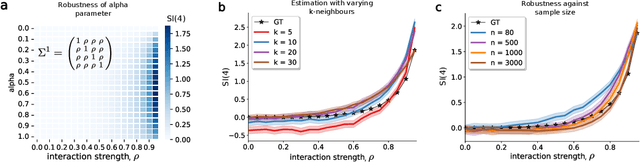

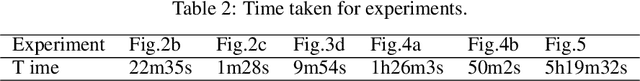

Traditional models that rely solely on pairwise associations often fail to capture the complex statistical structure of multivariate data. Yet existing methods for identifying information shared among groups of $d>3$ variables are often intractable, asymmetric around a target variable, or unable to consider all factorisations of the joint probability distribution. Here, we present a framework that systematically derives high-order measures using lattice and operator function pairs, whereby the lattice captures the algebraic relational structure of the variables and the operator function computes measures over the lattice. We show that many existing information-theoretic high-order measures can be derived by using divergences as operator functions on sublattices of the partition lattice; because they use only sublattices, these measures cannot accurately quantify all interactions for $d>3$. Similarly, we show that using the KL divergence as the operator function also leads to unwanted cancellation of interactions for $d>3$. To characterise all interactions among $d$ variables, we introduce the Streitberg information, defined on the full partition lattice using generalisations of the KL divergence as operator functions. We validate our results numerically on synthetic data, and illustrate the use of the Streitberg information through applications to stock market returns and neural electrophysiology data.
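To make the lattice and operator function pairing concrete, the following is a minimal sketch, not the paper's implementation. It assumes discrete variables given as a joint probability table, enumerates every element of the partition lattice (each partition corresponds to one factorisation of the joint distribution), and applies the plain KL divergence as the operator. The function names `set_partitions`, `factorised`, and `kl` are hypothetical, and the sketch does not compute the Streitberg information itself, whose definition is not given in this abstract.

```python
import itertools
import numpy as np

def set_partitions(items):
    """Yield every partition of `items` as a list of blocks (lists)."""
    items = list(items)
    if not items:
        yield []
        return
    first, rest = items[0], items[1:]
    for partial in set_partitions(rest):
        # Put `first` into each existing block in turn...
        for i in range(len(partial)):
            yield partial[:i] + [[first] + partial[i]] + partial[i + 1:]
        # ...or into a new block of its own.
        yield [[first]] + partial

def factorised(p, partition):
    """Product of the block marginals of the joint table `p` for one partition."""
    q = np.ones_like(p)
    for block in partition:
        drop = tuple(ax for ax in range(p.ndim) if ax not in block)
        # keepdims=True lets each block marginal broadcast back to p.shape
        q = q * p.sum(axis=drop, keepdims=True)
    return q

def kl(p, q):
    """KL divergence D(p || q) in nats, summing only where p > 0."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

# Illustrative (assumed) example: X and Y are fair coins and Z = X XOR Y,
# so every pair of variables is independent but the triple is not.
p = np.zeros((2, 2, 2))
for x, y in itertools.product((0, 1), repeat=2):
    p[x, y, x ^ y] = 0.25

divergences = [(part, kl(p, factorised(p, part)))
               for part in set_partitions(range(3))]
for part, value in divergences:
    print(part, round(value, 4))
```

For $d=3$ the lattice has 5 partitions, and for $d=4$ it has 15 (the Bell numbers), which is why exhaustive treatment becomes costly as $d$ grows. In the XOR example no pair of variables shows any dependence, yet every non-trivial factorisation diverges from the joint distribution, the kind of high-order structure that pairwise models miss.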