InfoMax-GAN: Improved Adversarial Image Generation via Information Maximization and Contrastive Learning


Jul 09, 2020

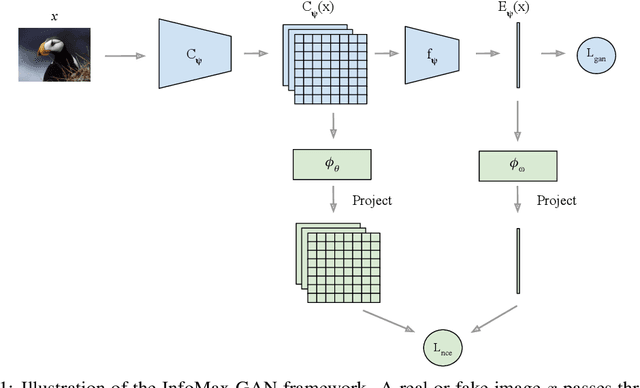

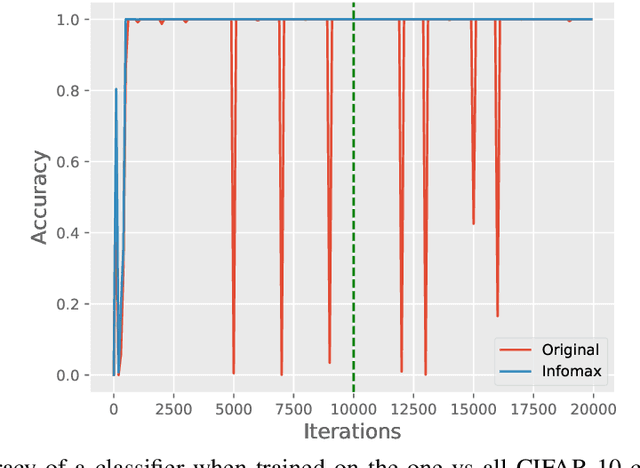

While Generative Adversarial Networks (GANs) are fundamental to many generative modelling applications, they suffer from numerous issues. In this work, we propose a principled framework to simultaneously address two fundamental issues in GANs: catastrophic forgetting of the discriminator and mode collapse of the generator. We achieve this by applying contrastive learning and mutual information maximization to GANs, and we perform extensive analyses to understand the sources of improvement. Our approach significantly stabilises GAN training and improves GAN performance for image synthesis across five datasets, compared with state-of-the-art works under the same training and evaluation conditions. Our approach is simple to implement and practical: it involves only one objective, is computationally inexpensive, and is robust across a wide range of hyperparameters without any tuning. For reproducibility, our code is available at https://github.com/kwotsin/mimicry.
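To make the phrase "contrastive learning and mutual information maximization" more concrete, the sketch below shows one widely used contrastive estimator, the InfoNCE loss, in plain Python. It is an illustration only and is not taken from the authors' code (which lives in the repository linked above). The function names `info_nce_loss` and `mi_lower_bound`, the dot-product scoring, and the `temperature` parameter are all assumptions made for this example. Each anchor vector is scored against every candidate, and the loss is low when the anchor scores highest against its own paired positive.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors (illustrative critic score)."""
    return sum(a * b for a, b in zip(u, v))


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def info_nce_loss(anchors, positives, temperature=1.0):
    """Mean InfoNCE loss over N (anchor, positive) pairs.

    positives[i] is the positive for anchors[i]; every other positives[j]
    acts as a negative for anchors[i]. Hypothetical helper, not the paper's API.
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        scores = [dot(anchors[i], positives[j]) / temperature for j in range(n)]
        # Cross-entropy of picking the true pair i among all N candidates.
        total += log_sum_exp(scores) - scores[i]
    return total / n


def mi_lower_bound(anchors, positives, temperature=1.0):
    """InfoNCE bound on mutual information: log(N) minus the loss."""
    return math.log(len(anchors)) - info_nce_loss(anchors, positives, temperature)


if __name__ == "__main__":
    paired = [[10.0, 0.0], [0.0, 10.0]]
    print(info_nce_loss(paired, paired))
    print(mi_lower_bound(paired, paired))
```

Minimizing this loss raises a lower bound on the mutual information between the two paired views, and the bound can never exceed log(N) for a batch of N pairs. In a GAN setting the pairs would be produced by learned networks rather than fixed vectors; which quantities are paired is a design choice of the specific method and is not specified by this sketch.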
