Inductive Kernel Low-rank Decomposition with Priors: A Generalized Nyström Method


Jun 18, 2012

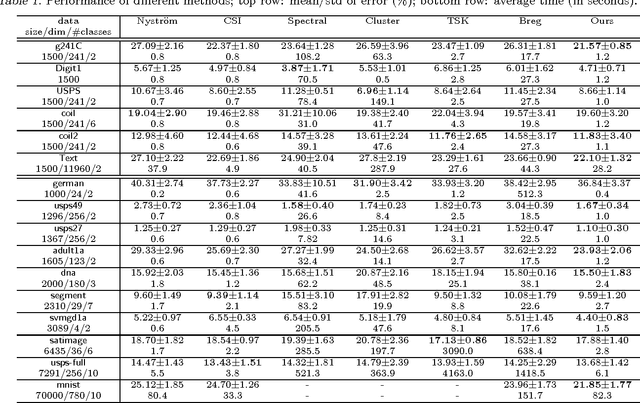

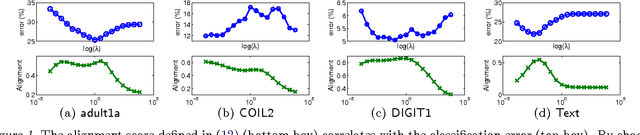

Low-rank matrix decomposition has recently gained great popularity for scaling up kernel methods to large amounts of data. However, several limitations can prevent existing approaches from working effectively in certain domains. For example, many are intrinsically unsupervised and do not incorporate side information (e.g., class labels) to produce task-specific decompositions; they also typically work "transductively", i.e., the factorization does not generalize to new samples, so the complete factorization must be recomputed when new samples become available. To solve these problems, in this paper we propose an "inductive"-flavored method for low-rank kernel decomposition with priors. We achieve this by generalizing the Nyström method in a novel way. On the one hand, our approach employs a highly flexible, nonparametric structure that allows us to generalize the low-rank factors to arbitrary new samples; on the other hand, it has linear time and space complexities, which can make it orders of magnitude faster than existing approaches and highly efficient for learning a low-rank kernel decomposition. Empirical results demonstrate the efficacy and efficiency of the proposed method.
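For orientation, the standard Nyström method that the abstract builds on can be sketched as follows. This is a minimal illustration of the classical, unsupervised approximation only, not the generalized method with priors proposed in the paper. The RBF kernel, the uniform landmark sampling, and all function names (`rbf_kernel`, `nystrom_fit`, `nystrom_features`) are assumptions chosen for the example.

```python
import numpy as np

def rbf_kernel(X, Y, gamma=0.5):
    # Pairwise RBF kernel values between rows of X and rows of Y.
    sq = (X ** 2).sum(1)[:, None] + (Y ** 2).sum(1)[None, :] - 2.0 * X @ Y.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def nystrom_fit(X, m, gamma=0.5, seed=0):
    # Pick m landmark points uniformly at random (an assumption of this sketch).
    rng = np.random.default_rng(seed)
    idx = rng.choice(X.shape[0], size=m, replace=False)
    landmarks = X[idx]
    # Eigendecompose the small m x m landmark kernel W.
    W = rbf_kernel(landmarks, landmarks, gamma)
    evals, evecs = np.linalg.eigh(W)
    keep = evals > 1e-10 * evals.max()  # drop numerically null directions
    # Columns of U scaled by 1/sqrt(lambda), so that
    # W_inv_sqrt @ W_inv_sqrt.T is the pseudo-inverse of W.
    W_inv_sqrt = evecs[:, keep] / np.sqrt(evals[keep])
    return idx, landmarks, W_inv_sqrt

def nystrom_features(X_any, landmarks, W_inv_sqrt, gamma=0.5):
    # Low-rank factor G with G @ G.T approximating the kernel matrix.
    # Works for training samples and for previously unseen samples alike.
    return rbf_kernel(X_any, landmarks, gamma) @ W_inv_sqrt

# Toy usage on synthetic data (hypothetical sizes).
rng = np.random.default_rng(1)
X_train = rng.normal(size=(200, 5))
X_new = rng.normal(size=(10, 5))

idx, landmarks, W_inv_sqrt = nystrom_fit(X_train, m=50)
G_train = nystrom_features(X_train, landmarks, W_inv_sqrt)
G_new = nystrom_features(X_new, landmarks, W_inv_sqrt)
K_approx = G_train @ G_train.T  # formed here only to inspect the approximation
```

With `m` landmarks and `n` samples, computing the factor costs time linear in `n`, and the factor can be evaluated on new samples without refactorizing. The sketch uses no label information, which is the gap the abstract says the proposed method addresses.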
