Improving EEG based Continuous Speech Recognition


Dec 24, 2019

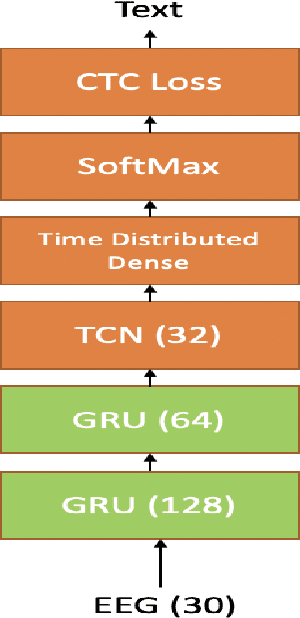

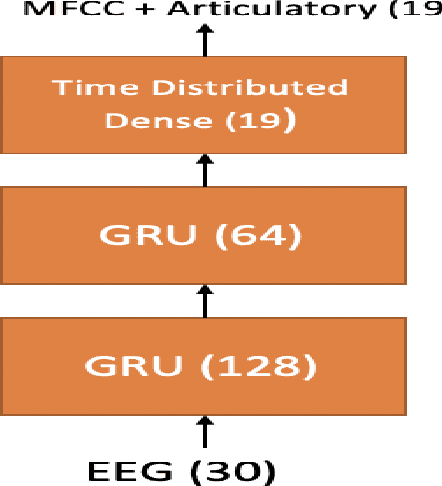

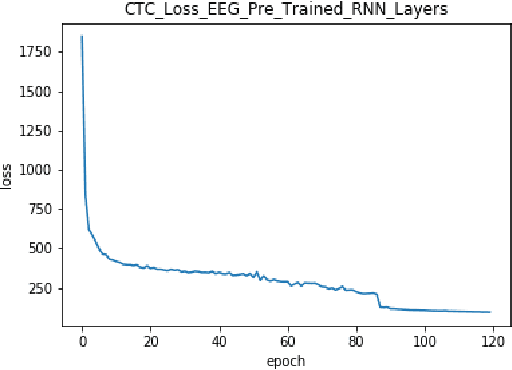

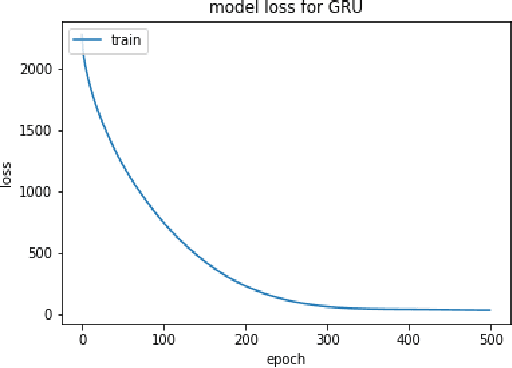

In this paper we introduce several techniques to improve the performance of continuous speech recognition (CSR) systems based on electroencephalography (EEG) features. We implement a connectionist temporal classification (CTC) based automatic speech recognition (ASR) system to perform recognition. We initialize the weights of the recurrent layers in the encoder of the CTC model with more meaningful weights rather than random ones, and we use an external language model to improve the beam search at decoding time. Finally, we study the problem of predicting articulatory features from EEG features.
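As a rough illustration of how an external language model can influence CTC decoding, the sketch below collapses a frame-level CTC path into a label sequence and then picks among candidate hypotheses using a weighted sum of CTC and language-model log-probabilities. This is n-best rescoring, a simplification of the beam-search integration the abstract describes, and not the authors' implementation. The names `ctc_collapse`, `rescore`, `lm_logprob`, and `lm_weight`, the blank index, and the toy scores are all assumptions made for the example.

```python
from itertools import groupby

BLANK = 0  # assumed index of the CTC blank symbol


def ctc_collapse(path, blank=BLANK):
    """Standard CTC collapse: merge consecutive repeats, then drop blanks."""
    return [symbol for symbol, _ in groupby(path) if symbol != blank]


def rescore(hypotheses, lm_logprob, lm_weight=0.5):
    """Return the token sequence with the best combined score.

    hypotheses: list of (tokens, ctc_log_prob) pairs, e.g. from a beam search.
    lm_logprob: hypothetical callable mapping tokens to an LM log-probability.
    lm_weight:  assumed interpolation weight for the language model.
    """
    best = max(hypotheses, key=lambda h: h[1] + lm_weight * lm_logprob(h[0]))
    return best[0]


# Toy usage: the language model prefers the second hypothesis.
hyps = [((1, 2), -1.0), ((1, 3), -1.2)]
toy_lm = lambda tokens: -0.1 if tokens == (1, 3) else -2.0
best_tokens = rescore(hyps, toy_lm, lm_weight=0.5)
```

With `lm_weight=0` the choice falls back to the CTC score alone, which makes the language model's contribution easy to isolate.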

* In preparation for submission to EUSIPCO 2020. arXiv admin note: text overlap with arXiv:1911.04261, arXiv:1906.08871
