Improving Deep Models of Person Re-identification for Cross-Dataset Usage


Jul 23, 2018

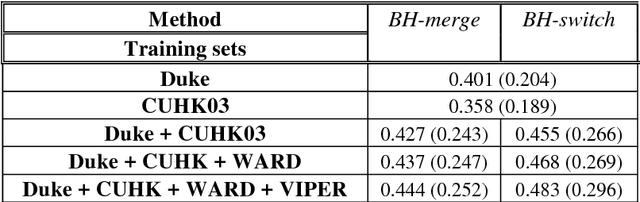

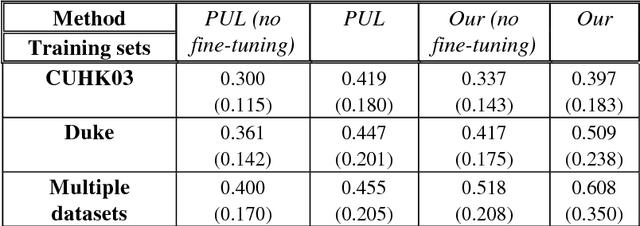

Person re-identification (Re-ID) is the task of matching people across cameras with non-overlapping views, and it has important applications in visual surveillance. Like other computer vision tasks, it has benefited greatly from deep learning methods. However, existing deep learning solutions are usually trained and tested on samples taken from the same dataset, while in practice one needs to deploy Re-ID systems for new sets of cameras for which labeled data is unavailable. Here, we mitigate this problem for one state-of-the-art model, namely a metric embedding trained with the triplet loss function, although our results can be extended to other models. Our contribution consists of a method for training the model on multiple datasets and a method for its online, practically unsupervised fine-tuning. These methods yield up to a 19.1% improvement in Rank-1 score in the cross-dataset evaluation.
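To make the two quantities named in the abstract concrete, here is a minimal sketch of a margin-based triplet loss and a Rank-1 score computed over embedding vectors. It is an illustration under assumptions, not the authors' implementation: the function names, the Euclidean distance, and the margin value of 0.2 are all hypothetical choices, and the Rank-1 routine omits protocol details that Re-ID benchmarks often apply (such as excluding gallery images from the same camera as the query).

```python
import math


def triplet_loss(anchors, positives, negatives, margin=0.2):
    """Mean hinge-style triplet loss over a batch of embedding triplets.

    Each triplet holds an anchor, a positive (same identity) and a
    negative (different identity). The margin of 0.2 is an assumed value.
    """
    losses = []
    for a, p, n in zip(anchors, positives, negatives):
        d_pos = math.dist(a, p)  # distance to the same-identity sample
        d_neg = math.dist(a, n)  # distance to the different-identity sample
        # Penalize triplets where the positive is not closer by at least `margin`.
        losses.append(max(d_pos - d_neg + margin, 0.0))
    return sum(losses) / len(losses)


def rank1_score(query_emb, query_ids, gallery_emb, gallery_ids):
    """Fraction of queries whose nearest gallery embedding shares their identity."""
    hits = 0
    for q, qid in zip(query_emb, query_ids):
        nearest = min(
            range(len(gallery_emb)),
            key=lambda j: math.dist(q, gallery_emb[j]),
        )
        hits += gallery_ids[nearest] == qid
    return hits / len(query_ids)


if __name__ == "__main__":
    # Toy 2-D embeddings, purely illustrative.
    print(triplet_loss([[0.0, 0.0]], [[0.0, 1.0]], [[0.5, 0.0]]))
    print(rank1_score(
        [[0.0, 0.0], [10.0, 10.0]], [1, 2],
        [[0.0, 1.0], [9.0, 9.0], [5.0, 5.0]], [1, 3, 2],
    ))
```

In a cross-dataset evaluation, the query and gallery embeddings would come from a dataset that the model was not trained on, which is the setting in which the abstract reports its Rank-1 improvement.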
