Improved Generative Model for Weakly Supervised Chest Anomaly Localization via Pseudo-paired Registration with Bilaterally Symmetrical Data Augmentation


Jul 21, 2022

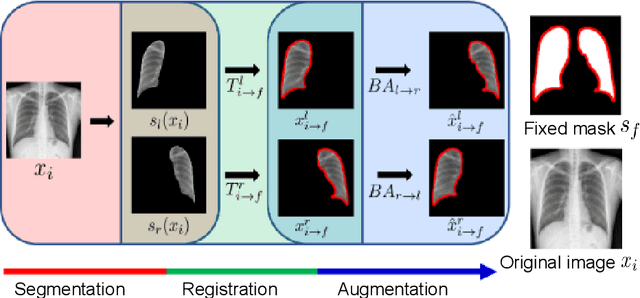

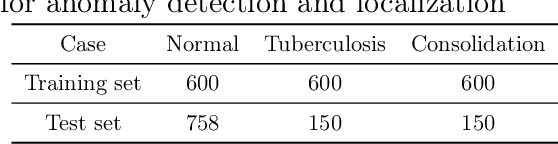

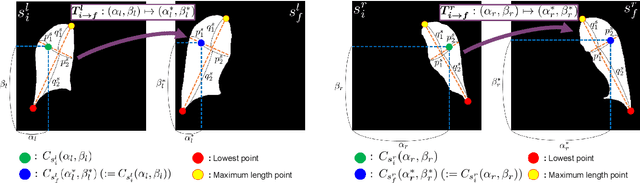

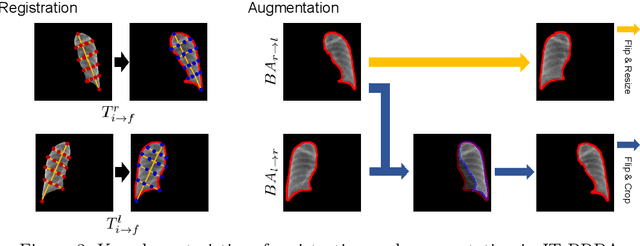

Image translation based on a generative adversarial network (GAN-IT) is a promising method for precise localization of abnormal regions in chest X-ray images (AL-CXR). However, heterogeneous unpaired datasets hinder existing methods from extracting key features and distinguishing normal from abnormal cases, resulting in inaccurate and unstable AL-CXR. To address this problem, we propose an improved two-stage GAN-IT involving registration and data augmentation. In the first stage, we introduce an invertible deep-learning-based registration technique that virtually and reasonably converts unpaired data into paired data for learning registration maps. This novel approach achieves high registration performance. In the second stage, we apply data augmentation that diversifies anomaly locations by swapping the left and right lung regions on the uniform registered frames, further improving performance by alleviating the imbalance between left-lung and right-lung lesions in the data distribution. Our method is intended to be applied to existing GAN-IT models, allowing existing architectures to benefit from key features for translation. Because AL-CXR performance improves uniformly when the proposed method is applied, we believe that GAN-IT for AL-CXR can be deployed in clinical environments, even when training data are scarce.
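The second-stage augmentation can be illustrated with a minimal sketch. It assumes that, after registration, every frame shares a common layout with the body midline on the central image column, so that swapping the left and right lung regions can be approximated by mirroring the frame about that column. The abstract does not specify the exact swapping procedure, so the mirroring choice, the function names `swap_left_right` and `augment_bilateral`, and the probability `p` are illustrative assumptions rather than the authors' implementation.

```python
from typing import Optional, Tuple

import numpy as np


def swap_left_right(
    image: np.ndarray, mask: Optional[np.ndarray] = None
) -> Tuple[np.ndarray, Optional[np.ndarray]]:
    """Mirror a registered frame of shape (H, W) about its vertical midline.

    Assumption: registration has already centred the midline, so a
    horizontal flip moves left-lung content to the right lung and vice versa.
    Any localization mask is flipped the same way to stay aligned.
    """
    flipped_image = np.flip(image, axis=1).copy()
    flipped_mask = None if mask is None else np.flip(mask, axis=1).copy()
    return flipped_image, flipped_mask


def augment_bilateral(
    image: np.ndarray,
    mask: Optional[np.ndarray],
    rng: np.random.Generator,
    p: float = 0.5,
) -> Tuple[np.ndarray, Optional[np.ndarray]]:
    """Apply the swap with probability p (hypothetical default of 0.5)."""
    if rng.random() < p:
        return swap_left_right(image, mask)
    return image, mask


# Toy example: a single bright "lesion" pixel in the left half of a 4x6 frame.
frame = np.zeros((4, 6), dtype=np.float32)
frame[1, 1] = 1.0
swapped, _ = swap_left_right(frame)
```

With `p = 0.5`, a lesion appears on either side of the registered frame about equally often over training, which is one simple way to reduce the left/right imbalance the abstract describes.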
