Improved EEG Classification by factoring in sensor topography

Paper and Code

May 22, 2019

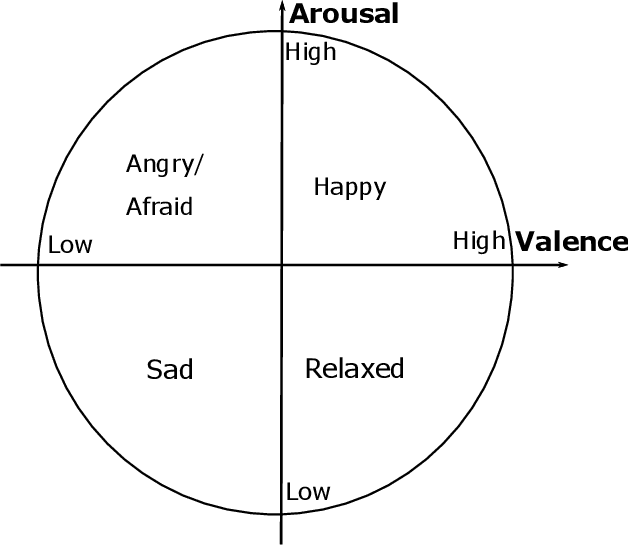

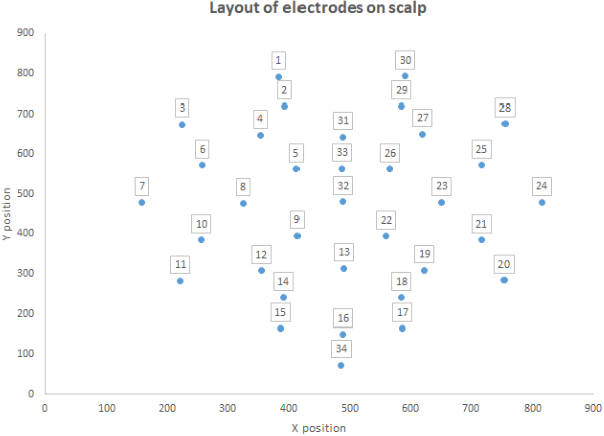

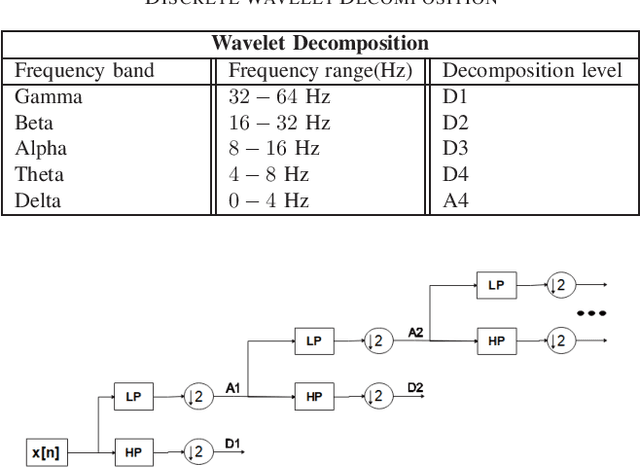

Electroencephalography (EEG) serves as an effective diagnostic tool for mental disorders and neurological abnormalities. Enhanced analysis and classification of EEG signals can help improve detection performance. This work presents a new approach that exploits knowledge of the spatial configuration of the EEG sensors to achieve higher detection accuracy. Two classification models are studied: one that ignores the configuration (model 1) and one that exploits it with different interpolation methods (model 2). The analysis is based on the information content of these signals represented in two different ways: concatenation of the channels of the frequency bands, and an image-like 2D representation of the EEG channel locations. Performance of these models is examined on two tasks: social anxiety disorder (SAD) detection, and emotion recognition using the DEAP dataset. The results bear out our hypothesis that model 2 significantly outperforms model 1, with accuracy $5$--$8\%$ higher for model 2 for each machine learning algorithm we investigated. Convolutional neural networks (CNNs) were found to provide much better performance than SVM and kNN classifiers.
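The two input representations described in the abstract can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration, not taken from the paper: the function names, the four sensor positions, the band-power values, the grid size, and the choice of inverse-distance weighting as the interpolation method (the abstract says only that "different interpolation methods" were used).

```python
import math

def concat_features(band_power):
    """Model-1 style input: flatten {band: [per-channel values]} into one vector.

    Sensor positions are ignored; bands are visited in sorted order.
    """
    return [v for band in sorted(band_power) for v in band_power[band]]

def idw_image(values, positions, size=8, power=2.0):
    """Model-2 style input: one image-like 2D map for a single frequency band.

    Per-channel values are interpolated onto a size x size grid over the unit
    square using inverse-distance weighting (an assumed interpolation method).
    positions holds hypothetical 2D sensor coordinates in [0, 1] x [0, 1].
    """
    image = []
    for row in range(size):
        y = row / (size - 1)
        line = []
        for col in range(size):
            x = col / (size - 1)
            num = den = 0.0
            exact = None
            for v, (px, py) in zip(values, positions):
                d = math.hypot(x - px, y - py)
                if d < 1e-12:
                    # Grid point coincides with a sensor: use its value directly.
                    exact = v
                    break
                w = 1.0 / d ** power
                num += w * v
                den += w
            line.append(exact if exact is not None else num / den)
        image.append(line)
    return image

def topo_images(band_power, positions, size=8):
    """Stack one interpolated map per band (bands in sorted order)."""
    return [idw_image(band_power[b], positions, size) for b in sorted(band_power)]

# Hypothetical toy data: four sensors at the corners of the unit square.
positions = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
band_power = {
    "alpha": [1.0, 2.0, 3.0, 4.0],
    "theta": [0.5, 0.5, 0.5, 0.5],
}

features = concat_features(band_power)              # flat vector, e.g. for SVM or kNN
images = topo_images(band_power, positions, size=3)  # band x row x col, e.g. for a CNN
```

The flat vector discards where each channel sits on the scalp, whereas the stacked maps keep neighbouring sensors adjacent in the grid, which is the kind of spatial structure a convolutional model can use.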
