Implicit Label Augmentation on Partially Annotated Clips via Temporally-Adaptive Features Learning

May 24, 2019

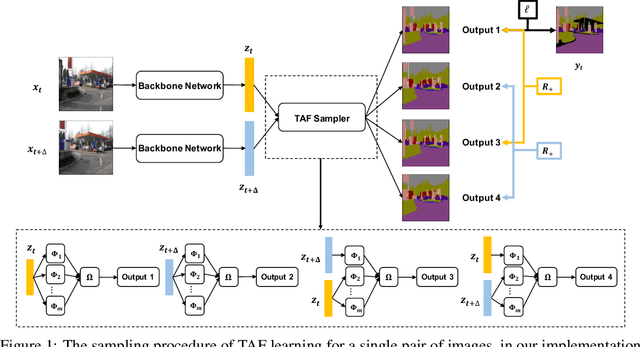

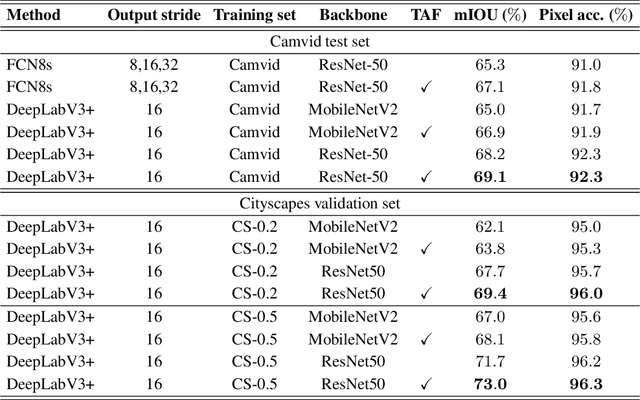

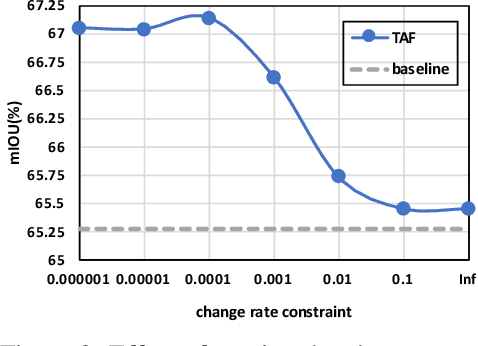

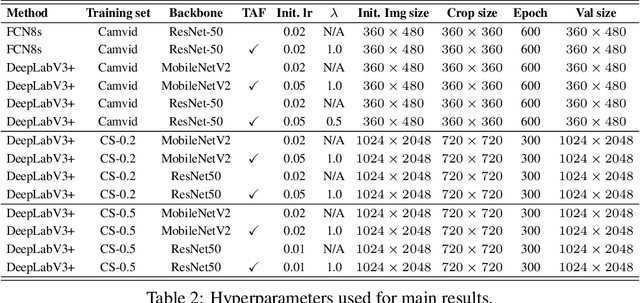

Partially annotated clips contain rich temporal context that can complement sparse key-frame annotations in supervising model training. We present a novel paradigm called Temporally-Adaptive Features (TAF) learning that uses such data to learn better single-frame models. By imposing distinct temporal change-rate constraints on different factors in the model, TAF enables learning from unlabeled frames, using their context to improve model accuracy. TAF generalizes "slow feature" learning, and we present much stronger empirical evidence than prior work, showing convincing gains on the challenging semantic segmentation task across a variety of architecture designs and on two popular datasets. TAF can be interpreted as an implicit label augmentation method, but it is a more principled formulation than existing explicit augmentation techniques. Our work thus connects two promising methods that use partially annotated clips for single-frame model training and can inspire future exploration in this direction.
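
The idea of "distinct temporal change-rate constraints on different factors" can be illustrated with a small sketch. The abstract does not say how the factors are defined or how the constraints are enforced, so everything below is an assumption made for illustration: the function names `temporal_change_penalty` and `clip_loss`, the per-dimension `rate_weights`, and the squared-difference penalty are hypothetical and follow the generic slow-feature style of regularizer, not the paper's actual formulation.

```python
import numpy as np


def temporal_change_penalty(features, rate_weights):
    """Weighted squared change between consecutive frames (hypothetical sketch).

    features:     array of shape (T, D), one D-dimensional feature per frame.
    rate_weights: array of shape (D,), non-negative. A large weight asks that
                  dimension to change slowly; a small weight lets it change fast.
    """
    features = np.asarray(features, dtype=float)
    rate_weights = np.asarray(rate_weights, dtype=float)
    # Frame-to-frame differences, shape (T - 1, D).
    diffs = features[1:] - features[:-1]
    # Weight each dimension's squared change, sum over dimensions,
    # then average over the T - 1 consecutive frame pairs.
    return float(np.mean(np.sum(rate_weights * diffs ** 2, axis=1)))


def clip_loss(supervised_loss, features, rate_weights, reg_strength=0.1):
    """Key-frame supervised loss plus the temporal penalty on all frames.

    supervised_loss is assumed to be computed elsewhere on the labeled
    key frame; the penalty needs no labels, so unlabeled frames contribute.
    """
    penalty = temporal_change_penalty(features, rate_weights)
    return supervised_loss + reg_strength * penalty


if __name__ == "__main__":
    # Three frames, two feature dimensions; dimension 0 is constrained
    # more strongly than dimension 1 (weights are illustrative only).
    clip_features = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]]
    weights = [1.0, 0.5]
    print(temporal_change_penalty(clip_features, weights))
    print(clip_loss(1.0, clip_features, weights))
```

Setting every weight to the same value gives a uniform slow-feature penalty; letting the weights differ per factor is one simple way to express that some factors should vary faster over time than others.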
