Implicit assessment of language learning during practice as accurate as explicit testing


Sep 24, 2024

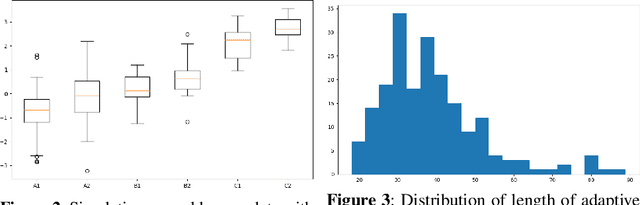

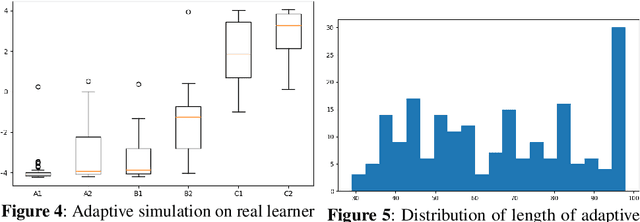

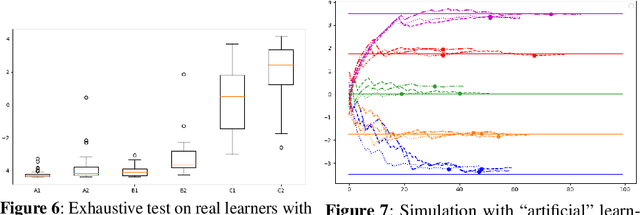

Assessing learner proficiency is an essential part of Intelligent Tutoring Systems (ITS). We use Item Response Theory (IRT) in computer-aided language learning to assess student ability in two contexts: in test sessions, and in exercises during practice sessions. Exhaustive testing across a wide range of skills can provide a detailed picture of proficiency, but may be undesirable for a number of reasons. Therefore, we first aim to replace exhaustive tests with efficient but accurate adaptive tests. We use learner data collected from exhaustive tests under imperfect conditions to train an IRT model that guides adaptive tests. Simulations and experiments with real learner data confirm that this approach is efficient and accurate. Second, we explore whether we can accurately estimate learner ability directly from practice with exercises, without testing. We transform learner data collected from exercise sessions into a form that can be used for IRT modeling. This is done by linking the exercises to *linguistic constructs*; the constructs are then treated as "items" within IRT. We present results from large-scale studies with thousands of learners. Using teacher assessments of student ability as "ground truth," we compare the ability estimates obtained from tests with those obtained from exercises. The experiments confirm that the IRT models can produce accurate ability estimates based on exercises.
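To make the two ideas in the abstract concrete, the following is a minimal sketch and not the authors' implementation. It assumes a two-parameter logistic (2PL) IRT model, a grid-search maximum-likelihood ability estimate, and maximum-information item selection for the adaptive test; the abstract does not say which IRT variant or selection rule the paper uses. The construct names, the item parameters, and the rule that every exercise answer counts as one response per linked construct are all hypothetical.

```python
import math

# Hypothetical item bank: construct -> (discrimination a, difficulty b).
ITEMS = {
    "articles": (1.0, -1.0),
    "past_tense": (1.2, 0.0),
    "subjunctive": (0.9, 1.5),
}

def p_correct(theta, a, b):
    """2PL model: probability that a learner with ability theta answers correctly."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def log_likelihood(theta, responses, items):
    """Log-likelihood of (item_id, correct) responses at ability theta."""
    total = 0.0
    for item_id, correct in responses:
        a, b = items[item_id]
        p = p_correct(theta, a, b)
        total += math.log(p) if correct else math.log(1.0 - p)
    return total

def estimate_ability(responses, items, lo=-4.0, hi=4.0, steps=801):
    """Grid-search maximum-likelihood estimate of theta on [lo, hi]."""
    grid = [lo + i * (hi - lo) / (steps - 1) for i in range(steps)]
    return max(grid, key=lambda t: log_likelihood(t, responses, items))

def item_information(theta, a, b):
    """Fisher information of a 2PL item at ability theta."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)

def next_item(theta, items, asked):
    """Adaptive step: pick the unasked item that is most informative at theta."""
    candidates = [i for i in items if i not in asked]
    return max(candidates, key=lambda i: item_information(theta, *items[i]))

def to_item_responses(exercise_log, exercise_to_constructs):
    """Assumed transformation: each exercise answer becomes one response
    for every linguistic construct the exercise is linked to."""
    responses = []
    for exercise_id, correct in exercise_log:
        for construct in exercise_to_constructs[exercise_id]:
            responses.append((construct, correct))
    return responses

# Hypothetical practice log: (exercise_id, answered_correctly).
log = [("ex1", True), ("ex2", True), ("ex3", False)]
links = {"ex1": ["articles"], "ex2": ["past_tense"], "ex3": ["subjunctive"]}
practice_responses = to_item_responses(log, links)
theta_hat = estimate_ability(practice_responses, ITEMS)
```

In this sketch the same `estimate_ability` function serves both contexts: it accepts responses from a test session or responses derived from practice exercises, which is the sense in which constructs act as "items".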
