ILASH: A Predictive Neural Architecture Search Framework for Multi-Task Applications


Dec 03, 2024

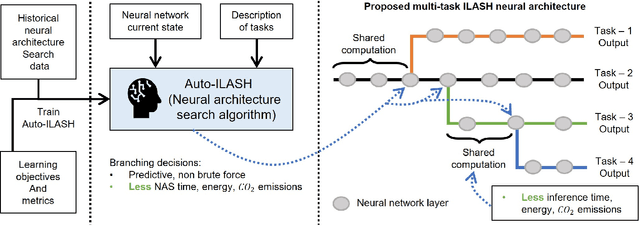

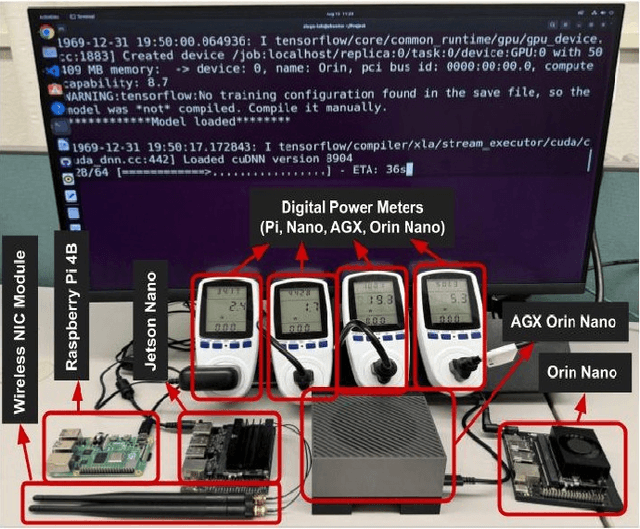

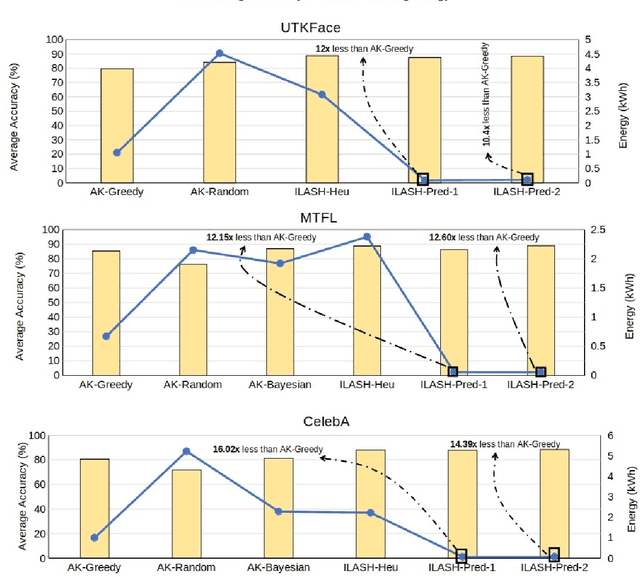

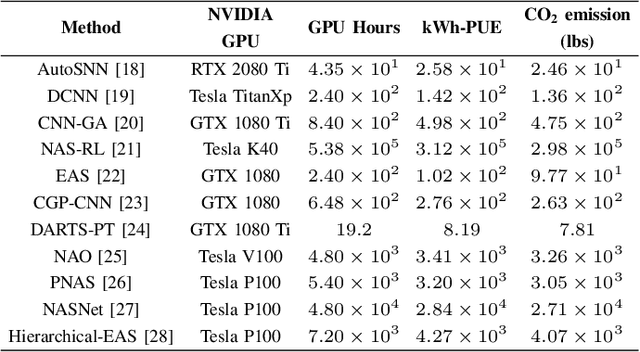

Artificial intelligence (AI) is widely used in fields including healthcare, autonomous vehicles, robotics, traffic monitoring, and agriculture. Many modern AI applications in these fields are multi-task in nature (i.e., they perform multiple analyses on the same data) and are deployed on resource-constrained edge devices, which requires the AI models to be efficient across metrics such as power, frame rate, and size. For these use cases, we propose a new neural network architecture paradigm (ILASH) that uses layer sharing to minimize power utilization, increase frame rate, and reduce model size. Additionally, we propose a novel neural architecture search framework (ILASH-NAS) that efficiently constructs these models for a given set of tasks and device constraints. The proposed NAS framework uses a data-driven, predictive approach to make the search efficient in terms of energy, time, and CO2 emissions. We perform extensive evaluations of the layer-shared architecture paradigm (ILASH) and the ILASH-NAS framework on four open-source datasets (UTKFace, MTFL, CelebA, and Taskonomy). Compared with AutoKeras, ILASH-NAS shows significant improvements in both generated model performance and search efficiency, with up to 16x lower energy utilization, CO2 emissions, and training/search time.
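To make the model-size argument for layer sharing concrete, the following minimal sketch compares the parameter count of one network per task against a single shared trunk with one small head per task. It is an illustration under stated assumptions, not the ILASH architecture itself: the fully connected layers, the layer widths, the head sizes, and all function names are hypothetical and do not come from the paper.

```python
# Illustrative only: layer widths, head sizes, and function names are
# hypothetical and are not taken from the ILASH paper.

def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out

def stack_params(widths):
    """Total parameters of a chain of dense layers with the given widths."""
    return sum(dense_params(a, b) for a, b in zip(widths, widths[1:]))

def separate_models(trunk_widths, head_outputs):
    """One full network per task: every task repeats the trunk layers."""
    last = trunk_widths[-1]
    return sum(stack_params(trunk_widths) + dense_params(last, out)
               for out in head_outputs)

def shared_model(trunk_widths, head_outputs):
    """One trunk shared by all tasks, plus one small head per task."""
    last = trunk_widths[-1]
    return stack_params(trunk_widths) + sum(dense_params(last, out)
                                            for out in head_outputs)

trunk = [512, 256, 128]   # assumed feature-extractor widths
heads = [2, 5, 10]        # assumed output sizes for three tasks

if __name__ == "__main__":
    separate = separate_models(trunk, heads)
    shared = shared_model(trunk, heads)
    print(f"separate networks: {separate} parameters")
    print(f"shared trunk:      {shared} parameters")
    print(f"size ratio:        {separate / shared:.2f}x")
```

In this toy setting the trunk dominates the parameter count, so sharing it across tasks shrinks the total by close to the number of tasks. Fewer layers to evaluate per input is also the intuition behind the frame rate and power claims, although the actual savings depend on which layers are shared and on the target device.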
