iDF-SLAM: End-to-End RGB-D SLAM with Neural Implicit Mapping and Deep Feature Tracking

Sep 16, 2022

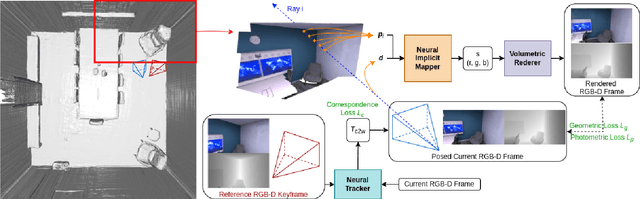

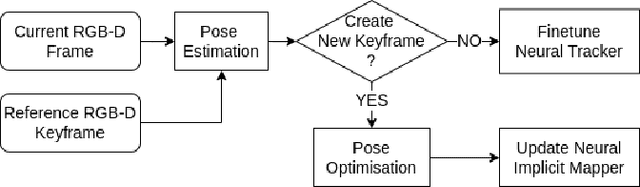

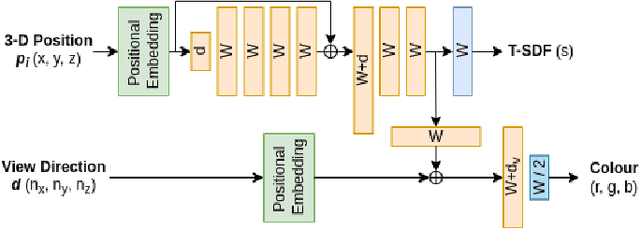

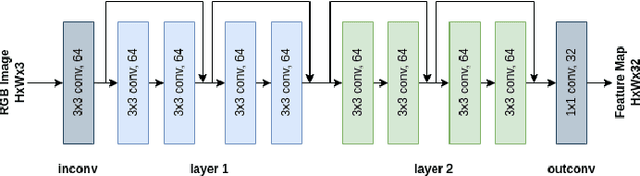

We propose iDF-SLAM, a novel end-to-end RGB-D SLAM system that adopts a feature-based deep neural tracker as the front-end and a NeRF-style neural implicit mapper as the back-end. The neural implicit mapper is trained on the fly. The neural tracker is pretrained on the ScanNet dataset and is then fine-tuned alongside the training of the neural implicit mapper. With this design, iDF-SLAM can learn to use scene-specific features for camera tracking, which enables lifelong learning of the SLAM system. Training of both the tracker and the mapper is self-supervised and requires no ground-truth poses. We evaluate iDF-SLAM on the Replica and ScanNet datasets and compare the results with two recent NeRF-based neural SLAM systems. The proposed iDF-SLAM demonstrates state-of-the-art results in scene reconstruction and competitive performance in camera tracking.
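
To make the phrase "NeRF-style neural implicit mapper" trained with self-supervision more concrete, the following is a minimal sketch of the standard NeRF volume-rendering quadrature along a single ray, plus a toy loss that compares the rendered color and depth with an RGB-D observation. It is not the authors' implementation: the function names `render_ray` and `self_supervised_loss`, the density-based formulation, the L1 terms, and their equal weighting are all assumptions made for illustration, and the actual mapper in iDF-SLAM may represent and render the scene differently.

```python
import math


def render_ray(ts, sigmas, colors):
    """Composite samples along one ray with the standard NeRF quadrature.

    ts:     sample distances along the ray (increasing)
    sigmas: volume density predicted at each sample (hypothetical network output)
    colors: RGB triple predicted at each sample (hypothetical network output)
    Returns (rendered_depth, rendered_color, weights).
    """
    transmittance = 1.0  # probability that the ray reaches the current sample
    depth = 0.0
    color = [0.0, 0.0, 0.0]
    weights = []
    for i, (t, sigma, c) in enumerate(zip(ts, sigmas, colors)):
        # Spacing to the next sample; the last sample reuses the previous spacing.
        if i + 1 < len(ts):
            delta = ts[i + 1] - t
        else:
            delta = t - ts[i - 1] if i > 0 else 1.0
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        w = transmittance * alpha               # contribution of this sample
        weights.append(w)
        depth += w * t
        color = [acc + w * ch for acc, ch in zip(color, c)]
        transmittance *= 1.0 - alpha
    return depth, color, weights


def self_supervised_loss(rendered_depth, rendered_color,
                         observed_depth, observed_color):
    """Toy L1 loss against an RGB-D pixel (assumed form, equal weighting)."""
    depth_term = abs(rendered_depth - observed_depth)
    color_term = sum(abs(r - o) for r, o in
                     zip(rendered_color, observed_color)) / 3.0
    return depth_term + color_term


if __name__ == "__main__":
    # Three samples; only the middle one is opaque, so the ray "hits" at t = 2.
    d, rgb, w = render_ray(
        [1.0, 2.0, 3.0],
        [0.0, 1e9, 0.0],
        [[1, 0, 0], [0, 1, 0], [0, 0, 1]],
    )
    print(d, rgb, self_supervised_loss(d, rgb, 2.0, [0, 1, 0]))
```

Because a loss of this kind depends only on the observed RGB-D frame and the camera pose used to cast the ray, it needs no ground-truth pose, which is the sense in which such training can be self-supervised.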
