Hyperdimensional Computing Empowered Federated Foundation Model over Wireless Networks for Metaverse


Aug 26, 2024

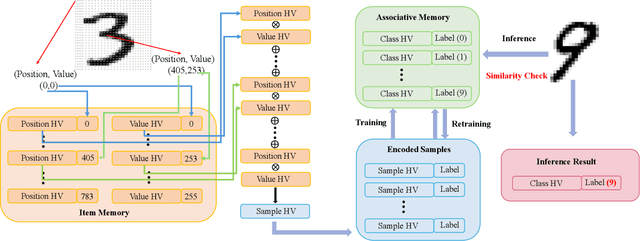

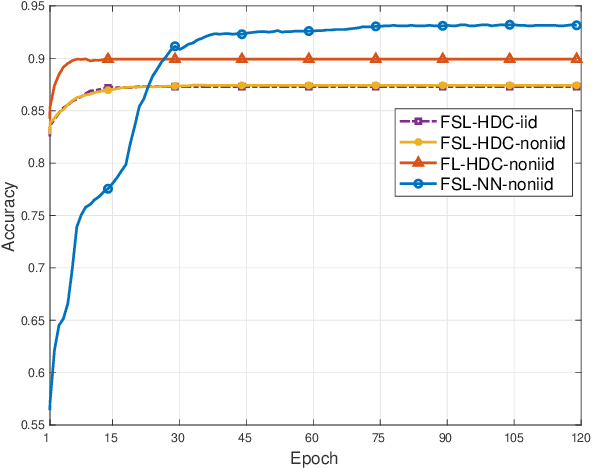

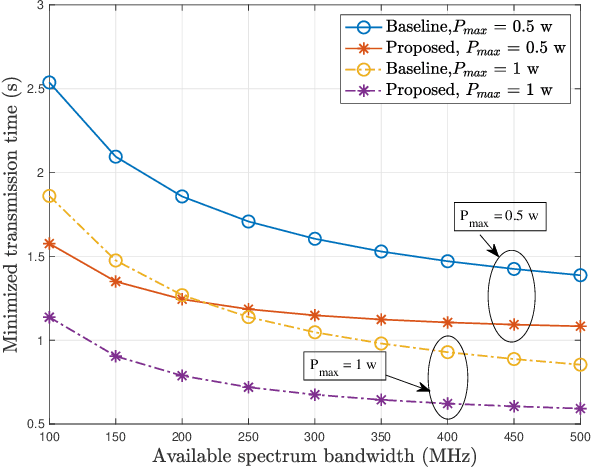

The Metaverse, an emerging collective virtual space that merges augmented reality with persistent virtual worlds, requires advanced artificial intelligence (AI) and communication technologies to support immersive, interactive experiences. Federated learning (FL) is a promising technique for collaboratively training AI models while preserving data privacy. However, FL suffers from high communication overhead and substantial computational demands, particularly for neural network (NN) models. To address these issues, we propose an integrated federated split learning and hyperdimensional computing (FSL-HDC) framework for emerging foundation models. The approach reduces communication cost, computation load, and privacy risk, which makes it well suited to resource-constrained edge devices in the Metaverse that must support real-time, responsive interaction. We also introduce an optimization algorithm that jointly optimizes transmission power and bandwidth to minimize the maximum transmission time from any user to the server. Simulation results on the MNIST dataset show that FSL-HDC achieves an accuracy of approximately 87.5%, slightly lower than that of FL-HDC. However, FSL-HDC reaches a convergence speed approximately 3.733 times that of FSL-NN and is robust to non-IID data distributions. Moreover, the proposed optimization algorithm reduces the maximum transmission time by up to 64% compared with the baseline.
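To make the hyperdimensional computing idea concrete, the following is a minimal sketch of a generic HDC classifier with federated aggregation of class hypervectors. It is not the paper's FSL-HDC implementation: the dimensionality `D`, the position/level binding scheme, the summation-based aggregation, and every function name here are assumptions chosen for illustration, and the split-learning part of the framework is not modeled at all.

```python
import random

D = 2000  # hypervector dimensionality (assumed value, for illustration only)


def random_hv(rng):
    """Draw a random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(D)]


def make_item_memory(n_features, n_levels, seed=0):
    """Shared item memory: one hypervector per feature position and per level.

    All clients are assumed to build it from the same seed.
    """
    rng = random.Random(seed)
    positions = [random_hv(rng) for _ in range(n_features)]
    levels = [random_hv(rng) for _ in range(n_levels)]
    return positions, levels


def encode(sample, positions, levels):
    """Bind each position with its quantized level, bundle, then binarize."""
    acc = [0] * D
    for pos, value in zip(positions, sample):
        lvl = levels[value]
        for d in range(D):
            acc[d] += pos[d] * lvl[d]  # element-wise binding
    return [1 if a >= 0 else -1 for a in acc]  # ties map to +1


def train_local(samples, labels, n_classes, positions, levels):
    """A client bundles its encoded samples into per-class hypervectors."""
    protos = [[0] * D for _ in range(n_classes)]
    for sample, label in zip(samples, labels):
        hv = encode(sample, positions, levels)
        proto = protos[label]
        for d in range(D):
            proto[d] += hv[d]
    return protos


def aggregate(client_protos):
    """Server-side step (assumed): sum class hypervectors across clients."""
    n_classes = len(client_protos[0])
    return [
        [sum(client[k][d] for client in client_protos) for d in range(D)]
        for k in range(n_classes)
    ]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sum(x * x for x in a) ** 0.5
    norm_b = sum(y * y for y in b) ** 0.5
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def predict(sample, protos, positions, levels):
    """Classify by the most similar class hypervector."""
    hv = encode(sample, positions, levels)
    scores = [cosine(hv, proto) for proto in protos]
    return scores.index(max(scores))
```

In this sketch each client would call `train_local` on its own data and upload only its class hypervectors, the server would call `aggregate`, and any device could then call `predict` with the aggregated hypervectors. Training here is a single pass of additions rather than gradient descent, which is the general reason HDC is considered lightweight for edge devices.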
