Hyp-RL: Hyperparameter Optimization by Reinforcement Learning

Jun 27, 2019

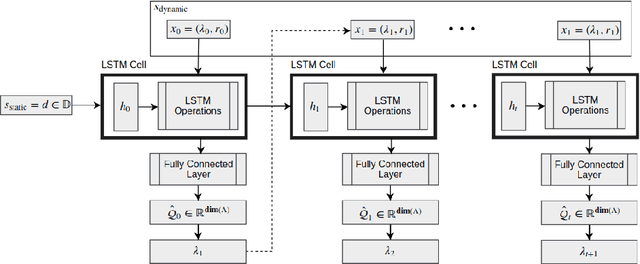

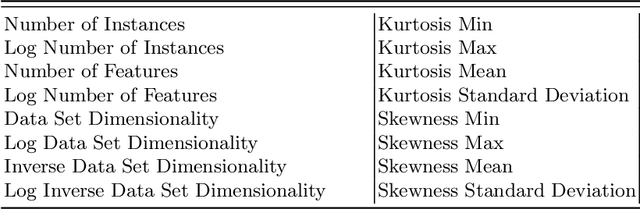

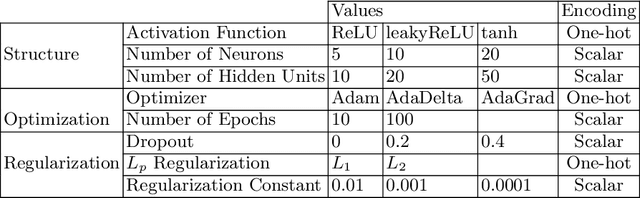

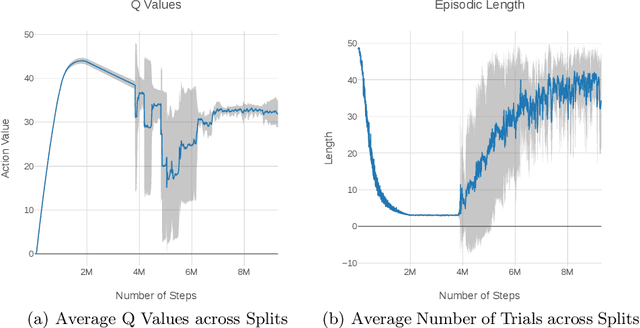

Hyperparameter tuning is an omnipresent problem in machine learning, as it is integral to obtaining state-of-the-art performance for any model. Most often, hyperparameters are optimized by training a model on a grid of possible hyperparameter values and taking the setting that performs best on a validation sample (grid search). More recently, methods have been introduced that build a so-called surrogate model, which predicts the validation loss for a specific hyperparameter setting, model, and dataset, and then sequentially select the next hyperparameter setting to test based on a heuristic function of the surrogate model's expected value and uncertainty, called the acquisition function (sequential model-based Bayesian optimization, SMBO). In this paper, we model hyperparameter optimization as a sequential decision problem (which hyperparameter setting to test next) and address it with reinforcement learning. This way, our model does not have to rely on a heuristic acquisition function as SMBO does, but can learn which hyperparameters to test next based on the subsequent reduction in validation loss they eventually lead to, either because they yield good models themselves or because they allow the hyperparameter selection policy to build a better surrogate model that can choose better hyperparameters later on. Experiments on a large battery of 50 data sets demonstrate that our method outperforms the state-of-the-art approaches for hyperparameter learning.
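To make the sequential-decision framing concrete, the following is a minimal sketch and not the paper's implementation. It assumes a one-dimensional hyperparameter grid, a toy validation loss, a reward equal to the negative validation loss, and a state consisting of the history of tested settings; the names `HPOEpisode`, `run_episode`, `sweep_policy`, `make_random_policy`, and `toy_loss` are all hypothetical. A trained reinforcement learning policy would take the place of the two placeholder policies shown here.

```python
import random
from typing import Callable, List, Sequence, Tuple

History = List[Tuple[float, float]]          # (hyperparameter value, validation loss)
Policy = Callable[[History, int], int]       # maps state and action count to an action


class HPOEpisode:
    """Toy episode: each action picks one grid point to evaluate (assumed setup)."""

    def __init__(self, grid: Sequence[float],
                 validation_loss: Callable[[float], float], budget: int) -> None:
        self.grid = list(grid)
        self.validation_loss = validation_loss
        self.budget = budget
        self.history: History = []           # the state the policy observes

    def done(self) -> bool:
        return len(self.history) >= self.budget

    def step(self, action: int) -> float:
        """Evaluate the chosen setting and return the reward (negative loss)."""
        if self.done():
            raise RuntimeError("trial budget exhausted")
        config = self.grid[action]
        loss = self.validation_loss(config)
        self.history.append((config, loss))
        return -loss

    def best(self) -> Tuple[float, float]:
        """Return the (setting, loss) pair with the lowest loss seen so far."""
        return min(self.history, key=lambda pair: pair[1])


def run_episode(env: HPOEpisode, policy: Policy) -> Tuple[float, float]:
    """Let the policy choose settings until the budget is spent."""
    while not env.done():
        env.step(policy(env.history, len(env.grid)))
    return env.best()


def sweep_policy(history: History, n_actions: int) -> int:
    """Grid-search baseline: visit the grid points in order."""
    return len(history) % n_actions


def make_random_policy(seed: int) -> Policy:
    """Random-search placeholder standing in for a learned policy."""
    rng = random.Random(seed)
    return lambda history, n_actions: rng.randrange(n_actions)


def toy_loss(x: float) -> float:
    """Hypothetical validation loss with its minimum at x = 0.3."""
    return (x - 0.3) ** 2


grid = [i / 10 for i in range(11)]
best_config, best_loss = run_episode(
    HPOEpisode(grid, toy_loss, budget=len(grid)), sweep_policy)
print(best_config, best_loss)
```

With a budget equal to the grid size, `sweep_policy` reproduces grid search. With a smaller budget, the quality of the result depends entirely on which settings the policy chooses to test, which is the decision the abstract proposes to learn.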
