Human Body Model based ID using Shape and Pose Parameters


Dec 06, 2023

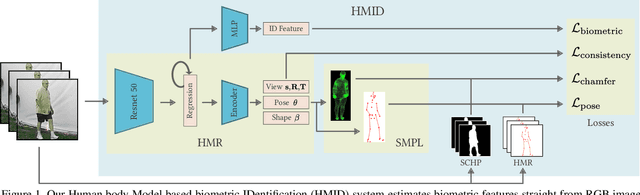

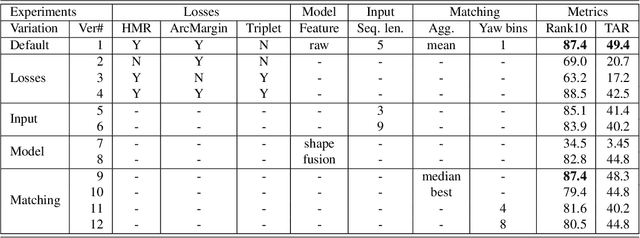

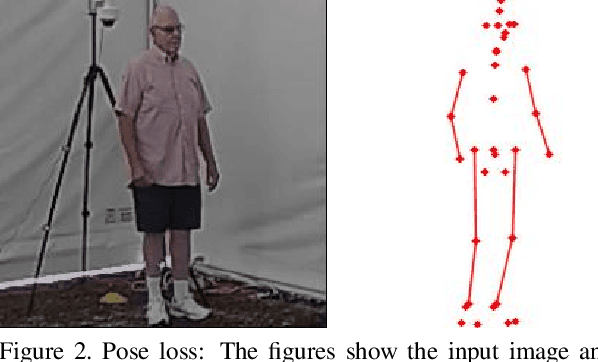

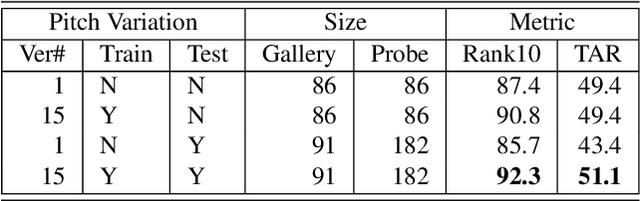

We present a Human Body Model based IDentification (HMID) system that is jointly trained for shape, pose, and biometric identification. HMID builds on the Human Mesh Recovery (HMR) network, and we propose additional losses that improve and stabilize shape estimation and biometric identification while preserving the pose and shape output. When the HMID network is trained with these additional shape and pose losses, its biometric identification performance improves significantly over an identical model trained without them. HMID operates on raw images rather than silhouettes and performs robust recognition on images collected at range and altitude, because many anthropometric properties are reasonably invariant to clothing, view, and range. We report results on the USF dataset and on the BRIAR dataset, which includes probes with both clothing and view changes. Our approach (using body model losses) shows a significant improvement in Rank20 accuracy and True Accuracy Rate on the BRIAR evaluation dataset.
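To make the idea of joint training concrete, the following is a minimal sketch of how an identification loss might be combined with shape and pose losses into a single objective. It is not the paper's formulation: the abstract does not specify the loss terms, so the cross-entropy identification term, the mean-squared-error shape and pose terms, the weights `w_id`, `w_shape`, `w_pose`, and all function names are assumptions chosen for illustration.

```python
import math


def softmax_cross_entropy(logits, target_index):
    """Cross-entropy of one sample's identity logits against the true identity."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target_index]


def mean_squared_error(pred, ref):
    """Mean squared difference between a predicted and a reference vector."""
    return sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(pred)


def joint_loss(id_logits, id_target,
               shape_pred, shape_ref,
               pose_pred, pose_ref,
               w_id=1.0, w_shape=1.0, w_pose=1.0):
    """Hypothetical joint objective: a weighted sum of three terms.

    The choice of terms and weights is an assumption for illustration only.
    """
    loss_id = softmax_cross_entropy(id_logits, id_target)
    loss_shape = mean_squared_error(shape_pred, shape_ref)
    loss_pose = mean_squared_error(pose_pred, pose_ref)
    return w_id * loss_id + w_shape * loss_shape + w_pose * loss_pose


# Toy values: two candidate identities, 2-D shape and pose vectors.
loss = joint_loss(
    id_logits=[0.0, 0.0], id_target=0,
    shape_pred=[1.0, 1.0], shape_ref=[0.0, 0.0],
    pose_pred=[0.5, 0.5], pose_ref=[0.5, 0.5],
)
print(loss)
```

In this sketch, setting `w_shape` and `w_pose` to zero reduces the objective to identification alone, which corresponds to the kind of baseline the abstract compares against (an identical model trained without the body model losses).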
