HRDNet: High-resolution Detection Network for Small Objects


Jun 13, 2020

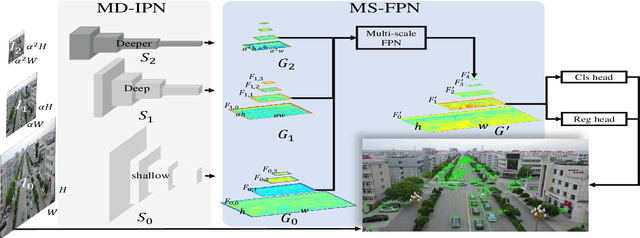

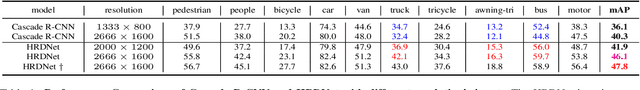

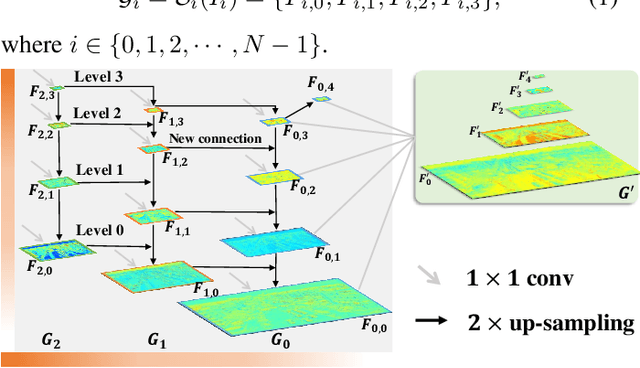

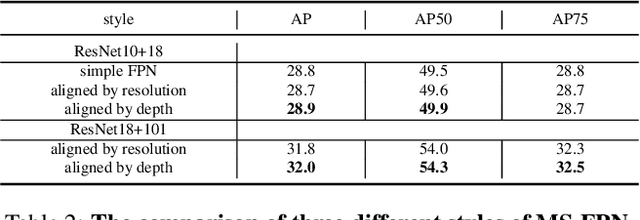

Small object detection is challenging because small objects carry little detailed information and may even disappear in the deeper layers of a network. Feeding high-resolution images into the network usually alleviates this issue. However, simply enlarging the resolution causes further problems: it aggravates the large variation in object scale and introduces prohibitive computational cost. To keep the benefits of high-resolution images without introducing new problems, we propose the High-Resolution Detection Network (HRDNet). HRDNet takes inputs at multiple resolutions and processes them with backbones of multiple depths. To take full advantage of the resulting features, we propose the Multi-Depth Image Pyramid Network (MD-IPN) and the Multi-Scale Feature Pyramid Network (MS-FPN). MD-IPN maintains positional information at multiple resolutions by using backbones of different depths. Specifically, the high-resolution input is fed into a shallow network to preserve more positional information and reduce the computational cost, while the low-resolution input is fed into a deep network to extract more semantics. By extracting features from high to low resolutions, MD-IPN improves small object detection while maintaining performance on medium and large objects. MS-FPN aligns and fuses the multi-scale feature groups generated by MD-IPN to reduce the information imbalance between these multi-scale, multi-level features. Extensive experiments and ablation studies are conducted on the standard benchmark datasets MS COCO 2017 and Pascal VOC 2007/2012, and on a typical small object dataset, VisDrone 2019. Notably, HRDNet achieves state-of-the-art results on these datasets and performs better on small objects.
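
The pairing rule behind MD-IPN (the highest-resolution input goes to the shallowest backbone, the lowest-resolution input to the deepest) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the 2x average-pool downsampling, and the depth values 18, 50, and 101 are assumptions chosen for illustration, and no actual backbone is run.

```python
def downsample2x(img):
    """Halve height and width by averaging each 2x2 block (assumed pooling)."""
    h, w = len(img), len(img[0])
    return [
        [(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
         for x in range(0, w - 1, 2)]
        for y in range(0, h - 1, 2)
    ]


def build_pyramid(img, levels):
    """Return [full-res, half-res, quarter-res, ...] with `levels` entries."""
    pyramid = [img]
    for _ in range(levels - 1):
        pyramid.append(downsample2x(pyramid[-1]))
    return pyramid


def assign_backbones(pyramid, depths):
    """Pair the highest resolution with the shallowest backbone depth.

    `depths` are hypothetical layer counts; they are sorted ascending so
    that resolution decreases as depth increases.
    """
    if len(pyramid) != len(depths):
        raise ValueError("need exactly one backbone depth per pyramid level")
    return [
        {"height": len(im), "width": len(im[0]), "depth": d}
        for im, d in zip(pyramid, sorted(depths))
    ]


# Toy 8x8 "image"; a real input would be a full-size photo.
image = [[float(x + y) for x in range(8)] for y in range(8)]
pyramid = build_pyramid(image, levels=3)
plan = assign_backbones(pyramid, depths=[101, 18, 50])

for stream in plan:
    print(stream)
```

In this sketch each entry of `plan` describes one input stream. In HRDNet the feature groups produced by these streams would then be aligned and fused by MS-FPN, which is not modeled here.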
