HiFi++: a Unified Framework for Neural Vocoding, Bandwidth Extension and Speech Enhancement

Paper and Code

Mar 24, 2022

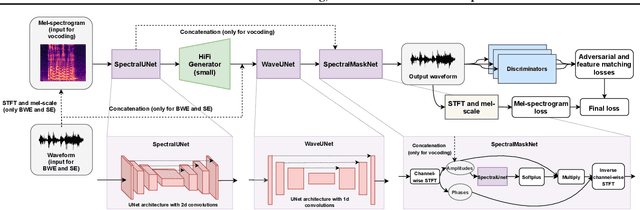

Generative adversarial networks have recently demonstrated outstanding performance in neural vocoding, outperforming the best autoregressive and flow-based models. In this paper, we show that this success can be extended to other tasks of conditional audio generation. In particular, building upon HiFi vocoders, we propose HiFi++, a novel general framework for neural vocoding, bandwidth extension, and speech enhancement. We show that with the improved generator architecture and simplified multi-discriminator training, HiFi++ performs on par with the state of the art in these tasks while using significantly less memory and fewer computational resources. The effectiveness of our approach is validated through a series of extensive experiments.

* Preprint
