Hierarchical Video Generation for Complex Data


Jun 04, 2021

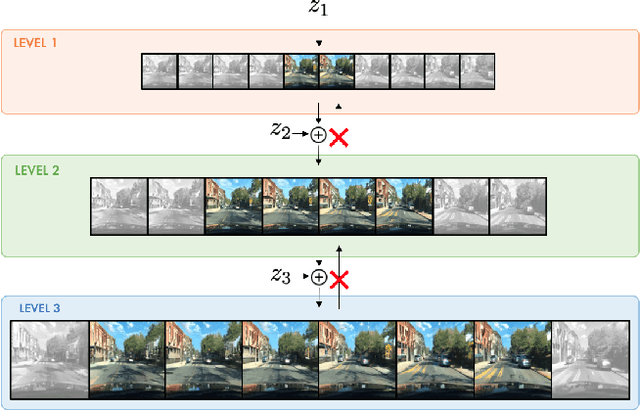

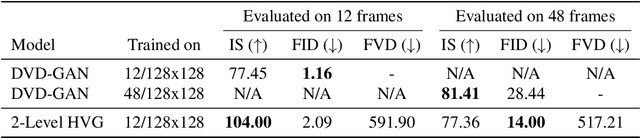

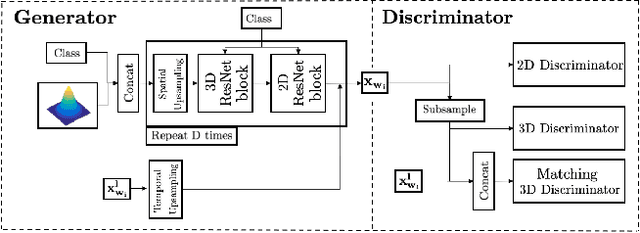

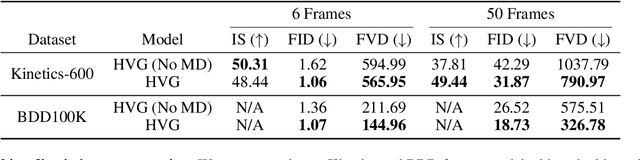

Videos can often be created by first outlining a global description of the scene and then adding local details. Inspired by this, we propose a hierarchical model for video generation that follows a coarse-to-fine approach. First, our model generates a low-resolution video that establishes the global scene structure; subsequent levels in the hierarchy then refine it. We train each level in the hierarchy sequentially on partial views of the videos. This reduces the computational complexity of our generative model, allowing it to scale to high-resolution videos beyond a few frames. We validate our approach on Kinetics-600 and BDD100K, for which we train a three-level model capable of generating 256x256 videos with 48 frames.
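The coarse-to-fine data flow described above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the per-level shapes in `LEVEL_SHAPES`, the random "generator", the noise-based `refine` step, and the reading of a "partial view" as a contiguous window of frames are all assumptions made for illustration. Only the three levels and the final 48-frame 256x256 output come from the abstract.

```python
import numpy as np

# Hypothetical per-level output shapes (frames, height, width).
# Only the final 48-frame 256x256 output is stated in the abstract.
LEVEL_SHAPES = [(12, 64, 64), (24, 128, 128), (48, 256, 256)]


def generate_coarse(rng, shape):
    """Stand-in for the first level: a random low-resolution RGB video."""
    frames, height, width = shape
    return rng.random((frames, height, width, 3), dtype=np.float32)


def upsample(video, target_shape):
    """Nearest-neighbour upsampling in time and space by integer factors."""
    frames, height, width = target_shape
    video = np.repeat(video, frames // video.shape[0], axis=0)
    video = np.repeat(video, height // video.shape[1], axis=1)
    video = np.repeat(video, width // video.shape[2], axis=2)
    return video


def refine(rng, coarse, target_shape):
    """Stand-in for a refinement level: upsample, then add small 'details'."""
    up = upsample(coarse, target_shape)
    detail = 0.05 * rng.standard_normal(up.shape, dtype=np.float32)
    return np.clip(up + detail, 0.0, 1.0)


def partial_view(video, num_frames, start):
    """Assumed form of a partial view: a contiguous window of frames."""
    return video[start:start + num_frames]


def generate(seed=0):
    """Run all levels in order, each refining the previous level's output."""
    rng = np.random.default_rng(seed)
    video = generate_coarse(rng, LEVEL_SHAPES[0])
    for shape in LEVEL_SHAPES[1:]:
        video = refine(rng, video, shape)
    return video
```

Calling `generate()` returns an array of shape `(48, 256, 256, 3)`. In this sketch, a refinement level trained on windows produced by `partial_view` would never need to hold the full-resolution video in memory during training, which is the kind of saving the abstract attributes to training on partial views.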
