Hierarchical Ranking for Answer Selection


Feb 01, 2021

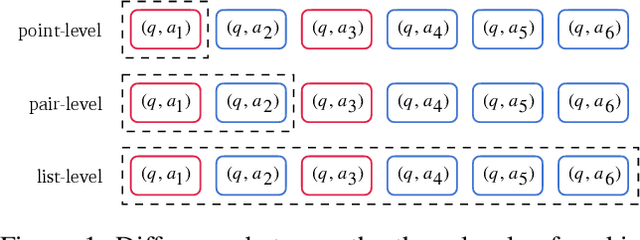

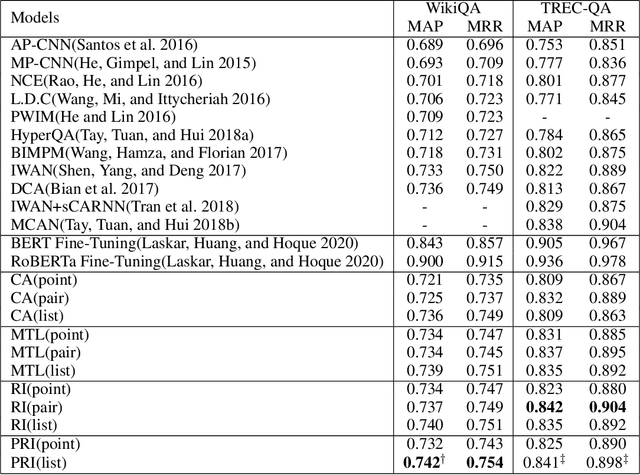

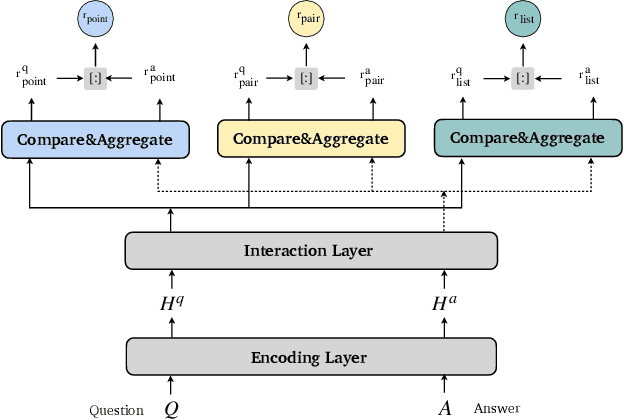

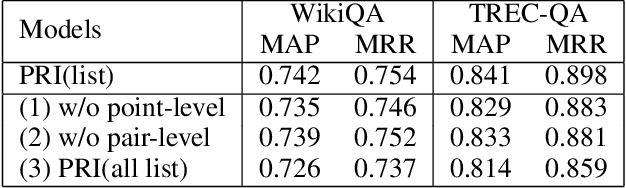

Answer selection is the task of choosing the positive answers from a pool of candidate answers for a given question. In this paper, we propose a novel strategy for answer selection, called hierarchical ranking. We introduce three levels of ranking: point-level, pair-level, and list-level. Each level formulates its optimization objective using supervisory information from a different perspective, but all three pursue the same goal of ranking candidate answers. The three levels are therefore related and can reinforce one another. We take the well-performing compare-aggregate model as the backbone and explore three schemes for applying the hierarchical rankings jointly: a Multi-Task Learning (MTL) scheme, the Ranking Integration (RI) scheme, and the Progressive Ranking Integration (PRI) scheme. Experimental results on two public datasets, WikiQA and TREC-QA, demonstrate that the proposed hierarchical ranking is effective. Our method achieves state-of-the-art (non-BERT) performance on both TREC-QA and WikiQA.
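To make the three ranking levels concrete, the following is a minimal sketch of how point-level, pair-level, and list-level objectives might be combined in a multi-task fashion for one question and its candidate pool. The abstract does not specify the loss functions, so the choices here (binary cross-entropy, a margin hinge loss, and a softmax cross-entropy over the list) are common stand-ins and should be read as assumptions. The function names, the `margin` parameter, and the `weights` tuple are likewise hypothetical, and the scores are assumed to come from some backbone such as a compare-aggregate model.

```python
import math


def pointwise_loss(scores, labels):
    """Point-level: binary cross-entropy on each candidate independently.

    Uses the numerically stable logits form:
    max(s, 0) - s * y + log(1 + exp(-|s|)).
    """
    total = 0.0
    for s, y in zip(scores, labels):
        total += max(s, 0.0) - s * y + math.log1p(math.exp(-abs(s)))
    return total / len(scores)


def pairwise_loss(scores, labels, margin=1.0):
    """Pair-level: hinge loss over every (positive, negative) pair.

    `margin` is an assumed hyperparameter, not a value from the paper.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    pairs = [(p, n) for p in pos for n in neg]
    if not pairs:
        return 0.0
    return sum(max(0.0, margin - (p - n)) for p, n in pairs) / len(pairs)


def listwise_loss(scores, labels):
    """List-level: cross-entropy between softmax(scores) and normalized labels."""
    n_pos = sum(labels)
    if n_pos == 0:
        return 0.0
    m = max(scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in scores))
    return -sum((y / n_pos) * (s - log_z) for s, y in zip(scores, labels))


def joint_loss(scores, labels, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three levels (an assumed MTL-style combination)."""
    w_point, w_pair, w_list = weights
    return (
        w_point * pointwise_loss(scores, labels)
        + w_pair * pairwise_loss(scores, labels)
        + w_list * listwise_loss(scores, labels)
    )


if __name__ == "__main__":
    labels = [1, 0, 0]            # first candidate is the positive answer
    good = [3.0, -2.0, -1.0]      # positive answer ranked first
    bad = [-2.0, 3.0, 1.0]        # positive answer ranked last
    print(joint_loss(good, labels), joint_loss(bad, labels))
```

Each term sees the same scores but uses the labels differently: per candidate, per positive/negative pair, and over the whole candidate list. A score vector that ranks the positive answer first yields a lower joint loss than one that ranks it last. The RI and PRI schemes named in the abstract are not sketched here, since the abstract gives no detail on how they integrate the rankings.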
