Heterogeneous Learning in Zero-Sum Stochastic Games with Incomplete Information


Mar 13, 2011

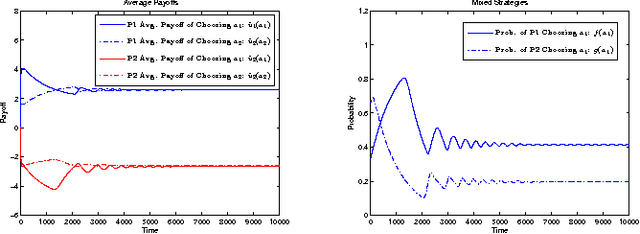

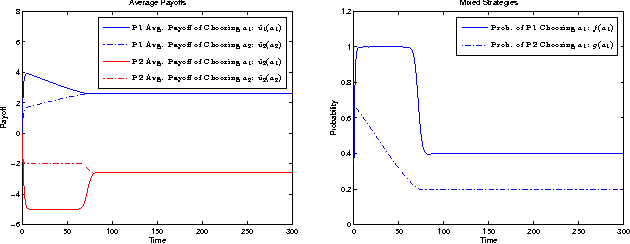

Learning algorithms are essential for applying game theory in networking environments. In dynamic, decentralized settings where traffic, topology, and channel states may vary over time and communication between agents is impractical, it is important to formulate and study games of incomplete information together with fully distributed learning algorithms that require each agent to have only minimal information about the other agents. In this paper, we address this challenge and introduce heterogeneous learning schemes, in which each agent adopts a distinct learning pattern, in the context of games with incomplete information. We use stochastic approximation techniques to show that the heterogeneous learning schemes can be studied through their deterministic ordinary differential equation (ODE) counterparts. Depending on the players' learning rates, these ODEs can differ from the standard replicator dynamics, (myopic) best response (BR) dynamics, logit dynamics, and fictitious play dynamics. We apply the results to a class of security games in which the attacker and the defender adopt different learning schemes because of differences in their rationality levels and in the information they acquire.
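To make the idea of heterogeneous learning concrete, the following is a minimal illustrative sketch and not the schemes analyzed in the paper. It assumes a hypothetical zero-sum matrix game `A` (defender payoff, with the attacker receiving the negative), a defender who uses a simple reinforcement-style strategy update, and an attacker who keeps per-action payoff estimates and plays a logit (Boltzmann-Gibbs) response to them. Each player observes only its own realized payoff. The function name `simulate`, the step sizes, and the `temperature` parameter are all assumptions chosen for illustration.

```python
import numpy as np

def simulate(A, steps=5000, temperature=0.1, seed=0):
    """Two players with different learning rules in a zero-sum matrix game.

    A[i, j] is the defender's payoff when the defender plays i and the
    attacker plays j; the attacker receives -A[i, j]. All update rules
    and step sizes here are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    n_def, n_att = A.shape

    # Rescale payoffs to [0, 1] so the reinforcement step is a convex
    # combination and the defender's strategy stays in the simplex.
    lo, hi = A.min(), A.max()
    scale = hi - lo if hi > lo else 1.0

    x = np.full(n_def, 1.0 / n_def)  # defender's mixed strategy
    y = np.full(n_att, 1.0 / n_att)  # attacker's mixed strategy
    q = np.zeros(n_att)              # attacker's payoff estimate per action

    for t in range(1, steps + 1):
        i = rng.choice(n_def, p=x)
        j = rng.choice(n_att, p=y)
        u_def = (A[i, j] - lo) / scale  # defender's rescaled payoff in [0, 1]
        u_att = 1.0 - u_def             # attacker's rescaled payoff (zero-sum)

        # Defender: reinforcement-style update toward the action just played,
        # weighted by the payoff it produced.
        lam = 1.0 / (t + 1)
        e = np.zeros(n_def)
        e[i] = 1.0
        x = x + lam * u_def * (e - x)
        x = x / x.sum()  # guard against floating-point drift

        # Attacker: update the estimate for the action just played, then
        # respond with a logit distribution over the estimates.
        mu = 1.0 / (t + 1) ** 0.6
        q[j] += mu * (u_att - q[j])
        z = np.exp((q - q.max()) / temperature)
        y = z / z.sum()

    return x, y

if __name__ == "__main__":
    # Hypothetical 2x2 payoff matrix used only to exercise the sketch.
    A = np.array([[1.0, -1.0], [-1.0, 1.0]])
    x, y = simulate(A)
    print("defender strategy:", x)
    print("attacker strategy:", y)
```

The two players use different step-size sequences (`lam` and `mu`), which loosely mirrors the abstract's remark that the limiting ODE depends on the players' learning rates. The sketch makes no claim about convergence.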
