HAN: Higher-order Attention Network for Spoken Language Understanding

Paper and Code

Aug 26, 2021

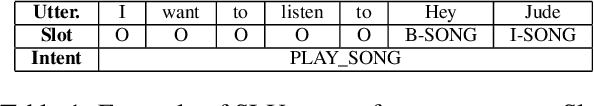

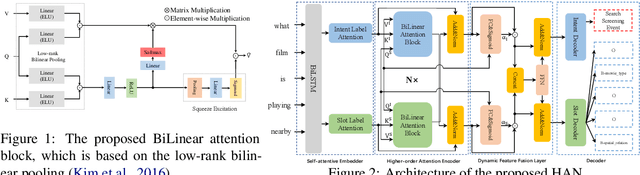

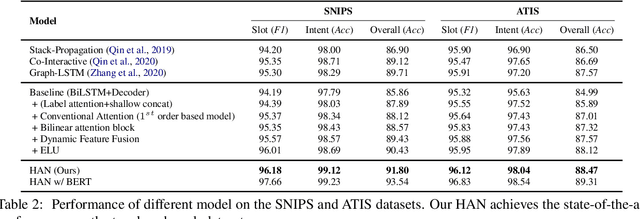

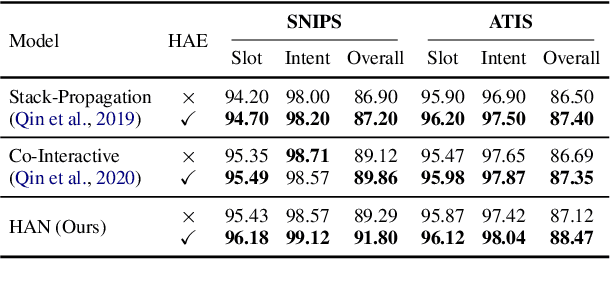

Spoken Language Understanding (SLU), which comprises intent detection and slot filling, is a core component of human-computer interaction. Because the two subtasks are closely related, they place high demands on fine-grained feature interaction, i.e., interaction between token-level intent features and slot features. Previous work mainly models the relationship between the two subtasks jointly with attention-based models, but does not explore the order of the attention. In this paper, we propose to replace conventional attention with our Bilinear attention block and show that the resulting Higher-order Attention Network (HAN) improves performance on the SLU task. Importantly, we conduct extensive analysis to explore the effectiveness of higher-order attention.
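
To make the contrast with conventional attention concrete, here is a minimal sketch of a generic bilinear scoring function, score(q, k) = qᵀ W k, in plain Python. It is not the paper's Bilinear attention block, whose details the abstract does not give; the function names and the matrix `W` are hypothetical and chosen for illustration only. With `W` set to the identity, the score reduces to ordinary dot-product attention.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [dot(row, v) for row in W]


def bilinear_attention(query, keys, values, W):
    """Attend over keys using score(q, k) = q^T W k.

    W is a hypothetical learned interaction matrix (an assumption of this
    sketch); with W = identity this is plain dot-product attention.
    """
    scores = [dot(query, matvec(W, k)) for k in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context


# Toy example: one query (e.g. an intent feature) attending over two
# token-level features (e.g. slot features).
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]

identity = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]  # lets dimension 0 of q interact with dimension 1 of k

weights_dot, context_dot = bilinear_attention(query, keys, values, identity)
weights_bil, context_bil = bilinear_attention(query, keys, values, swap)
```

In this toy setup the identity matrix favors the first key, while the `swap` matrix favors the second, which shows how a bilinear form lets different feature dimensions of the query and key interact in a way a plain dot product cannot.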
