Group Regularized Estimation under Structural Hierarchy

Nov 08, 2016

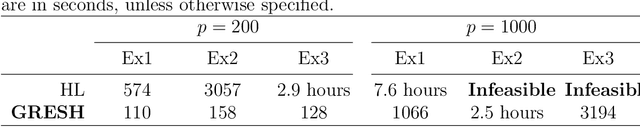

Variable selection for models that include interactions between explanatory variables often needs to obey certain hierarchical constraints. Weak structural hierarchy requires that an interaction term enter the model only if at least one of its associated main effects is present; strong structural hierarchy requires both. This problem has recently attracted considerable attention, but existing computational algorithms converge slowly even with a moderate number of predictors. Moreover, in contrast to the rich literature on ordinary variable selection, there is a lack of statistical theory establishing reasonably low error rates for hierarchical variable selection. This work investigates a new class of estimators that use multiple group penalties to capture structural parsimony. We give minimax lower bounds for strong and weak hierarchical variable selection and show that the proposed estimators enjoy sharp rate oracle inequalities. A general-purpose algorithm is developed with guaranteed convergence and global optimality. Simulations and real data experiments demonstrate the efficiency and efficacy of the proposed approach.
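To make the two hierarchy constraints concrete, the following minimal sketch checks whether a fitted model satisfies weak or strong hierarchy. It is an illustration only and is not the estimator or algorithm proposed in the paper. The names `b` (main-effect coefficients), `Phi` (a p-by-p matrix of interaction coefficients), and `satisfies_hierarchy` are assumptions introduced here, not notation taken from the source.

```python
import numpy as np

def satisfies_hierarchy(b, Phi, kind="strong", tol=0.0):
    """Check a hierarchy constraint on a fitted interaction model.

    b    : length-p array of main-effect coefficients (hypothetical name).
    Phi  : p-by-p array; Phi[j, k] is the coefficient of the x_j * x_k term.
    kind : "strong" (both main effects required) or "weak" (at least one).
    tol  : coefficients with absolute value <= tol are treated as zero.
    """
    b = np.asarray(b, dtype=float)
    Phi = np.asarray(Phi, dtype=float)
    if Phi.shape != (b.size, b.size):
        raise ValueError("Phi must be a p-by-p matrix matching len(b)")

    main_on = np.abs(b) > tol        # which main effects are in the model
    inter_on = np.abs(Phi) > tol     # which interactions are in the model

    if kind == "strong":
        # Interaction (j, k) is allowed only if both j and k are selected.
        allowed = main_on[:, None] & main_on[None, :]
    elif kind == "weak":
        # Interaction (j, k) is allowed if j or k is selected.
        allowed = main_on[:, None] | main_on[None, :]
    else:
        raise ValueError("kind must be 'strong' or 'weak'")

    # Every active interaction must sit in an allowed position.
    return bool(np.all(~inter_on | allowed))

# Example: main effects 0 and 2 are active; main effect 1 is not.
b = np.array([1.0, 0.0, 2.0])

Phi_a = np.zeros((3, 3))
Phi_a[0, 1] = 0.5                    # x_0 * x_1: only x_0 is a main effect

Phi_b = np.zeros((3, 3))
Phi_b[0, 2] = 0.5                    # x_0 * x_2: both main effects present

print(satisfies_hierarchy(b, Phi_a, "weak"))    # weak hierarchy check
print(satisfies_hierarchy(b, Phi_a, "strong"))  # strong hierarchy check
print(satisfies_hierarchy(b, Phi_b, "strong"))
```

In this example the model with the `x_0 * x_1` interaction obeys weak hierarchy but violates strong hierarchy, while the model with the `x_0 * x_2` interaction obeys both.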
