Grasp Type Estimation for Myoelectric Prostheses using Point Cloud Feature Learning


Aug 07, 2019

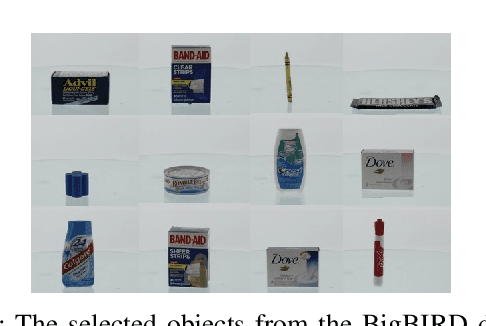

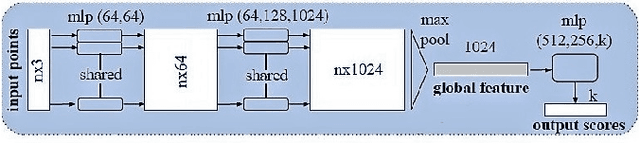

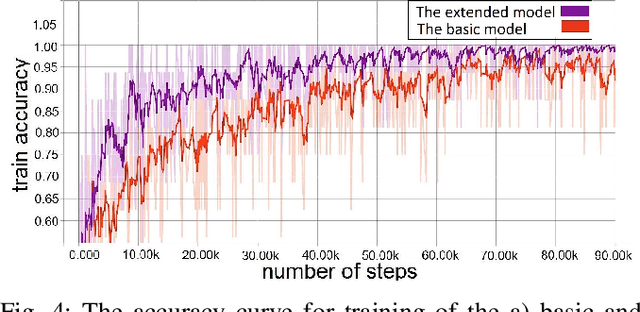

Prosthetic hands can help people with limb difference return to their daily routines. Commercial prostheses, however, have several limitations in providing acceptable dexterity. We address these limitations by augmenting the prosthetic hand with an off-the-shelf depth sensor, which lets the prosthesis see the object's depth, record a single-view (2.5-D) snapshot, and estimate an appropriate grasp type using PointNet, a deep network architecture that operates on 3-D point clouds. The user acts as the supervisor throughout the procedure by accepting or rejecting the suggested grasp type. We achieved a grasp classification accuracy of up to 88%. Unlike RGB data, depth data provides all the object shape information required for grasp recognition. PointNet not only makes the use of 3-D data practical but also avoids excessive computation. Augmenting prosthetic hands with such a semi-autonomous system can lead to better differentiation of grasp types, less burden on the user, and better performance.
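To make the data flow concrete, the following is a minimal sketch of two ideas the abstract relies on: back-projecting a single-view depth snapshot into a 3-D point cloud, and aggregating per-point features with an order-independent max-pool, which is the core idea behind PointNet. It is an illustration under stated assumptions, not the authors' implementation. The pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), the toy depth values, the random weights, and the function names are all hypothetical. A real PointNet uses learned multi-layer perceptrons and a classification head rather than a single random linear layer.

```python
import random


def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image (rows of depths in metres) to (x, y, z) points.

    Assumes a pinhole camera model; pixels with depth <= 0 are treated as
    missing measurements and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def global_feature(points, weights):
    """PointNet-style aggregation (simplified).

    The same linear map followed by ReLU is applied to every point, then each
    feature channel is max-pooled over all points. Because max is symmetric,
    the result does not depend on the order of the points.
    """
    per_point = [
        [max(0.0, sum(w * c for w, c in zip(row, p))) for row in weights]
        for p in points
    ]
    return [max(channel) for channel in zip(*per_point)]


# Toy 3x3 depth snapshot (hypothetical values); 0.0 marks a missing pixel.
depth = [
    [0.0, 0.5, 0.5],
    [0.5, 0.4, 0.5],
    [0.0, 0.5, 0.0],
]
cloud = depth_to_point_cloud(depth, fx=2.0, fy=2.0, cx=1.0, cy=1.0)

# Random stand-in for learned weights: 4 feature channels over 3 coordinates.
rng = random.Random(0)
weights = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(4)]

feature = global_feature(cloud, weights)

# Shuffling the points leaves the pooled feature unchanged.
shuffled = cloud[:]
rng.shuffle(shuffled)
same_after_shuffle = global_feature(shuffled, weights) == feature
```

In a full system, the pooled feature would be passed to a classifier that outputs a grasp type, which the user then accepts or rejects. The order independence shown here is what allows an unordered set of depth-sensor points to be processed directly, without first converting it to a voxel grid.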
