Grasp Pre-shape Selection by Synthetic Training: Eye-in-hand Shared Control on the Hannes Prosthesis


Mar 18, 2022

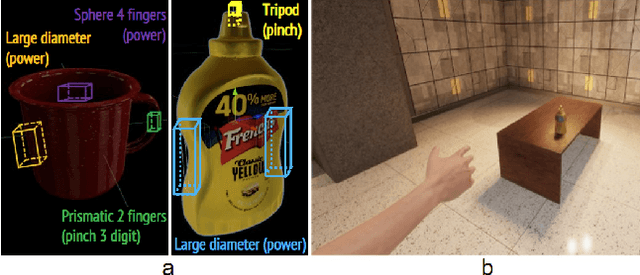

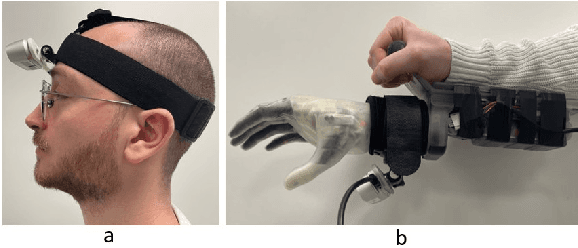

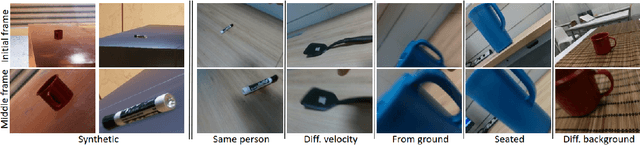

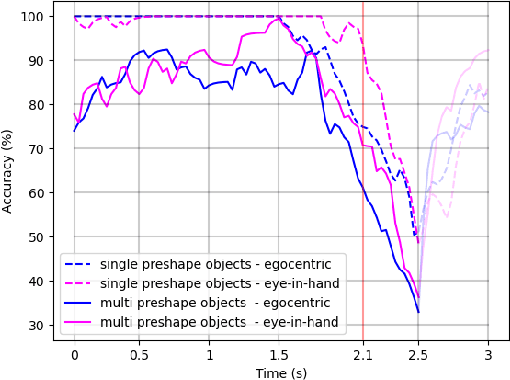

We consider the task of object grasping with a prosthetic hand capable of multiple grasp types. In this setting, communicating the intended grasp type often imposes a high cognitive load on the user, which can be reduced by adopting shared autonomy frameworks. Among these, so-called eye-in-hand systems automatically control the hand aperture and pre-shaping before the grasp, based on visual input from a camera on the wrist. In this work, we present an eye-in-hand, learning-based approach for hand pre-shape classification from RGB sequences. To reduce the need for tedious data collection sessions when training the system, we devise a pipeline for rendering synthetic visual sequences of hand trajectories. We tackle the peculiarity of the eye-in-hand setting by means of a model of human arm trajectories, with domain randomization over relevant visual elements. We develop a sensorized setup to acquire real human grasping sequences for benchmarking and show that, when compared on practical use cases, models trained with our synthetic dataset achieve better generalization performance than models trained on real data. Finally, we integrate our model on the Hannes prosthetic hand and show its practical effectiveness. Our code and our real and synthetic datasets will be released upon acceptance.
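As a rough illustration of what domain randomization over visual elements can look like, the sketch below draws a fresh set of scene parameters for each synthetic sequence. It is not the authors' pipeline: the `SceneParams` fields, the `sample_scene` helper, and all value ranges are hypothetical and chosen only to show the idea.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    """Hypothetical visual parameters randomized per synthetic sequence."""
    background_id: int        # index into an assumed pool of backgrounds
    light_intensity: float    # relative brightness multiplier
    object_hue_shift: float   # small color perturbation of the target object
    camera_jitter_deg: float  # wrist-camera orientation noise, in degrees


def sample_scene(rng: random.Random, n_backgrounds: int = 10) -> SceneParams:
    """Draw one randomized scene configuration (all ranges are assumptions)."""
    return SceneParams(
        background_id=rng.randrange(n_backgrounds),
        light_intensity=rng.uniform(0.5, 1.5),
        object_hue_shift=rng.uniform(-0.1, 0.1),
        camera_jitter_deg=rng.uniform(-5.0, 5.0),
    )


# A seeded generator keeps the synthetic dataset reproducible.
rng = random.Random(0)
scenes = [sample_scene(rng) for _ in range(100)]
```

Each sampled configuration would then be handed to a renderer together with a simulated arm trajectory, so that a classifier trained on the output sees wide visual variation rather than a single fixed appearance.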
