GraphPoseGAN: 3D Hand Pose Estimation from a Monocular RGB Image via Adversarial Learning on Graphs

Dec 04, 2019

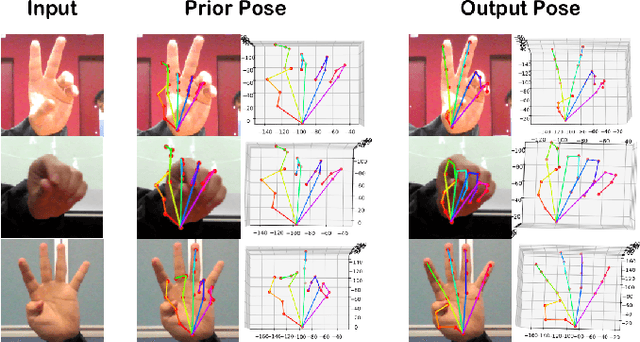

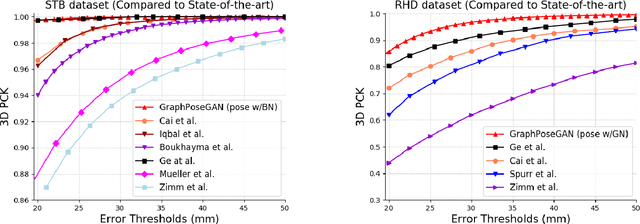

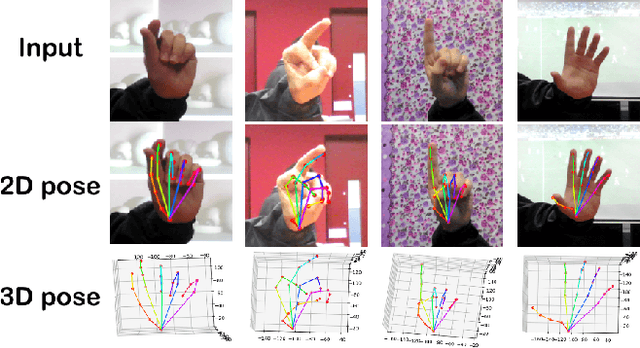

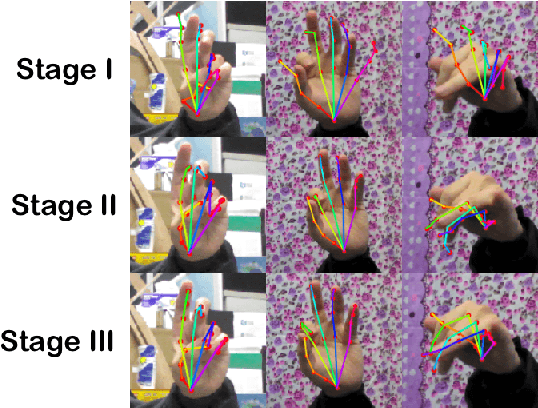

This paper addresses 3D hand pose estimation from a monocular RGB image. We are the first to propose a graph-based generative adversarial learning framework regularized by a hand model, aiming at realistic 3D hand pose estimation. Our model consists of a 3D hand pose generator and a multi-source discriminator. The generator takes a single monocular RGB image as input and is essentially a residual graph convolution module that uses a parametric deformable hand model as a prior for pose refinement. The multi-source discriminator takes hand poses, bones, and the input image as input to capture intrinsic features; it distinguishes the predicted 3D hand pose from the ground truth, which leads to anthropomorphically valid hand poses. In addition, we propose two novel bone-constrained loss functions that explicitly characterize the morphable structure of hand poses. Extensive experiments demonstrate that our model achieves new state-of-the-art performance in 3D hand pose estimation from a monocular image on standard benchmarks.
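The abstract does not define the two bone-constrained losses, so the following is only a minimal sketch of what such losses could look like, not the paper's formulation. It assumes a 21-joint hand skeleton (wrist plus four joints per finger), and the names `PARENTS`, `bone_vectors`, `bone_length_loss`, and `bone_direction_loss` are hypothetical. One term penalizes differences in bone length, the other differences in bone direction, both computed from child-minus-parent joint offsets.

```python
import numpy as np

# Assumed skeleton layout (not from the paper): joint 0 is the wrist,
# and each of the five fingers is a chain of four joints rooted at the wrist.
PARENTS = np.array([-1,
                    0, 1, 2, 3,
                    0, 5, 6, 7,
                    0, 9, 10, 11,
                    0, 13, 14, 15,
                    0, 17, 18, 19])


def bone_vectors(joints):
    """Map (21, 3) joint positions to (20, 3) bone vectors (child - parent)."""
    child = np.arange(1, len(PARENTS))
    return joints[child] - joints[PARENTS[child]]


def bone_length_loss(pred, gt):
    """Mean squared difference between predicted and ground-truth bone lengths."""
    len_pred = np.linalg.norm(bone_vectors(pred), axis=1)
    len_gt = np.linalg.norm(bone_vectors(gt), axis=1)
    return float(np.mean((len_pred - len_gt) ** 2))


def bone_direction_loss(pred, gt, eps=1e-8):
    """Mean squared distance between unit bone directions."""
    b_pred = bone_vectors(pred)
    b_gt = bone_vectors(gt)
    u_pred = b_pred / (np.linalg.norm(b_pred, axis=1, keepdims=True) + eps)
    u_gt = b_gt / (np.linalg.norm(b_gt, axis=1, keepdims=True) + eps)
    return float(np.mean(np.sum((u_pred - u_gt) ** 2, axis=1)))
```

Both terms depend only on offsets between connected joints, so they are unchanged by a global translation of the hand. Uniformly scaling the prediction changes the length term but leaves the direction term essentially untouched, which is why a sketch like this keeps the two separate.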
