GraphMETRO: Mitigating Complex Distribution Shifts in GNNs via Mixture of Aligned Experts

Dec 07, 2023

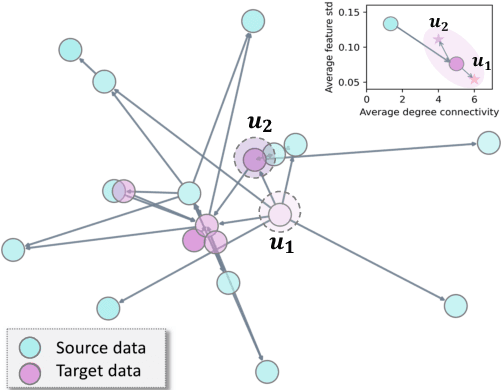

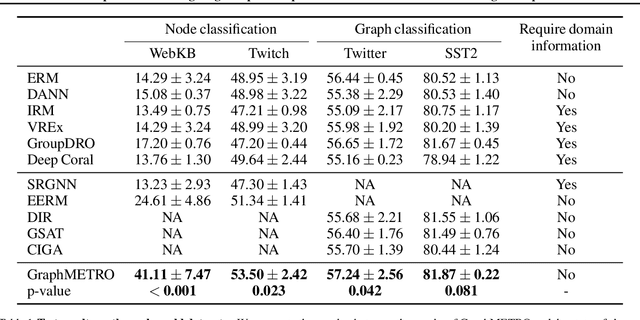

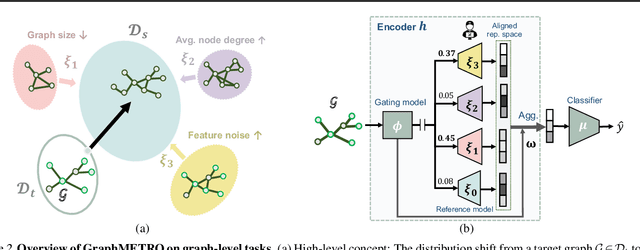

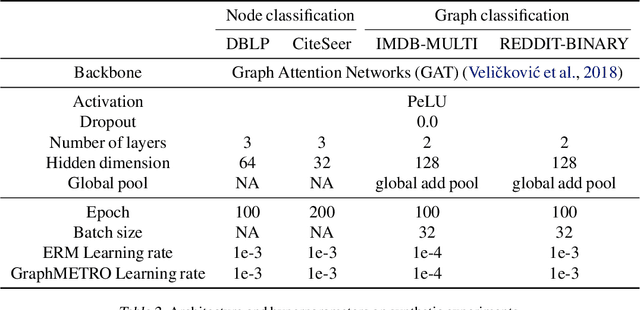

The ability of Graph Neural Networks (GNNs) to generalize across complex distributions is crucial for real-world applications. However, prior research has primarily focused on specific types of distribution shifts, such as larger graph size, or on shifts inferred from constructed data environments. Both approaches fall short when graphs face multiple, nuanced distribution shifts. For instance, in a social graph, one user node might experience increased interactions and content alterations while other user nodes encounter distinct shifts. Neglecting such complexities significantly impedes generalization. To address this, we present GraphMETRO, a novel framework that enhances GNN generalization under complex distribution shifts in both node-level and graph-level tasks. Our approach employs a mixture-of-experts (MoE) architecture with a gating model and expert models aligned in a shared representation space. The gating model identifies the key mixture components governing distribution shifts, while each expert generates representations that are invariant w.r.t. one mixture component. Finally, GraphMETRO aggregates the representations from multiple experts to generate the final invariant representation. Our experiments on synthetic and real-world datasets demonstrate GraphMETRO's superiority and interpretability. Notably, GraphMETRO achieves state-of-the-art performance on four real-world datasets from the GOOD benchmark, outperforming the best baselines on the WebKB and Twitch datasets by 67% and 4.2%, respectively.
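The gating-and-aggregation idea in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the linear maps stand in for the GNN gating model and GNN experts, the softmax-weighted sum is one assumed form of aggregation, and all names (`moe_aggregate`, `W_gate`, `W_experts`) are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def moe_aggregate(node_features, gate_weights, expert_weights):
    """Combine expert representations using gating scores.

    node_features:  (n, d_in) input features, one row per node.
    gate_weights:   (d_in, k) linear stand-in for the gating model (assumption).
    expert_weights: (k, d_in, d_out) linear stand-ins for k experts (assumption).
    Returns the aggregated (n, d_out) representation and the (n, k) gate scores.
    """
    # Gate scores: one weight per expert for each node, summing to 1.
    gate_scores = softmax(node_features @ gate_weights, axis=-1)
    # Each expert maps every node into the same shared output space.
    expert_reps = np.einsum("nd,kde->nke", node_features, expert_weights)
    # Assumed aggregation: gate-weighted sum over the expert axis.
    final = np.einsum("nk,nke->ne", gate_scores, expert_reps)
    return final, gate_scores


rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))             # 5 nodes, 8 input features
W_gate = rng.normal(size=(8, 3))        # 3 mixture components
W_experts = rng.normal(size=(3, 8, 4))  # 3 experts, 4-dim shared space
h, gates = moe_aggregate(x, W_gate, W_experts)
```

Because every expert writes into the same output space, the weighted sum is well defined. In this sketch the per-node gate scores could also be inspected to see which mixture component dominates for each node, which loosely mirrors the interpretability claim.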
