Graph Value Iteration

Paper and Code

Sep 20, 2022

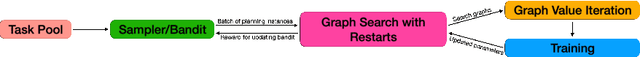

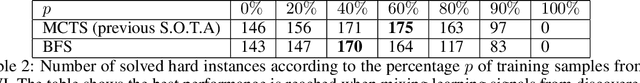

In recent years, deep Reinforcement Learning (RL) has been successful in various combinatorial search domains, such as two-player games and scientific discovery. However, directly applying deep RL in planning domains is still challenging. One major difficulty is that without a human-crafted heuristic function, reward signals remain zero unless the learning framework discovers any solution plan. Search space becomes \emph{exponentially larger} as the minimum length of plans grows, which is a serious limitation for planning instances with a minimum plan length of hundreds to thousands of steps. Previous learning frameworks that augment graph search with deep neural networks and extra generated subgoals have achieved success in various challenging planning domains. However, generating useful subgoals requires extensive domain knowledge. We propose a domain-independent method that augments graph search with graph value iteration to solve hard planning instances that are out of reach for domain-specialized solvers. In particular, instead of receiving learning signals only from discovered plans, our approach also learns from failed search attempts where no goal state has been reached. The graph value iteration component can exploit the graph structure of local search space and provide more informative learning signals. We also show how we use a curriculum strategy to smooth the learning process and perform a full analysis of how graph value iteration scales and enables learning.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge