Graph Tensor Networks: An Intuitive Framework for Designing Large-Scale Neural Learning Systems on Multiple Domains


Mar 23, 2023

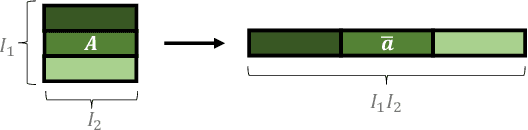

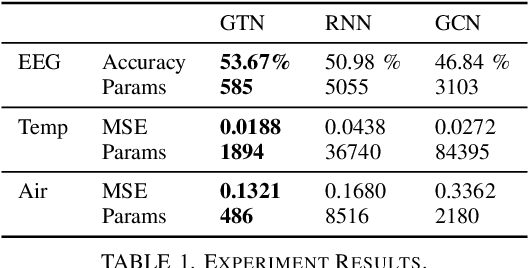

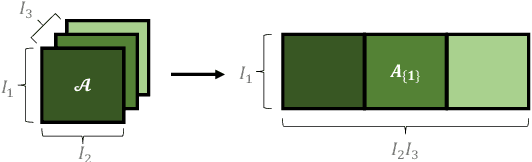

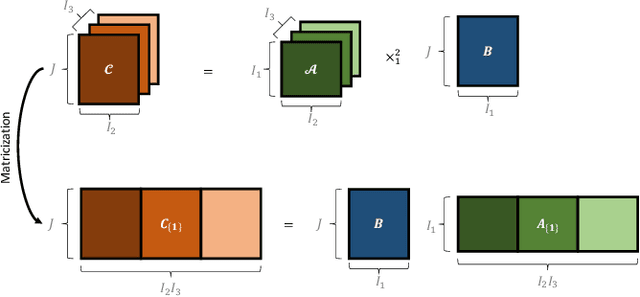

Despite the omnipresence of tensors and tensor operations in modern deep learning, the use of tensor mathematics to formally design and describe neural networks remains under-explored within the deep learning community. To this end, we introduce the Graph Tensor Network (GTN) framework, an intuitive yet rigorous graphical framework for systematically designing and implementing large-scale neural learning systems on both regular and irregular domains. The proposed framework is shown to be general enough to include many popular architectures as special cases, and flexible enough to handle data on any single domain or on multiple domains at once. The power and flexibility of the proposed framework are demonstrated through real-data experiments, which show improved performance at drastically lower complexity costs, by virtue of tensor algebra.
