Graph Fusion Network for Multi-Oriented Object Detection

Paper and Code

May 07, 2022

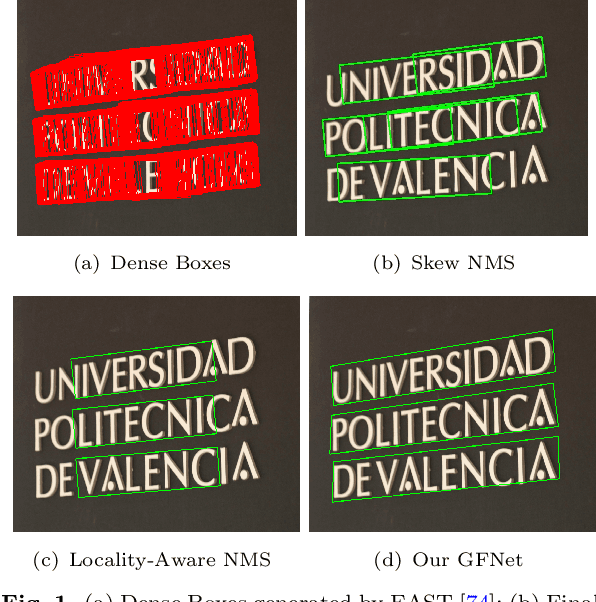

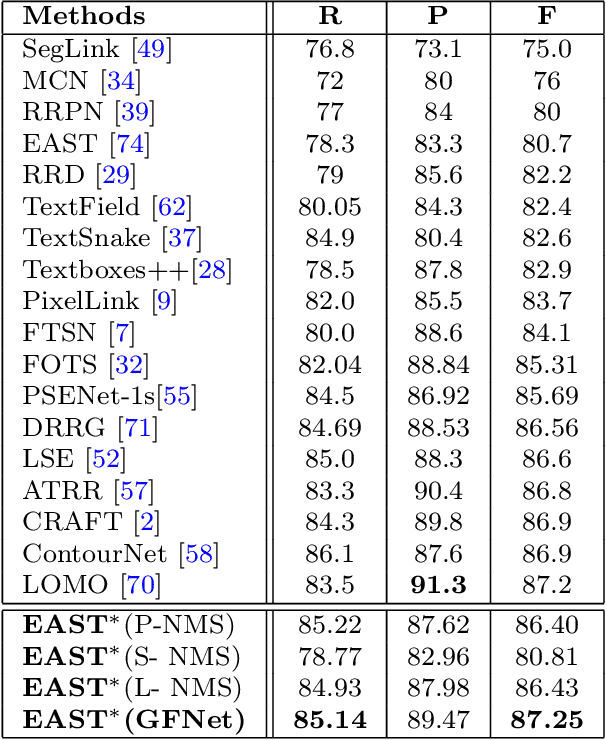

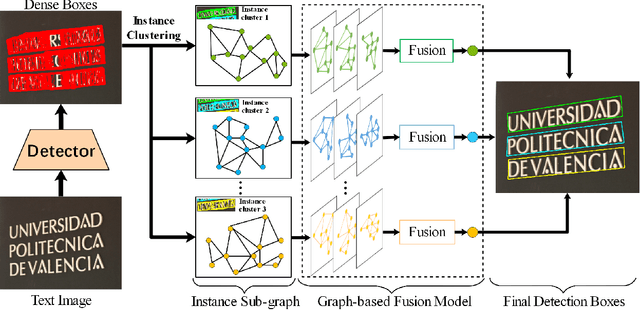

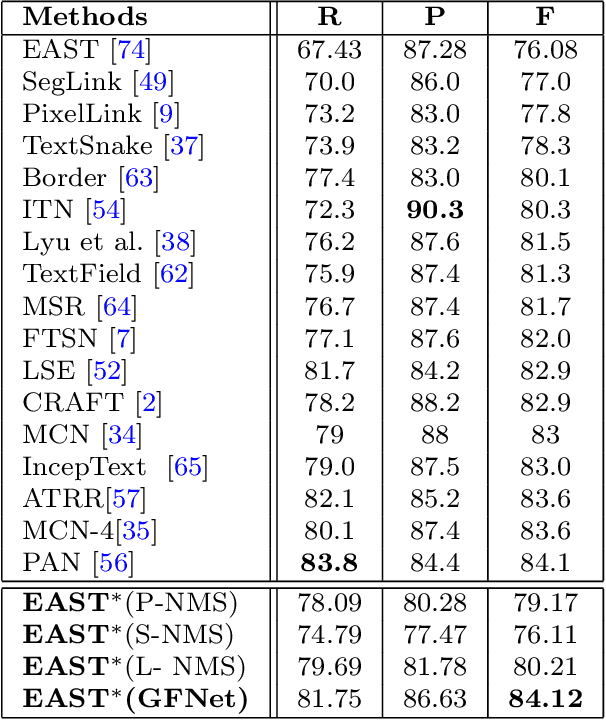

In object detection, non-maximum suppression (NMS) methods are extensively adopted to remove horizontal duplicates of detected dense boxes for generating final object instances. However, due to the degraded quality of dense detection boxes and not explicit exploration of the context information, existing NMS methods via simple intersection-over-union (IoU) metrics tend to underperform on multi-oriented and long-size objects detection. Distinguishing with general NMS methods via duplicate removal, we propose a novel graph fusion network, named GFNet, for multi-oriented object detection. Our GFNet is extensible and adaptively fuse dense detection boxes to detect more accurate and holistic multi-oriented object instances. Specifically, we first adopt a locality-aware clustering algorithm to group dense detection boxes into different clusters. We will construct an instance sub-graph for the detection boxes belonging to one cluster. Then, we propose a graph-based fusion network via Graph Convolutional Network (GCN) to learn to reason and fuse the detection boxes for generating final instance boxes. Extensive experiments both on public available multi-oriented text datasets (including MSRA-TD500, ICDAR2015, ICDAR2017-MLT) and multi-oriented object datasets (DOTA) verify the effectiveness and robustness of our method against general NMS methods in multi-oriented object detection.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge