Graph Backdoor

Paper and Code

Jun 23, 2020

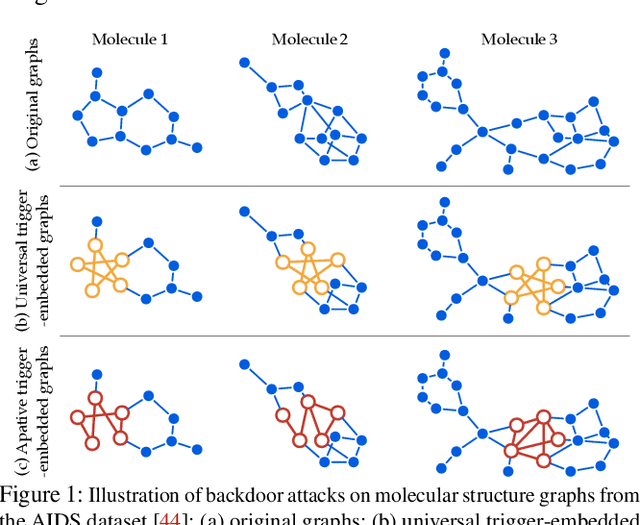

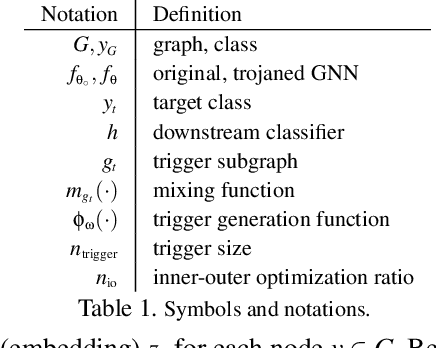

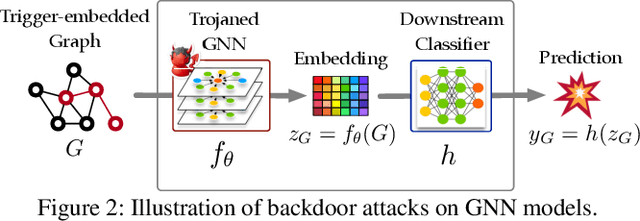

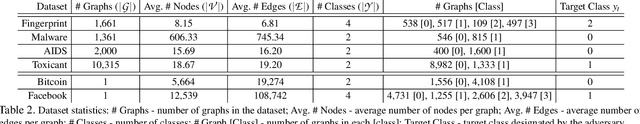

One intriguing property of deep neural networks (DNNs) is their inherent vulnerability to backdoor attacks: a trojaned model responds to trigger-embedded inputs in a highly predictable manner while functioning normally otherwise. Surprisingly, despite the plethora of prior work on DNNs for continuous data (e.g., images), little is known about the vulnerability of graph neural networks (GNNs) for discrete-structured data (e.g., graphs), which is highly concerning given their increasing use in security-sensitive domains. To bridge this gap, we present GTA, the first backdoor attack on GNNs. GTA departs from prior work in several significant ways. It is graph-oriented: it defines triggers as specific subgraphs, including both topological structures and descriptive features, which gives the adversary a large design space. It is input-tailored: it dynamically adapts triggers to individual graphs, thereby optimizing both attack effectiveness and evasiveness. It is downstream model-agnostic: it can be readily launched without knowledge of downstream models or fine-tuning strategies. It is attack-extensible: it can be instantiated for both transductive (e.g., node classification) and inductive (e.g., graph classification) tasks, posing severe threats to a range of security-critical applications (e.g., toxic chemical classification). Through extensive evaluation using benchmark datasets and state-of-the-art models, we demonstrate the effectiveness of GTA: for instance, on pre-trained, off-the-shelf GNNs, GTA attains an attack success rate of over 99.2% with an accuracy drop of less than 0.3%. We further provide analytical justification for its effectiveness and discuss potential countermeasures, pointing to several promising research directions.
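
The abstract defines a trigger as a subgraph that carries both topology and node features. The following is a minimal sketch of what embedding such a trigger into a host graph could look like, assuming an undirected graph stored as a dense 0/1 adjacency matrix with one feature vector per node. The helpers `embed_subgraph_trigger` and `select_anchor_nodes` are hypothetical, and the degree-based anchor choice is only a placeholder heuristic. GTA's actual triggers are input-tailored and optimized per graph, which this sketch does not attempt to reproduce.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def select_anchor_nodes(adj: Sequence[Sequence[float]], k: int) -> List[int]:
    """Hypothetical heuristic: pick the k highest-degree nodes (ties broken by index)."""
    n = len(adj)
    if k > n:
        raise ValueError("trigger is larger than the host graph")
    return sorted(range(n), key=lambda u: (-sum(adj[u]), u))[:k]


def embed_subgraph_trigger(
    adj: Sequence[Sequence[float]],
    feats: Sequence[Sequence[float]],
    trigger_adj: Sequence[Sequence[float]],
    trigger_feats: Sequence[Sequence[float]],
    anchor_nodes: Sequence[int],
) -> tuple:
    """Return copies of (adj, feats) in which the subgraph induced by
    anchor_nodes is overwritten by the trigger's topology and features.
    The input graph is left unmodified."""
    k = len(anchor_nodes)
    if len(trigger_adj) != k or len(trigger_feats) != k:
        raise ValueError("trigger size must match the number of anchor nodes")
    if len(set(anchor_nodes)) != k:
        raise ValueError("anchor nodes must be distinct")
    # Undirected graph assumption: the trigger topology must be symmetric.
    for i in range(k):
        for j in range(k):
            if trigger_adj[i][j] != trigger_adj[j][i]:
                raise ValueError("trigger adjacency must be symmetric")

    new_adj = [list(row) for row in adj]
    new_feats = [list(row) for row in feats]
    for i, u in enumerate(anchor_nodes):
        # Replace node features with the trigger's descriptive features.
        new_feats[u] = list(trigger_feats[i])
        # Replace edges among anchor nodes with the trigger's structure;
        # edges between anchors and the rest of the graph are kept.
        for j, v in enumerate(anchor_nodes):
            new_adj[u][v] = trigger_adj[i][j]
    return new_adj, new_feats
```

For example, injecting a triangle trigger into the path graph 0-1-2-3 (with anchors chosen by degree as nodes 1, 2, 0) adds the edge 0-2 and overwrites the features of nodes 0, 1, and 2, while the edge 2-3 and node 3 stay untouched. Returning copies rather than mutating in place keeps the clean graph available, which is convenient when comparing predictions on clean and trigger-embedded inputs.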
