Gradient Based Memory Editing for Task-Free Continual Learning


Jun 27, 2020

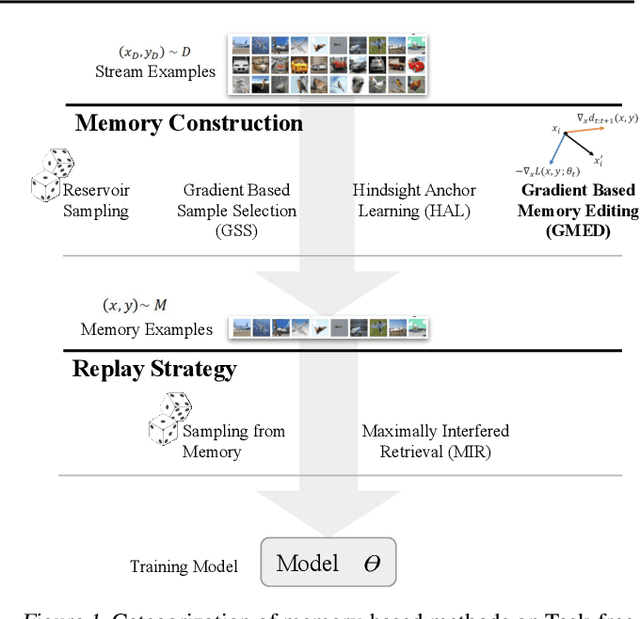

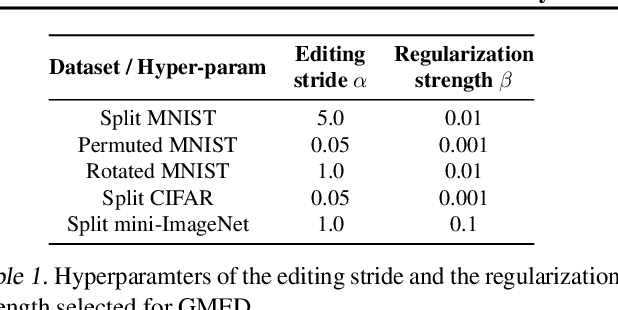

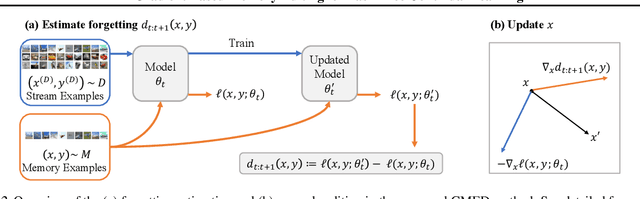

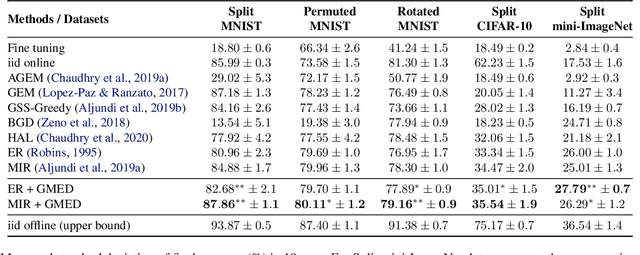

Prior work on continual learning often operates in a "task-aware" manner, assuming that the task boundaries and identities of the data instances are known at all times. In practice, however, such information is rarely exposed to the method; this "task-free" setting remains relatively underexplored. Recent attempts at task-free continual learning build on earlier memory replay methods and focus on developing memory management strategies so that model performance on previously seen instances is best retained. In this paper, taking a complementary angle, we propose a principled approach to "edit" stored examples so that the memory carries more up-to-date information from the data stream. We use gradient updates to edit stored examples so that they are more likely to be forgotten in future updates. Experiments on five benchmark datasets show that the proposed method can be seamlessly combined with baselines to significantly improve performance. Code has been released at https://github.com/INK-USC/GMED.
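To make the idea of editing a stored example with a gradient update concrete, the following is a minimal sketch and not the released GMED implementation. It assumes a toy logistic-regression model and takes a single gradient-ascent step on the loss with respect to the stored input, which is a simplification of the objective in the paper. The names `edit_example`, `input_gradient`, and `step_size` are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, b, x, y):
    """Binary cross-entropy of a logistic model on one example (x, y)."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    eps = 1e-12  # guards against log(0)
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def input_gradient(w, b, x, y):
    """Gradient of the loss with respect to the input x, not the weights."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [(p - y) * wi for wi in w]


def edit_example(w, b, x, y, step_size=0.1):
    """Assumed simplification: one gradient-ascent step on the stored input,
    so the edited example is harder for the current model (higher loss)."""
    g = input_gradient(w, b, x, y)
    return [xi + step_size * gi for xi, gi in zip(x, g)]


# Toy model parameters and one stored memory example (illustrative values).
w, b = [1.0, -2.0], 0.5
x, y = [0.5, -0.3], 1

x_edited = edit_example(w, b, x, y)
print(loss(w, b, x, y), loss(w, b, x_edited, y))
```

In this sketch the label is left unchanged and only the input is moved, so the edited example stays a valid replay sample while becoming harder for the current model. The released code at the URL above is the reference for the actual editing objective and hyperparameters.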
