giMLPs: Gate with Inhibition Mechanism in MLPs


Aug 02, 2022

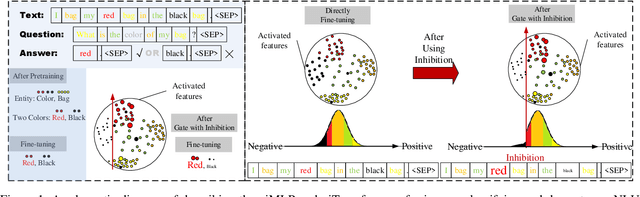

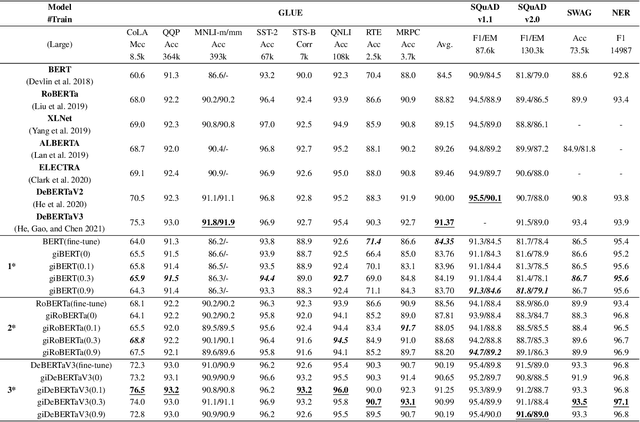

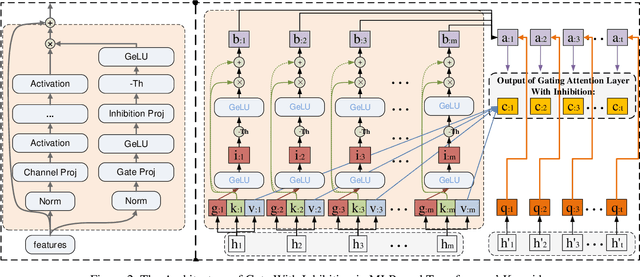

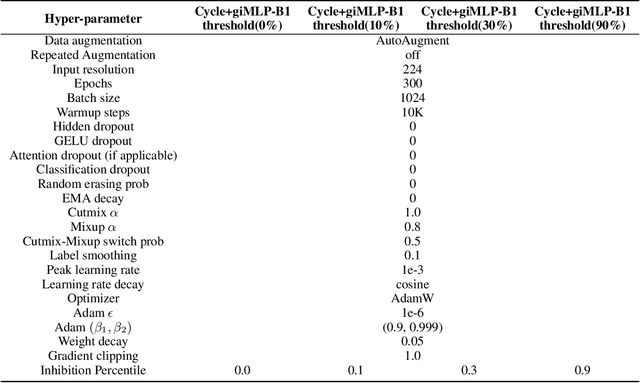

This paper presents a new model architecture, the gate with inhibition MLP (giMLP). The gate with inhibition on CycleMLP (gi-CycleMLP) matches the original model's performance on the ImageNet classification task, and the same approach also improves the BERT, RoBERTa, and DeBERTaV3 models. It relies on two novel techniques. The first is the gating MLP, in which matrix multiplications between the MLP and the trunk Attention input further adjust the model's adaptation. The second is inhibition, which inhibits or enhances the branch adjustment; as the inhibition level increases, it imposes a stronger restriction on the model's features. We show that gi-CycleMLP with a lower inhibition level can be competitive with the original CycleMLP in terms of ImageNet classification accuracy. In addition, we show through a comprehensive empirical study that these techniques significantly improve performance when fine-tuning on NLU downstream tasks. For fine-tuning with gate with inhibition MLPs on DeBERTa (giDeBERTa), we find that it achieves appealing results on most NLU tasks without any extra pretraining. We also find that, when Gate With Inhibition is used, the activation function should have a short and smooth negative tail, so that unimportant features or features that hurt the model can be moderately inhibited. Experiments on ImageNet and twelve language downstream tasks demonstrate the effectiveness of Gate With Inhibition, both for image classification and for enhancing the capacity of natural language fine-tuning without any extra pretraining.
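To make the two ideas in the abstract more concrete, the following is a minimal sketch of one way a gate with an inhibition level could be wired up. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not give the exact formulation, so the function names (`gate_with_inhibition`, `linear`), the choice of GELU as an activation with a short, smooth negative tail, and the choice to subtract a scalar `inhibition` level from the activated branch before multiplying it into the trunk input are all hypothetical.

```python
import math


def gelu(x: float) -> float:
    """GELU activation; its negative tail is short and smooth (assumed choice)."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(x, weight, bias):
    """Plain dense layer: one output per row of `weight`."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def gate_with_inhibition(trunk, weight, bias, inhibition):
    """Hypothetical gate: scale the trunk input by an MLP branch shifted by `inhibition`.

    Assumption: a larger `inhibition` lowers every gate value, so weakly
    activated features are suppressed more strongly.
    """
    branch = linear(trunk, weight, bias)            # gating MLP branch
    gate = [gelu(v) - inhibition for v in branch]   # shift by the inhibition level
    return [t * g for t, g in zip(trunk, gate)]     # elementwise gating of the trunk


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    zeros = [0.0, 0.0]
    for level in (0.0, 0.5):
        print(level, gate_with_inhibition([1.0, -1.0], identity, zeros, level))
```

In this sketch, raising `inhibition` shifts every gate value downward, which is one simple reading of the idea that higher inhibition levels restrict features more strongly.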
