GIFT: Generated Indoor video frames for Texture-less point tracking


Mar 17, 2025

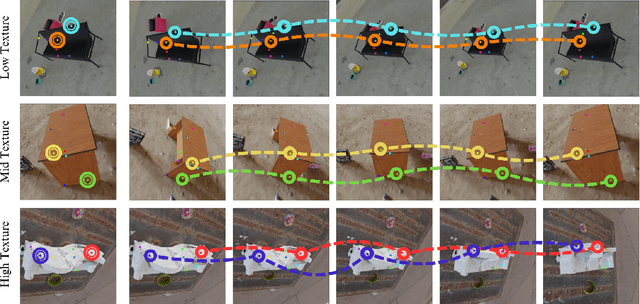

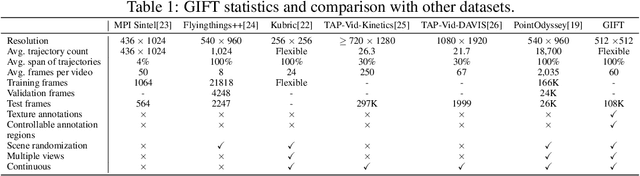

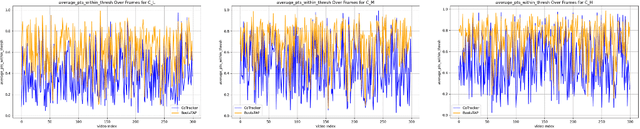

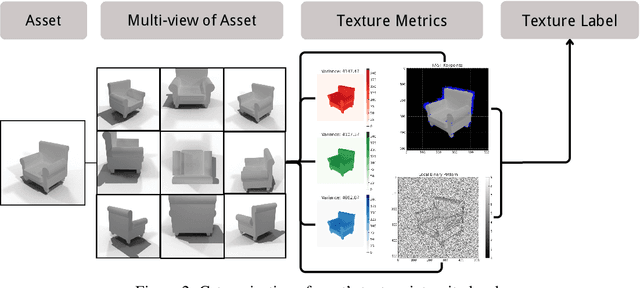

Point tracking is becoming a powerful tool for motion estimation and video editing. Compared with classical feature matching, point tracking methods have the key advantage of robustly tracking points under complex camera motion and over extended periods. However, despite methodological improvements, current point tracking methods still struggle to track arbitrary positions in video frames, especially in texture-less or weakly textured areas. In this work, we first introduce metrics for evaluating the texture intensity of a 3D object. Using these metrics, we classify the 3D models in ShapeNet into three levels of texture intensity and create GIFT, a challenging synthetic benchmark comprising 1800 indoor video sequences with rich annotations. Unlike existing datasets that assign ground truth points arbitrarily, GIFT precisely anchors ground truth on classified target objects, ensuring that each video corresponds to a specific texture intensity level. Furthermore, we comprehensively evaluate current methods on GIFT to assess their performance across different texture intensity levels and analyze the impact of texture on point tracking.
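The abstract does not define the texture intensity metrics, so the following is only an illustrative sketch of the general idea of scoring texture and binning it into three levels. It assumes a simple proxy (mean absolute difference between neighboring pixels of a grayscale image in the range 0 to 1, such as a rendered view of an object). The function names `texture_intensity` and `texture_level` and the thresholds `low` and `high` are hypothetical and are not taken from the paper.

```python
from typing import List


def texture_intensity(gray: List[List[float]]) -> float:
    """Hypothetical proxy: mean absolute difference between each pixel
    and its right and bottom neighbors. Not the metric used by GIFT."""
    h, w = len(gray), len(gray[0])
    total, count = 0.0, 0
    for y in range(h):
        for x in range(w):
            if x + 1 < w:  # horizontal neighbor
                total += abs(gray[y][x + 1] - gray[y][x])
                count += 1
            if y + 1 < h:  # vertical neighbor
                total += abs(gray[y + 1][x] - gray[y][x])
                count += 1
    return total / count if count else 0.0


def texture_level(score: float, low: float = 0.02, high: float = 0.10) -> str:
    """Bin a score into three levels; thresholds are assumed, not from the paper."""
    if score < low:
        return "low"
    if score < high:
        return "medium"
    return "high"


# A flat image has no local variation; a checkerboard has maximal variation.
flat = [[0.5] * 4 for _ in range(4)]
checker = [[float((x + y) % 2) for x in range(4)] for y in range(4)]
ramp = [[0.0, 0.05, 0.10, 0.15] for _ in range(4)]

for name, img in [("flat", flat), ("ramp", ramp), ("checker", checker)]:
    print(name, texture_level(texture_intensity(img)))
```

A gradient-based proxy like this only captures local contrast in a single image; a metric for 3D objects would presumably need to account for the texture map or multiple views, which this sketch does not attempt.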
