GIFAIR-FL: An Approach for Group and Individual Fairness in Federated Learning


Aug 05, 2021

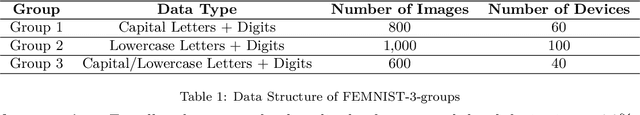

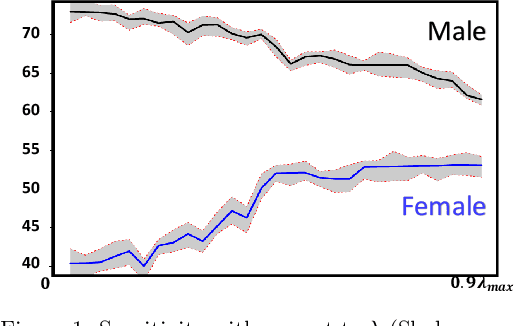

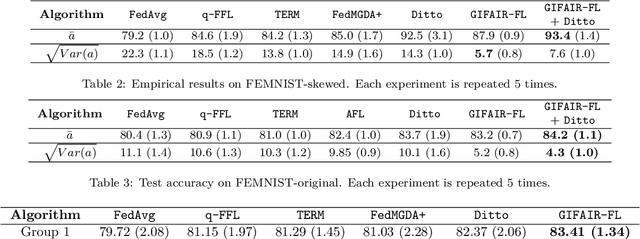

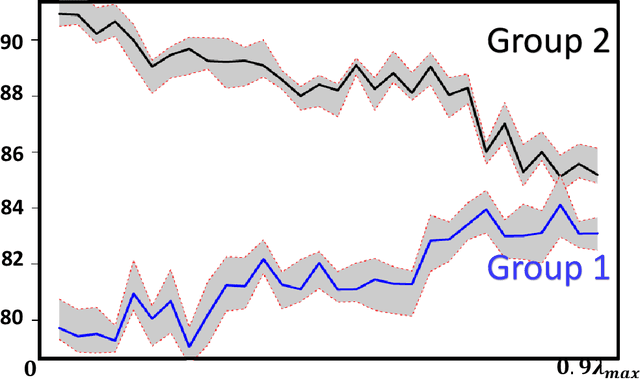

In this paper, we propose GIFAIR-FL: an approach that imposes group and individual fairness on federated learning settings. By adding a regularization term, our algorithm penalizes the spread in the loss of client groups to drive the optimizer toward fair solutions. Theoretically, we show convergence in non-convex and strongly convex settings. Our convergence guarantees hold for both i.i.d. and non-i.i.d. data. To demonstrate the empirical performance of our algorithm, we apply our method to image classification and text prediction tasks. Compared to existing algorithms, our method shows improved fairness results while achieving similar or superior prediction accuracy.
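To make the idea of a spread-penalizing regularizer concrete, here is a minimal sketch. The abstract does not give the exact form of the penalty, so the sum of absolute pairwise differences between group-average losses used below is an assumption, as are the function names and the weight `lam`; it is an illustration of the general idea rather than the authors' implementation.

```python
from itertools import combinations


def group_mean_losses(client_losses, client_groups):
    """Average the per-client losses within each group label."""
    sums, counts = {}, {}
    for loss, group in zip(client_losses, client_groups):
        sums[group] = sums.get(group, 0.0) + loss
        counts[group] = counts.get(group, 0) + 1
    return {g: sums[g] / counts[g] for g in sums}


def fairness_regularized_objective(client_losses, client_groups, lam):
    """Mean client loss plus lam times the spread of group losses.

    Assumption: "spread" is measured as the sum of absolute pairwise
    differences between group-average losses. The source only says the
    regularizer penalizes the spread in the loss of client groups.
    """
    mean_loss = sum(client_losses) / len(client_losses)
    group_losses = group_mean_losses(client_losses, client_groups).values()
    spread = sum(abs(a - b) for a, b in combinations(group_losses, 2))
    return mean_loss + lam * spread


# Two groups with unequal average losses are penalized.
unequal = fairness_regularized_objective([1.0, 1.0, 3.0, 3.0], [0, 0, 1, 1], lam=0.5)

# Same average loss in both groups: the penalty vanishes.
equal = fairness_regularized_objective([1.0, 3.0, 2.0, 2.0], [0, 0, 1, 1], lam=0.5)
```

Under this sketch, individual fairness can be viewed as the special case in which every client forms its own group, so the penalty acts on differences between individual client losses.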
