Generative and Pseudo-Relevant Feedback for Sparse, Dense and Learned Sparse Retrieval


May 12, 2023

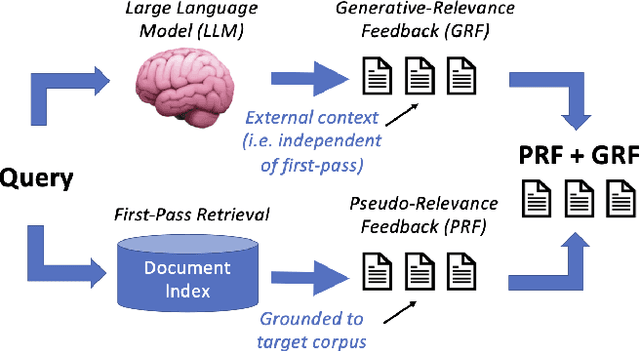

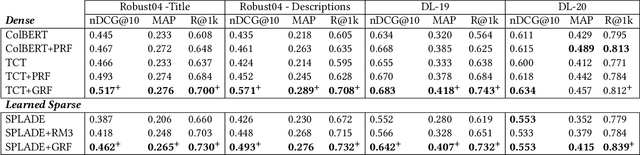

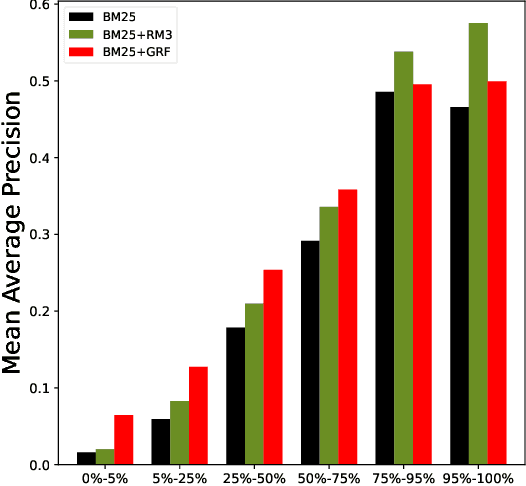

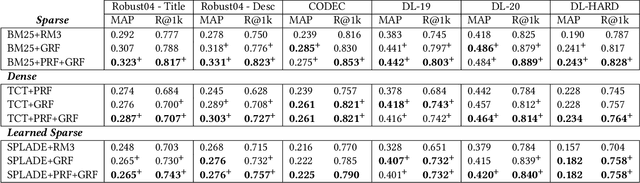

Pseudo-relevance feedback (PRF) is a classical approach to addressing lexical mismatch by enriching the query with terms from first-pass retrieval. Recent work on generative relevance feedback (GRF) shows that query expansion models built on text generated by large language models can improve sparse retrieval without depending on first-pass retrieval effectiveness. This work extends GRF to the dense and learned sparse retrieval paradigms, with experiments over six standard document ranking benchmarks. We find that GRF improves over comparable PRF techniques by around 10% on both precision- and recall-oriented measures. Nonetheless, query analysis shows that GRF and PRF have contrasting benefits: GRF provides external context not present in first-pass retrieval, whereas PRF grounds the query in the information contained within the target corpus. We therefore propose combining generative and pseudo-relevance feedback ranking signals to achieve the benefits of both feedback classes, which significantly increases recall over PRF methods in 95% of experiments.
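The abstract does not say how the two ranking signals are combined. As a rough illustration only, the sketch below fuses a PRF run and a GRF run with weighted reciprocal rank fusion, a common rank-fusion technique that is assumed here rather than taken from the text. The function name `reciprocal_rank_fusion`, the document IDs, the equal weights, and the smoothing constant `k=60` are all hypothetical choices for the example.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, weights=None, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores weight / (k + rank) per list it appears in,
    and the scores are summed across lists. This is an assumed fusion
    method, not necessarily the one used in the paper.
    """
    if weights is None:
        weights = [1.0] * len(rankings)
    scores = defaultdict(float)
    for ranking, weight in zip(rankings, weights):
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += weight / (k + rank)
    # Sort by descending score; break ties by document ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical runs: PRF stays close to the corpus, GRF surfaces a new document.
prf_run = ["d1", "d2", "d3"]
grf_run = ["d4", "d1", "d5"]
fused = reciprocal_rank_fusion([prf_run, grf_run])
print(fused)
```

In this toy case the document retrieved by both runs (`d1`) rises to the top, while documents found by only one run are still kept in the fused list, which is the intuition behind combining feedback classes to raise recall.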
