Generating personalized counterfactual interventions for algorithmic recourse by eliciting user preferences

Paper and Code

May 27, 2022

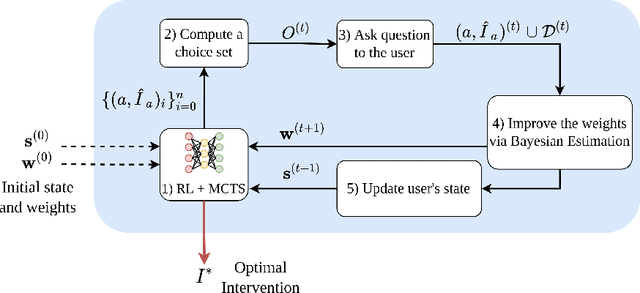

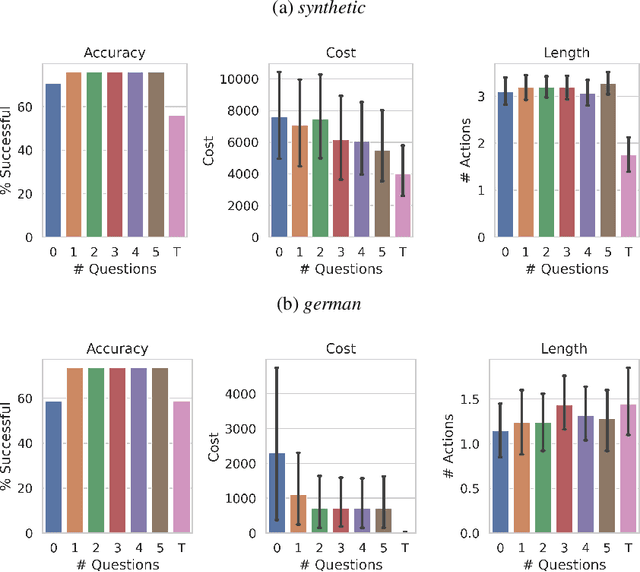

Counterfactual interventions are a powerful tool for explaining the decisions of a black-box decision process and for enabling algorithmic recourse. An intervention is a sequence of actions that, if performed by a user, can overturn an unfavourable decision made by an automated decision system. However, most current methods provide interventions without considering the user's preferences; for example, a user might prefer certain actions over others. In this work, we present the first human-in-the-loop approach to algorithmic recourse that elicits user preferences. We introduce a polynomial-time procedure for asking choice-set questions that maximize the Expected Utility of Selection (EUS), and use it to iteratively refine our estimates of the user's costs in a Bayesian setting. We integrate this preference elicitation strategy into a reinforcement learning agent coupled with Monte Carlo Tree Search for efficient exploration, so as to provide personalized interventions that achieve algorithmic recourse. An experimental evaluation on synthetic and real-world datasets shows that a handful of queries is enough to substantially reduce the cost of interventions compared with user-independent alternatives.
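
To make the choice-set idea concrete, the following is a minimal sketch and not the paper's implementation. It assumes a linear cost model with utility equal to negative cost, represents the belief over the user's cost weights by a list of samples, assumes a noiseless user (who always picks the cheapest option), and builds the query greedily. All function names and the feature-vector encoding of interventions are hypothetical.

```python
def utility(weights, features):
    """Assumed model: utility is the negative of a linear cost."""
    return -sum(w * f for w, f in zip(weights, features))


def eus(choice_set, weight_samples):
    """Monte Carlo estimate of the noiseless Expected Utility of Selection:
    the average, over sampled weights, of the best utility in the set."""
    total = 0.0
    for w in weight_samples:
        total += max(utility(w, x) for x in choice_set)
    return total / len(weight_samples)


def greedy_choice_set(candidates, weight_samples, k):
    """Greedily add the candidate that most increases the estimated EUS."""
    chosen = []
    remaining = list(candidates)
    while remaining and len(chosen) < k:
        best = max(remaining, key=lambda x: eus(chosen + [x], weight_samples))
        chosen.append(best)
        remaining.remove(best)
    return chosen


def update_samples(weight_samples, choice_set, picked):
    """Noiseless Bayesian update by rejection: keep only the sampled weights
    under which the picked option is at least as good as every alternative."""
    kept = [
        w for w in weight_samples
        if all(utility(w, picked) >= utility(w, x) for x in choice_set)
    ]
    # Fall back to the prior samples if the answer contradicts all of them.
    return kept or list(weight_samples)


# Hypothetical example: each intervention is summarized by the effort it
# requires along two action types.
candidates = [(1.0, 0.0), (0.0, 1.0), (0.6, 0.6)]
weight_samples = [(1.0, 0.0), (0.0, 1.0)]

query = greedy_choice_set(candidates, weight_samples, k=2)
posterior = update_samples(weight_samples, query, picked=query[0])
```

In this toy run the greedy query contains the two interventions that disagree most under the current belief, and the user's answer discards the weight sample that is inconsistent with it. A noisy response model would replace the rejection step with a likelihood-weighted update.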
