Generating Explanations in Medical Question-Answering by Expectation Maximization Inference over Evidence

Oct 02, 2023

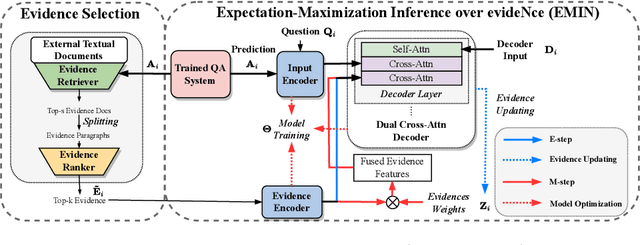

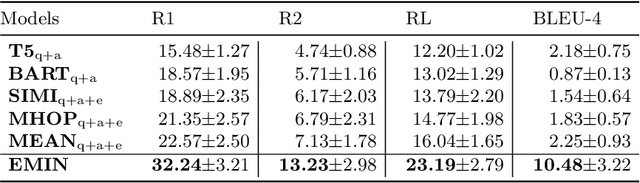

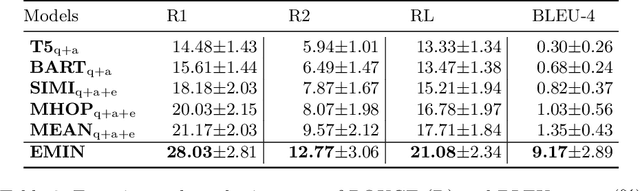

Medical question answering (medical QA) systems play an essential role in helping healthcare workers find answers to their questions. However, providing answers alone is not sufficient, because users may also want explanations, that is, more analytic natural-language statements that describe the elements and context supporting the answer. We therefore propose a novel approach for generating natural-language explanations for the answers predicted by medical QA systems. Because high-quality medical explanations require additional medical knowledge, our system extracts knowledge from medical textbooks to improve the quality of explanations during generation. Concretely, we design an expectation-maximization approach that makes inferences about the evidence found in these texts, offering an efficient way to focus attention on lengthy evidence passages. Experimental results on two datasets, MQAE-diag and MQAE, demonstrate the effectiveness of our framework for reasoning with textual evidence. Our approach outperforms state-of-the-art models, achieving significant improvements of 6.86 and 9.43 percentage points in ROUGE-1 score and 8.23 and 7.82 percentage points in BLEU-4 score on the respective datasets.
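The abstract does not spell out the expectation-maximization procedure, so the following is only a minimal sketch of the general idea and not the authors' algorithm. It assumes a toy setting in which each explanation is supported by one of a few candidate evidence passages, treated as a latent variable, and uses textbook EM to estimate how much weight each passage deserves. The likelihood values and the function names `e_step`, `m_step`, and `run_em` are hypothetical and chosen for illustration.

```python
def e_step(prior, likelihoods):
    """E-step: posterior over evidence passages for each example.

    prior[k]          -- current weight of passage k
    likelihoods[i][k] -- assumed p(explanation_i | passage_k)
    """
    posteriors = []
    for row in likelihoods:
        joint = [p * l for p, l in zip(prior, row)]
        total = sum(joint)
        posteriors.append([j / total for j in joint])
    return posteriors


def m_step(posteriors):
    """M-step: new passage weights are the average posterior responsibility."""
    n = len(posteriors)
    k = len(posteriors[0])
    return [sum(row[j] for row in posteriors) / n for j in range(k)]


def run_em(likelihoods, iterations=50):
    """Alternate E- and M-steps, starting from uniform passage weights."""
    k = len(likelihoods[0])
    prior = [1.0 / k] * k
    for _ in range(iterations):
        prior = m_step(e_step(prior, likelihoods))
    return prior


# Hypothetical likelihoods: 4 explanations, 3 candidate evidence passages.
likelihoods = [
    [0.9, 0.1, 0.1],
    [0.8, 0.2, 0.1],
    [0.1, 0.7, 0.2],
    [0.9, 0.1, 0.2],
]
weights = run_em(likelihoods)
print(weights)
```

In this toy example the first passage ends up with the largest weight, because three of the four explanations are best explained by it. A real system would replace the fixed likelihood table with scores from a learned generator and would update model parameters in the M-step rather than a single weight vector.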
