Generalized Exponentiated Gradient Algorithms and Their Application to On-Line Portfolio Selection

Jun 02, 2024

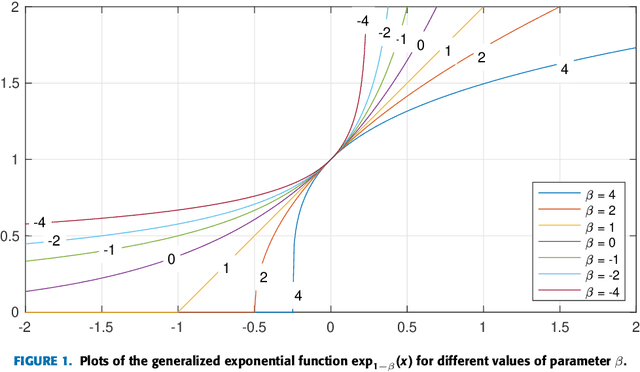

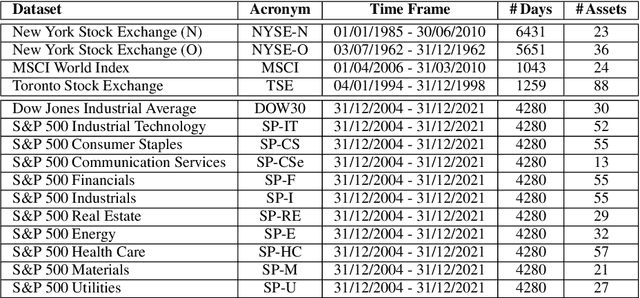

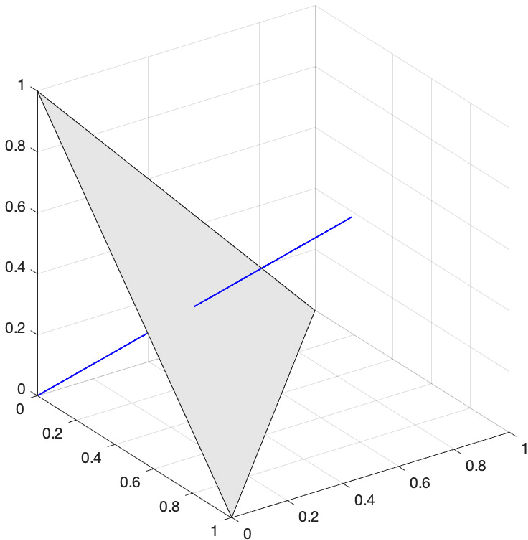

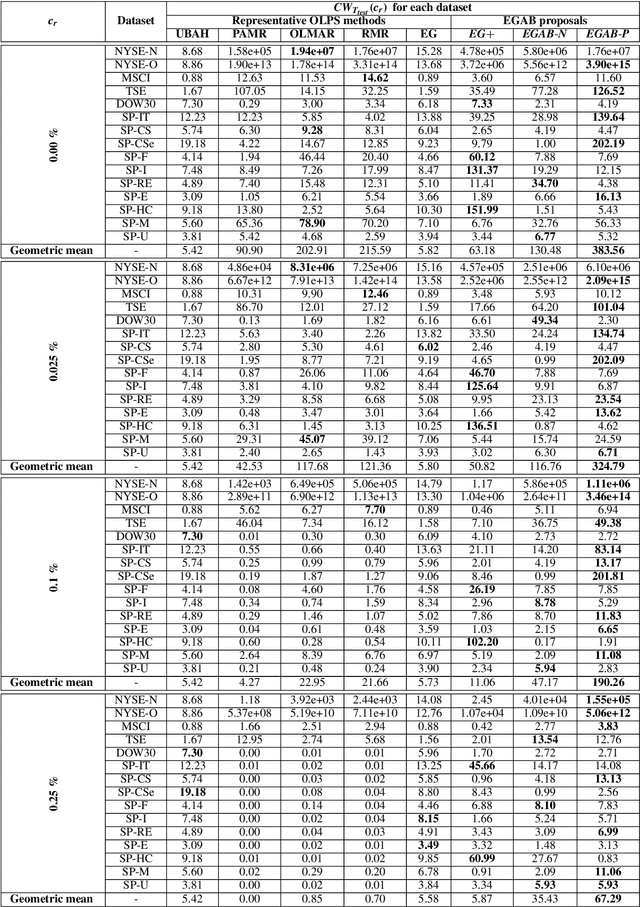

This paper introduces a novel family of generalized exponentiated gradient (EG) updates derived from an Alpha-Beta divergence regularization function. Collectively referred to as EGAB, the proposed updates are multiplicative gradient algorithms for positive data, and their iteration behavior and performance can be tuned through three hyperparameters: $\alpha$, $\beta$, and the learning rate $\eta$. To enforce a unit $\ell_1$ norm constraint on the nonnegative weight vectors in the generalized EGAB algorithms, we develop two slightly different approaches: one exploits scale-invariant loss functions, while the other relies on gradient projections onto the feasible domain. As an illustration of their applicability, we evaluate the proposed updates on the online portfolio selection (OLPS) problem using gradient-based methods. Here, they not only offer a unified perspective on the search directions of various OLPS algorithms (including the standard exponentiated gradient and diverse mean-reversion strategies), but also allow smooth interpolation between, and extension of, these updates through the choice of hyperparameters. Simulation results confirm that the adaptability of these generalized gradient updates can improve performance for some portfolios, particularly in scenarios involving transaction costs.
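For orientation, the standard exponentiated gradient update that the abstract names as one of the unified OLPS algorithms can be sketched as follows. This is a minimal illustration of the classical EG step on the log-return objective, not the paper's generalized EGAB update: the Alpha-Beta parameters $\alpha$ and $\beta$ do not appear, and the function name `eg_update`, the default learning rate, and the sample price relatives are all assumptions made for this example. The unit $\ell_1$ norm is restored here by simple renormalization.

```python
import math


def eg_update(weights, price_relatives, eta=0.05):
    """One classical EG step for portfolio selection (illustrative sketch).

    weights:          current portfolio, positive entries summing to 1
    price_relatives:  ratio of closing to opening price per asset (hypothetical data)
    eta:              learning rate (assumed value, not taken from the paper)
    """
    # Portfolio return for this period: inner product w . x
    portfolio_return = sum(w * x for w, x in zip(weights, price_relatives))

    # Gradient of the log-return log(w . x) with respect to each weight
    grad = [x / portfolio_return for x in price_relatives]

    # Multiplicative update: every weight stays strictly positive
    unnormalized = [w * math.exp(eta * g) for w, g in zip(weights, grad)]

    # Renormalize so the weights again have unit l1 norm
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Hypothetical three-asset example starting from the uniform portfolio
weights = [1 / 3, 1 / 3, 1 / 3]
price_relatives = [1.02, 0.97, 1.00]
new_weights = eg_update(weights, price_relatives)
```

Because the update is multiplicative, assets with a higher price relative in the current period gain weight and the others lose weight, while the result stays on the probability simplex. A larger `eta` makes the portfolio react more strongly to the latest period.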
