Generalized Dilation Neural Networks


May 08, 2019

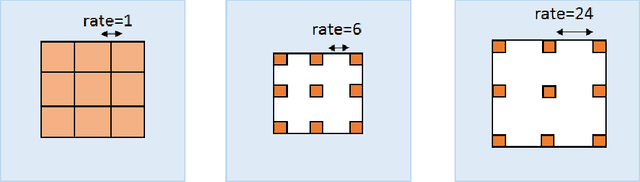

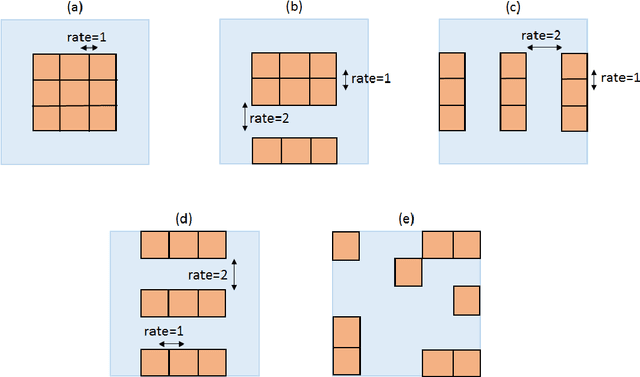

Vanilla convolutional neural networks are known to provide superior performance not only in image recognition tasks but also in natural language processing and time series analysis. One of the strengths of convolutional layers is their ability to learn features about spatial relations in the input domain using various parameterized convolutional kernels. However, in time series analysis, learning such spatial relations is neither necessarily required nor effective. In such cases, kernels that model temporal dependencies or that have broader spatial resolutions, such as dilation kernels, are recommended for more efficient training. However, the dilation has to be fixed a priori, which limits the flexibility of the kernels. We propose generalized dilation networks, which generalize the original dilations in two respects. First, we derive an end-to-end learnable architecture for dilation layers in which the dilation rate itself can also be learned. Second, we break up the strict dilation structure by developing kernels that operate independently in the input space.
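
To make the role of the dilation rate concrete, here is a minimal NumPy sketch. It is not the authors' implementation: the abstract does not specify how the dilation rate is parameterized, so the function names `dilated_conv1d` and `fractional_dilated_conv1d`, and the use of linear interpolation to allow a real-valued rate, are assumptions made purely for illustration. The first function shows a standard dilation fixed a priori; the second shows one plausible way a rate could become a continuous quantity, which is a precondition for learning it by gradient descent.

```python
import numpy as np


def dilated_conv1d(x, w, dilation=1):
    """Valid-mode 1D cross-correlation with a fixed integer dilation."""
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    k = len(w)
    span = (k - 1) * dilation + 1  # input samples covered by one output
    n_out = len(x) - span + 1
    if n_out <= 0:
        raise ValueError("input is shorter than the dilated kernel span")
    out = np.zeros(n_out)
    for j in range(k):
        # Kernel tap j reads the input at offset j * dilation.
        start = j * dilation
        out += w[j] * x[start:start + n_out]
    return out


def fractional_dilated_conv1d(x, w, dilation):
    """Illustrative variant with a real-valued dilation rate.

    Assumption: taps that fall between samples are read by linear
    interpolation. This is a stand-in, not the method from the paper.
    """
    x = np.asarray(x, dtype=float)
    w = np.asarray(w, dtype=float)
    k = len(w)
    span = (k - 1) * float(dilation)
    n_out = int(np.floor(len(x) - 1 - span)) + 1
    if n_out <= 0:
        raise ValueError("input is shorter than the dilated kernel span")
    grid = np.arange(len(x), dtype=float)
    base = np.arange(n_out, dtype=float)
    out = np.zeros(n_out)
    for j in range(k):
        # Sample positions for tap j; they may be non-integer.
        positions = base + j * float(dilation)
        out += w[j] * np.interp(positions, grid, x)
    return out
```

With an integer rate the two functions agree, for example `dilated_conv1d(np.arange(10), [1, 1, 1], 2)` and `fractional_dilated_conv1d(np.arange(10), [1, 1, 1], 2.0)` give the same result. The second function also accepts rates such as `1.5`, so the rate no longer has to be chosen from a discrete set beforehand.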
