Generalised Image Outpainting with U-Transformer


Jan 27, 2022

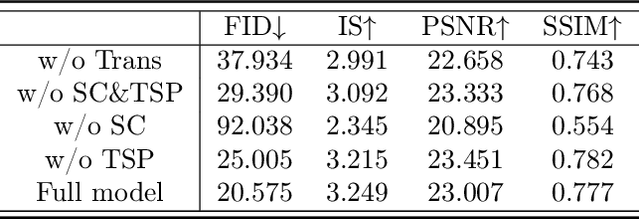

While most existing image outpainting methods perform only horizontal extrapolation, we study the generalised image outpainting problem, which extrapolates visual context on all sides of a given image. To this end, we develop a novel transformer-based generative adversarial network called U-Transformer, which extends image borders with plausible structure and details even for complicated scenery images. Specifically, we design the generator as an encoder-to-decoder structure embedded with the popular Swin Transformer blocks. As a result, our framework can better cope with long-range dependencies in images, which are crucially important for generalised image outpainting. We additionally propose a U-shaped structure and a multi-view Temporal Spatial Predictor network to reinforce image self-reconstruction and to predict the unknown regions smoothly and realistically. We demonstrate experimentally that the proposed method produces visually appealing results for generalised image outpainting compared with state-of-the-art image outpainting approaches.
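To make the problem setting concrete, the following is a minimal sketch of how an input for all-side outpainting might be prepared: the centre of an image is kept and a border on every side is hidden for the model to predict. The function name `make_outpainting_input`, the `margin` parameter, and the convention that 1 marks an unknown pixel are illustrative assumptions, not details taken from the paper.

```python
def make_outpainting_input(image, margin):
    """Hide a border of `margin` pixels on all four sides of `image`.

    `image` is a list of rows of pixel values (hypothetical toy format).
    Returns (masked_image, mask), where mask is 1 for unknown (border)
    pixels and 0 for known (centre) pixels. This convention is an
    assumption made for illustration only.
    """
    height = len(image)
    width = len(image[0])
    if margin < 0 or 2 * margin >= min(height, width):
        raise ValueError("margin must leave a non-empty centre region")

    # A pixel is known only if it lies inside the central window.
    mask = [
        [0 if margin <= r < height - margin and margin <= c < width - margin else 1
         for c in range(width)]
        for r in range(height)
    ]
    # Zero out the unknown border so only the centre is visible.
    masked = [
        [0 if mask[r][c] else image[r][c] for c in range(width)]
        for r in range(height)
    ]
    return masked, mask


# Toy 6x6 "image" with distinct non-zero values; keep the central 2x2 patch.
image = [[r * 6 + c + 1 for c in range(6)] for r in range(6)]
masked, mask = make_outpainting_input(image, margin=2)
```

In this setting a horizontal-only method would mask just the left and right columns, whereas the generalised setting masks the top and bottom rows as well, so the model has to extrapolate in every direction from the central patch.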
