GaussianMotion: End-to-End Learning of Animatable Gaussian Avatars with Pose Guidance from Text


Feb 17, 2025

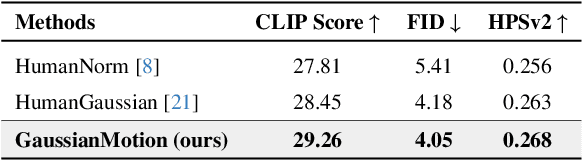

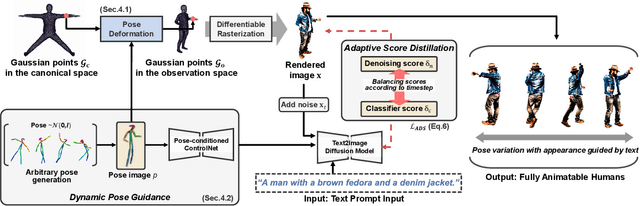

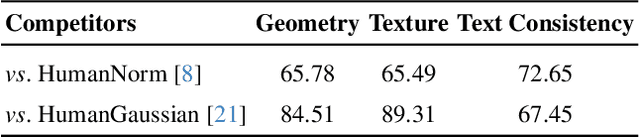

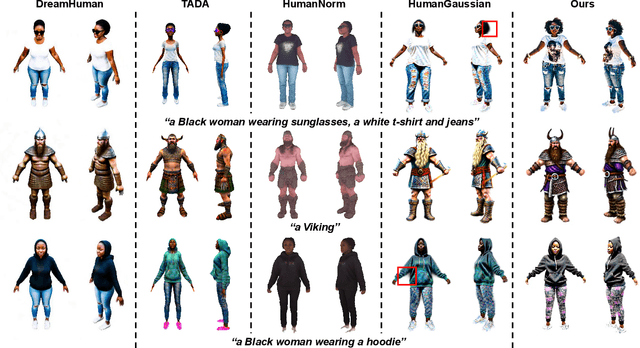

In this paper, we introduce GaussianMotion, a novel human rendering model that generates fully animatable scenes aligned with textual descriptions using Gaussian Splatting. Although existing methods achieve reasonable text-to-3D generation of human bodies using various 3D representations, they often face limitations in fidelity and efficiency, or primarily focus on static models with limited pose control. In contrast, our method generates fully animatable 3D avatars by combining deformable 3D Gaussian Splatting with text-to-3D score distillation, achieving high fidelity and efficient rendering for arbitrary poses. By densely generating diverse random poses during optimization, our deformable 3D human model learns to capture a wide range of natural motions distilled from a pose-conditioned diffusion model in an end-to-end manner. Furthermore, we propose Adaptive Score Distillation that effectively balances realistic detail and smoothness to achieve optimal 3D results. Experimental results demonstrate that our approach outperforms existing baselines by producing high-quality textures in both static and animated results, and by generating diverse 3D human models from various textual inputs.
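For context, the text-to-3D score distillation that the abstract builds on is usually written in the standard Score Distillation Sampling (SDS) form shown below. This is a sketch under stated assumptions, not the paper's Adaptive Score Distillation, which the abstract does not specify. The symbols are illustrative: $\theta$ stands for the Gaussian avatar parameters, $p$ for a randomly sampled pose, $g$ for the deformable Gaussian Splatting renderer, $y$ for the text prompt, and $\hat{\epsilon}_\phi$ for the noise prediction of a pose-conditioned diffusion model. How the pose enters the diffusion model is an assumption here.

```latex
% Standard SDS gradient, with a sampled pose p added as an assumed conditioning input.
% x   : image rendered from the avatar in pose p
% x_t : noised rendering at diffusion timestep t
\begin{aligned}
x &= g(\theta, p), \qquad
x_t = \sqrt{\bar{\alpha}_t}\, x + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,
\qquad \epsilon \sim \mathcal{N}(0, I), \\
\nabla_\theta \mathcal{L}_{\mathrm{SDS}}
&= \mathbb{E}_{t,\, \epsilon,\, p}
\left[ w(t)\, \bigl( \hat{\epsilon}_\phi(x_t;\, y,\, p,\, t) - \epsilon \bigr)\,
\frac{\partial x}{\partial \theta} \right].
\end{aligned}
```

Taking the expectation over random poses $p$ as well as over $t$ and $\epsilon$ is one way to read "densely generating diverse random poses during optimization": each step renders the avatar in a new pose, and the gradient flows through the renderer to the shared parameters $\theta$.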
