GADAM: Genetic-Evolutionary ADAM for Deep Neural Network Optimization


May 19, 2018

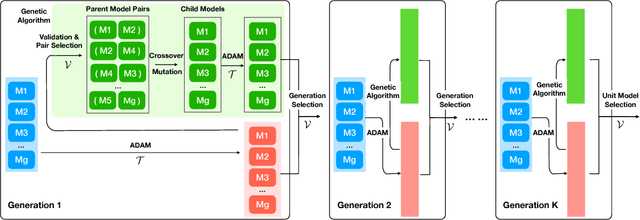

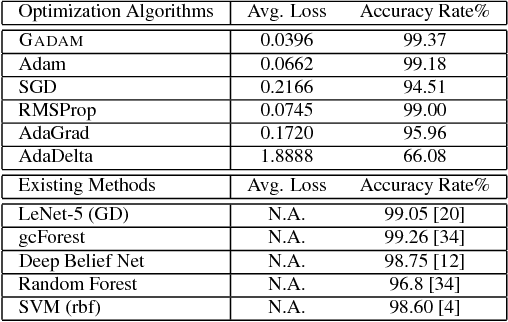

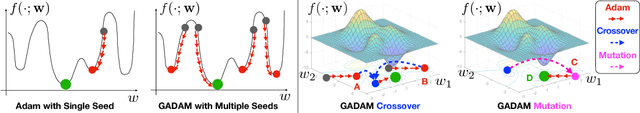

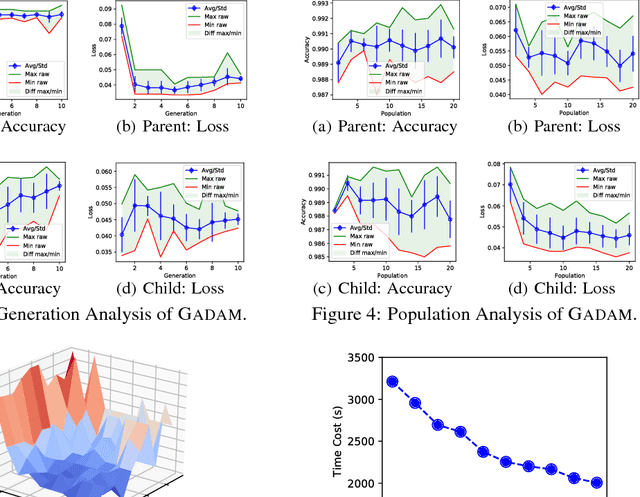

Deep neural network learning can be formulated as a non-convex optimization problem. Existing optimization algorithms, e.g., Adam, can train models quickly but easily get stuck in local optima. In this paper, we introduce a novel optimization algorithm, GADAM (Genetic-Evolutionary Adam). GADAM learns deep neural network models from a number of unit models, generation by generation: it trains the unit models with Adam and evolves them into the next generation with a genetic algorithm. We show that GADAM can effectively escape local optima during learning to obtain better solutions, and prove that it also converges quickly. Extensive experiments on various benchmark datasets demonstrate the effectiveness and efficiency of the GADAM algorithm.
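The train-then-evolve loop described in the abstract can be illustrated with a minimal sketch. This is not the paper's implementation: the abstract does not specify the selection, crossover, or mutation operators, so the ones below (keep the better half, uniform crossover, Gaussian mutation) are generic assumptions. The toy Rastrigin-like objective, the small parameter vectors standing in for neural networks, and all names (`loss`, `adam_train`, `evolve`, `gadam_sketch`) and hyperparameters are likewise hypothetical.

```python
import math
import random


def loss(w):
    # Toy non-convex objective with many local minima (assumed for illustration).
    return sum(x * x + 3.0 * (1.0 - math.cos(2.0 * math.pi * x)) for x in w)


def grad(w):
    # Analytic gradient of the toy objective above.
    return [2.0 * x + 6.0 * math.pi * math.sin(2.0 * math.pi * x) for x in w]


def adam_train(w, steps=50, lr=0.02, b1=0.9, b2=0.999, eps=1e-8):
    """Run a few standard Adam steps starting from w; return the new parameters."""
    w = list(w)
    m = [0.0] * len(w)  # first-moment estimate
    v = [0.0] * len(w)  # second-moment estimate
    for t in range(1, steps + 1):
        g = grad(w)
        for i in range(len(w)):
            m[i] = b1 * m[i] + (1 - b1) * g[i]
            v[i] = b2 * v[i] + (1 - b2) * g[i] ** 2
            m_hat = m[i] / (1 - b1 ** t)  # bias correction
            v_hat = v[i] / (1 - b2 ** t)
            w[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


def evolve(population, rng, mutation_scale=0.3):
    """Assumed genetic step: keep the better half, refill with mutated children."""
    ranked = sorted(population, key=loss)
    parents = ranked[: max(2, len(ranked) // 2)]
    children = []
    while len(parents) + len(children) < len(population):
        a, b = rng.sample(parents, 2)
        child = [rng.choice(pair) for pair in zip(a, b)]  # uniform crossover
        child = [x + rng.gauss(0.0, mutation_scale) for x in child]  # mutation
        children.append(child)
    return parents + children


def gadam_sketch(pop_size=8, dim=2, generations=10, seed=0):
    """Alternate Adam training and genetic evolution over several generations."""
    rng = random.Random(seed)
    population = [[rng.uniform(-4, 4) for _ in range(dim)] for _ in range(pop_size)]
    history = []  # best loss after each training phase
    for _ in range(generations):
        population = [adam_train(w) for w in population]
        history.append(min(loss(w) for w in population))
        population = evolve(population, rng)
    population = [adam_train(w) for w in population]  # train the final generation
    best = min(population, key=loss)
    history.append(loss(best))
    return best, history


if __name__ == "__main__":
    best, history = gadam_sketch()
    print("best parameters:", best)
    print("best loss per generation:", history)
```

In this sketch, Adam alone would leave each unit model in whichever local basin it started near; the mutation and crossover in `evolve` are what give later generations a chance to land in a different basin before Adam refines them again.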
