FuCiTNet: Improving the generalization of deep learning networks by the fusion of learned class-inherent transformations


May 17, 2020

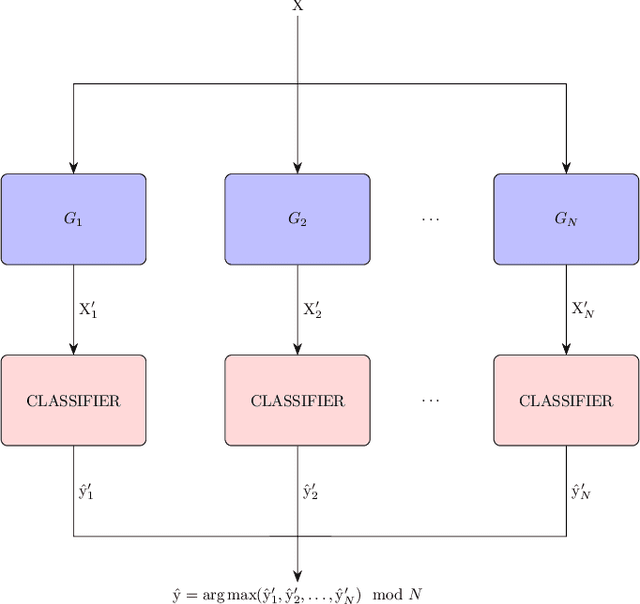

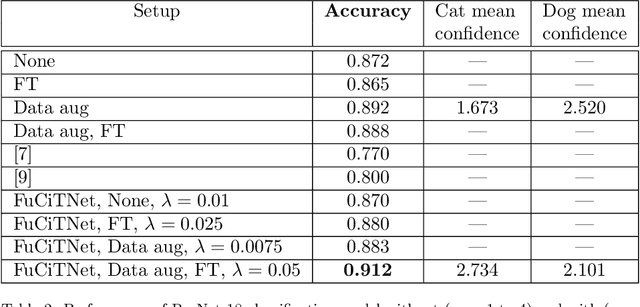

It is widely known that very small datasets produce overfitting in Deep Neural Networks (DNNs), i.e., the network becomes highly biased toward the data it has been trained on. This issue is often alleviated using transfer learning, regularization techniques and/or data augmentation. This work presents a new approach, independent of but complementary to the previously mentioned techniques, for improving the generalization of DNNs on very small datasets in which the involved classes share many visual features. The proposed methodology, called FuCiTNet (Fusion Class inherent Transformations Network), inspired by GANs, creates as many generators as there are classes in the problem. Each generator, $k$, learns the transformations that bring the input image into the $k$-class domain. We introduce a classification loss in the generators to drive the learning of specific $k$-class transformations. Our experiments demonstrate that the proposed transformations improve the generalization of the classification model on three diverse datasets.
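To make the role of the classification loss in a generator concrete, here is a minimal sketch in plain Python. The abstract does not give the exact objective, so everything here is an assumption for illustration: the names `generator_loss`, `mse`, `cross_entropy`, and the weight `lam` are hypothetical, and the similarity term (mean squared error between the input and its transformed version) merely stands in for whatever image-similarity loss the method actually uses. The sketch only shows the idea that generator $k$ is penalized when a classifier does not assign its output to class $k$.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Cross-entropy of classifier logits against the target class index."""
    return -math.log(softmax(logits)[target])


def mse(a, b):
    """Mean squared error between two equally sized flat images."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def generator_loss(x, transformed, class_logits, k, lam=0.1):
    """Hypothetical loss for generator k (an assumption, not the paper's formula).

    x            -- original input image, flattened
    transformed  -- output of generator k applied to x
    class_logits -- classifier logits computed on `transformed`
    k            -- index of the class this generator is tied to
    lam          -- assumed weight of the classification term
    """
    similarity = mse(x, transformed)                  # keep the output close to the input
    classification = cross_entropy(class_logits, k)   # push the output toward class k
    return similarity + lam * classification


# Toy usage: a 4-pixel image, a slightly altered version, and 3-class logits.
x = [0.2, 0.4, 0.6, 0.8]
transformed = [0.25, 0.4, 0.55, 0.8]
loss_confident = generator_loss(x, transformed, [4.0, 0.0, 0.0], k=0)
loss_unsure = generator_loss(x, transformed, [0.0, 0.0, 0.0], k=0)
print(loss_confident < loss_unsure)
```

With one such loss per class, each generator receives a gradient signal specific to its own class, which is the mechanism the abstract describes as driving the learning of $k$-class transformations. How the transformed images are fused and fed to the final classifier is not specified in the abstract and is left out of this sketch.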
