Framelet Pooling Aided Deep Learning Network : The Method to Process High Dimensional Medical Data

Jul 25, 2019

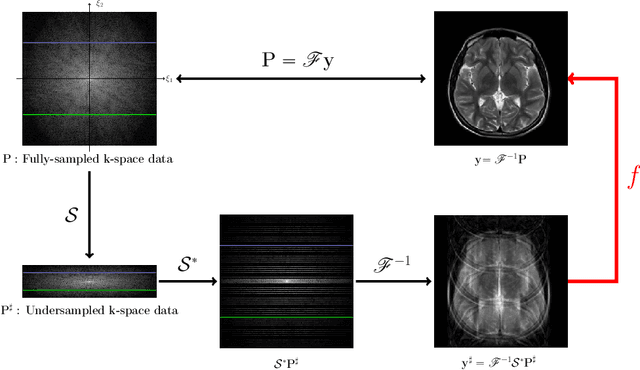

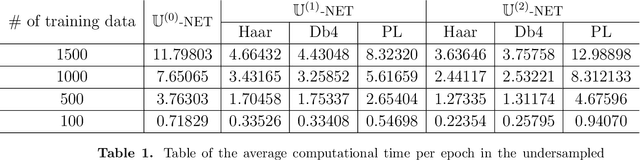

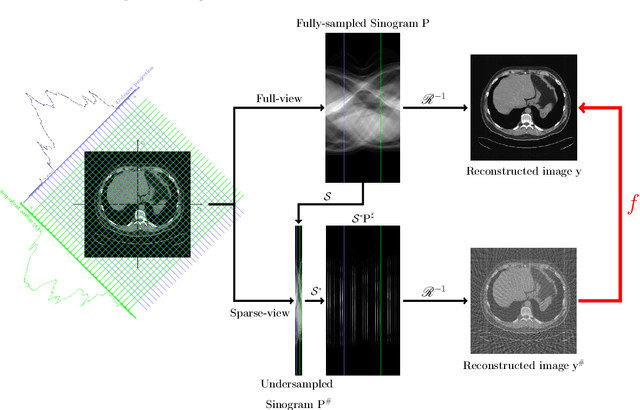

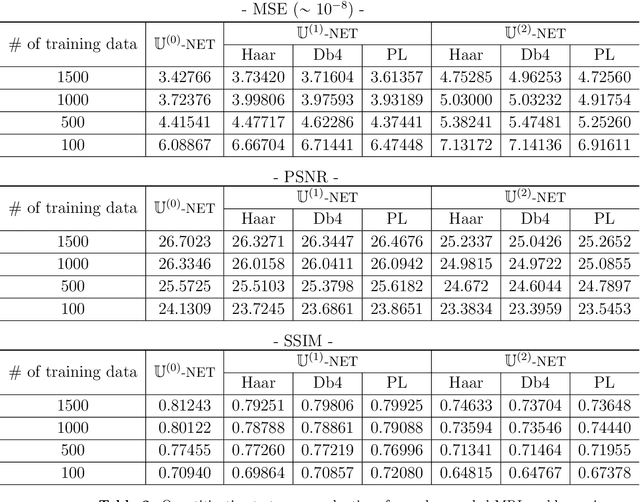

Machine learning-based analysis of medical images often faces several hurdles, such as a lack of training data, the curse of dimensionality, and generalization issues. One of the main difficulties is the computational cost of handling the large matrices that represent medical images. The purpose of this paper is to introduce a framelet-pooling aided deep learning method that mitigates the computational burden caused by high dimensionality. By using filter banks to transform high-dimensional data into low-dimensional components while preserving detailed information, the proposed method aims to significantly reduce both the complexity of the neural network and the computational cost of the learning process. Various experiments show that our method performs comparably to the standard unreduced learning method, while reducing the computational burden by decomposing large-sized learning tasks into several small-scale learning tasks.
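The filter-bank decomposition described above can be illustrated with a minimal sketch. The abstract does not specify the paper's framelet filters, so this example assumes the simplest stand-in, a one-level 2D Haar filter bank; the function names `haar_pool` and `haar_unpool` and the 256x256 random test image are hypothetical and chosen only for illustration. It shows how one large input can be split into four half-resolution components without losing information.

```python
import numpy as np

def haar_pool(image):
    """Split a 2D array with even side lengths into four half-resolution sub-bands.

    Assumption: a one-level Haar filter bank stands in for the paper's framelets.
    """
    a = image[0::2, 0::2]  # top-left sample of each 2x2 block
    b = image[0::2, 1::2]  # top-right
    c = image[1::2, 0::2]  # bottom-left
    d = image[1::2, 1::2]  # bottom-right
    ll = (a + b + c + d) / 2.0  # low-pass in both directions (coarse approximation)
    lh = (a + b - c - d) / 2.0  # difference between rows
    hl = (a - b + c - d) / 2.0  # difference between columns
    hh = (a - b - c + d) / 2.0  # diagonal difference
    return ll, lh, hl, hh

def haar_unpool(ll, lh, hl, hh):
    """Invert haar_pool and recover the full-resolution array."""
    h, w = ll.shape
    out = np.empty((2 * h, 2 * w), dtype=ll.dtype)
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    out[0::2, 1::2] = (ll + lh - hl - hh) / 2.0
    out[1::2, 0::2] = (ll - lh + hl - hh) / 2.0
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return out

# Hypothetical input: a random 256x256 array standing in for a medical image.
rng = np.random.default_rng(0)
image = rng.standard_normal((256, 256))

bands = haar_pool(image)          # four 128x128 components
restored = haar_unpool(*bands)    # full-resolution reconstruction
```

Each sub-band has a quarter of the original entries, so a network that operates on one sub-band sees a much smaller input, and the exact reconstruction reflects the abstract's point that detailed information is preserved. How the sub-bands are assigned to separate learning tasks is not described in the abstract and is left out of this sketch.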