Forward-Backward Knowledge Distillation for Continual Clustering

Paper and Code

May 29, 2024

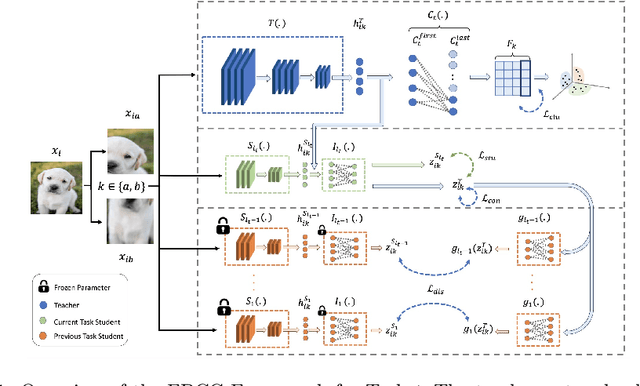

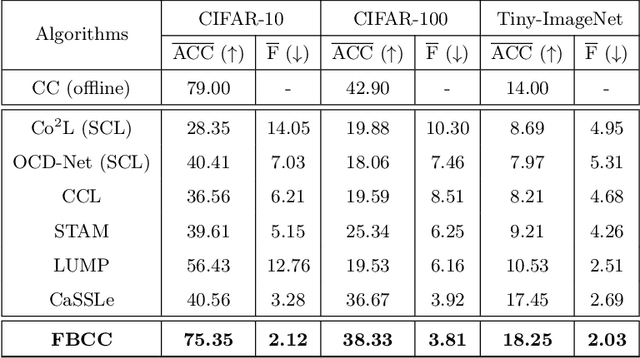

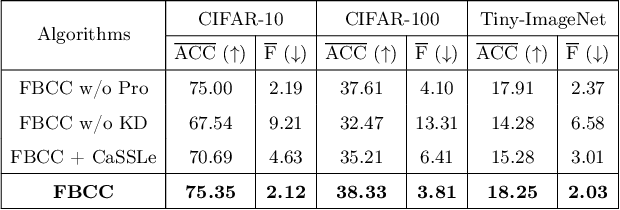

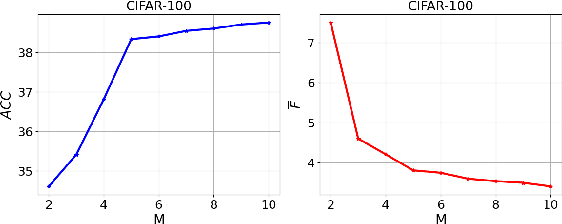

Unsupervised Continual Learning (UCL) is a burgeoning field in machine learning, focusing on enabling neural networks to sequentially learn tasks without explicit label information. Catastrophic Forgetting (CF), where models forget previously learned tasks upon learning new ones, poses a significant challenge in continual learning, especially in UCL, where labeled information of data is not accessible. CF mitigation strategies, such as knowledge distillation and replay buffers, often face memory inefficiency and privacy issues. Although current research in UCL has endeavored to refine data representations and address CF in streaming data contexts, there is a noticeable lack of algorithms specifically designed for unsupervised clustering. To fill this gap, in this paper, we introduce the concept of Unsupervised Continual Clustering (UCC). We propose Forward-Backward Knowledge Distillation for unsupervised Continual Clustering (FBCC) to counteract CF within the context of UCC. FBCC employs a single continual learner (the ``teacher'') with a cluster projector, along with multiple student models, to address the CF issue. The proposed method consists of two phases: Forward Knowledge Distillation, where the teacher learns new clusters while retaining knowledge from previous tasks with guidance from specialized student models, and Backward Knowledge Distillation, where a student model mimics the teacher's behavior to retain task-specific knowledge, aiding the teacher in subsequent tasks. FBCC marks a pioneering approach to UCC, demonstrating enhanced performance and memory efficiency in clustering across various tasks, outperforming the application of clustering algorithms to the latent space of state-of-the-art UCL algorithms.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge