Foreign Object Debris Detection for Airport Pavement Images based on Self-supervised Localization and Vision Transformer


Oct 30, 2022

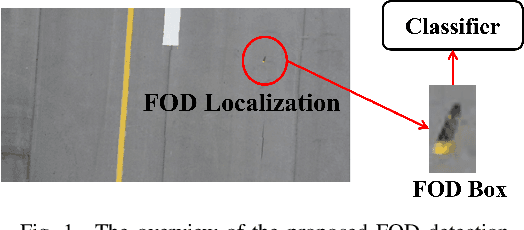

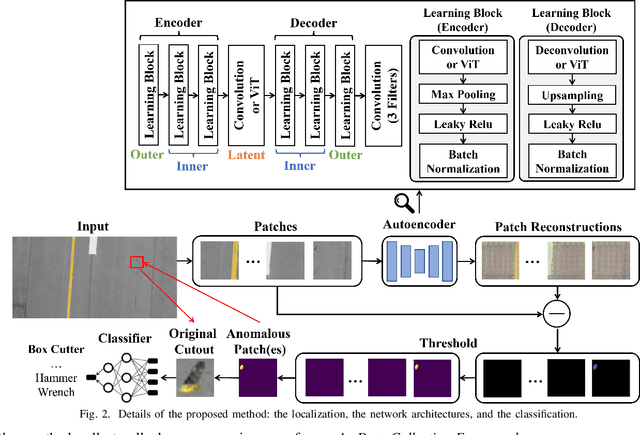

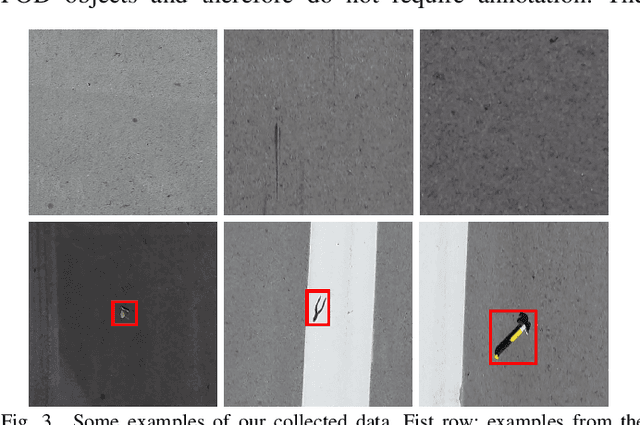

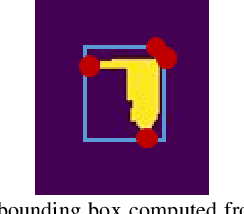

Supervised object detection methods perform poorly on Foreign Object Debris (FOD) detection because, under the Federal Aviation Administration (FAA) specification, FOD can be any object. Current supervised detection algorithms require datasets containing annotated examples of every object to be detected. A large and expensive dataset could be built to cover common FOD, but the open-ended nature of FOD makes it infeasible to collect every possible example. These dataset limitations can cause FOD detection systems driven by supervised algorithms to miss certain debris, which is dangerous to airport operations. To address this, the paper presents a self-supervised FOD localization method that learns to predict runway images, which avoids enumerating annotated FOD examples. The method uses the Vision Transformer (ViT) to improve localization performance. Experiments show that the method successfully detects arbitrary FOD in real-world runway situations. The paper also extends the localization result to perform classification, a feature that can be useful for downstream tasks. To train the localization model, the paper presents a simple and realistic dataset creation framework that requires only clean runway images. The training and testing data were collected at a local airport using unmanned aircraft systems (UAS). The resulting dataset is provided for public use and further study.
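The abstract does not spell out how a runway-image prediction becomes a localization, so the following is only a minimal sketch of one common reading: compare the observed image against a predicted clean-runway image and flag patches where the prediction error is large. Everything here is an assumption for illustration, including the function names `patch_error_map` and `localize`, the patch size, and the threshold. The `predicted` array stands in for the output of a learned model such as a ViT; no model is trained in this example.

```python
import numpy as np


def patch_error_map(image, predicted, patch=4):
    """Mean absolute prediction error for each non-overlapping patch.

    `image` and `predicted` are 2-D grayscale arrays of the same shape.
    `predicted` is a stand-in for a learned clean-runway prediction
    (hypothetical here; the paper uses a ViT-based model).
    """
    h, w = image.shape
    gh, gw = h // patch, w // patch  # grid size in patches
    diff = np.abs(
        image[: gh * patch, : gw * patch].astype(float)
        - predicted[: gh * patch, : gw * patch].astype(float)
    )
    # Split rows and columns into (grid index, offset inside patch),
    # then average over the two offset axes.
    return diff.reshape(gh, patch, gw, patch).mean(axis=(1, 3))


def localize(image, predicted, patch=4, threshold=0.2):
    """Return (top, left, height, width) boxes for high-error patches.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    errors = patch_error_map(image, predicted, patch)
    rows, cols = np.nonzero(errors > threshold)
    return [(int(r) * patch, int(c) * patch, patch, patch)
            for r, c in zip(rows, cols)]


# Toy usage: a uniform "runway" with one bright 4x4 object placed on it.
clean = np.full((16, 16), 0.5)
observed = clean.copy()
observed[4:8, 8:12] = 1.0

boxes = localize(observed, clean)
print(boxes)
```

Because the comparison needs only a prediction of what clean pavement looks like, nothing in this sketch depends on annotated FOD examples, which is the property the abstract emphasizes.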
