Forecasting Characteristic 3D Poses of Human Actions

Paper and Code

Nov 30, 2020

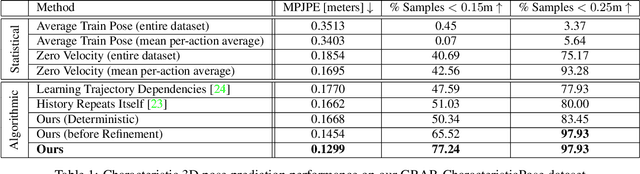

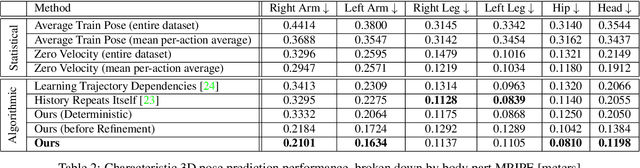

We propose the task of forecasting characteristic 3D poses: given a single pose observation of a person, predict a future 3D pose of that person in a likely action-defining, characteristic pose. For instance, from observing a person picking up a banana, predict the pose of the person eating the banana. Prior work on human motion prediction estimates future poses at fixed time intervals. Although easy to define, this frame-by-frame formulation confounds the temporal and intentional aspects of human action. Instead, we define a goal-directed pose prediction task that decouples pose prediction from time, taking inspiration from goal-directed human behavior. To predict characteristic goal poses, we propose a probabilistic approach that first models the potentially multi-modal distribution of characteristic poses. It then samples future pose hypotheses from the predicted distribution autoregressively, to model dependencies between joints, and finally optimizes the sampled pose under bone length and angle constraints. To evaluate our method, we construct a dataset of manually annotated single-frame observations and characteristic 3D poses. Our experiments on this dataset suggest that our probabilistic approach outperforms state-of-the-art approaches by 22% on average.
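The sample-then-constrain idea described above can be illustrated with a toy sketch. This is not the authors' model: the skeleton (`PARENTS`), the target lengths (`BONE_LENGTHS`), the candidate positions, and the weighting rule are all hypothetical stand-ins for a learned distribution, and angle constraints are left out. It only shows joints being sampled one at a time, each conditioned on its already-sampled parent, followed by a pass that rescales bones to fixed lengths.

```python
import math
import random

# Hypothetical 4-joint chain: parent index per joint (-1 marks the root).
# Parents must come before their children in index order.
PARENTS = [-1, 0, 1, 2]
# Hypothetical target bone lengths; entry j is the bone from PARENTS[j] to j.
BONE_LENGTHS = [0.0, 0.5, 0.3, 0.25]


def sample_pose_autoregressive(candidates, rng):
    """Sample joints one at a time. Each candidate is weighted by how closely
    its distance to the already-sampled parent matches the target bone length
    (a stand-in for a learned conditional distribution)."""
    pose = []
    for j, options in enumerate(candidates):
        parent = PARENTS[j]
        if parent < 0:
            weights = [1.0] * len(options)
        else:
            weights = [
                math.exp(-abs(math.dist(p, pose[parent]) - BONE_LENGTHS[j]) / 0.1)
                for p in options
            ]
        pose.append(rng.choices(options, weights=weights, k=1)[0])
    return pose


def enforce_bone_lengths(pose):
    """Rescale every bone to its target length, walking from root to leaves.
    Bone directions are kept; only lengths change."""
    fixed = [tuple(pose[0])]
    for j in range(1, len(pose)):
        parent = PARENTS[j]
        direction = [c - p for c, p in zip(pose[j], pose[parent])]
        # Assumes a joint never coincides with its parent; the fallback only
        # avoids a division by zero.
        norm = math.sqrt(sum(d * d for d in direction)) or 1.0
        scale = BONE_LENGTHS[j] / norm
        fixed.append(tuple(f + d * scale for f, d in zip(fixed[parent], direction)))
    return fixed


# Toy usage: two candidate positions per non-root joint (made-up values).
rng = random.Random(0)
candidates = [
    [(0.0, 0.0, 0.0)],
    [(0.0, 0.6, 0.0), (0.4, 0.4, 0.0)],
    [(0.0, 1.0, 0.0), (0.5, 0.9, 0.2)],
    [(0.0, 1.2, 0.1), (0.3, 1.3, 0.0)],
]
pose = enforce_bone_lengths(sample_pose_autoregressive(candidates, rng))
```

Sampling joints in sequence lets each choice depend on earlier ones, which is one simple way to keep a hypothesis internally consistent when the distribution has several modes. The final pass guarantees the lengths regardless of which candidates were drawn.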
