FlowFusion: Dynamic Dense RGB-D SLAM Based on Optical Flow

Paper and Code

Mar 11, 2020

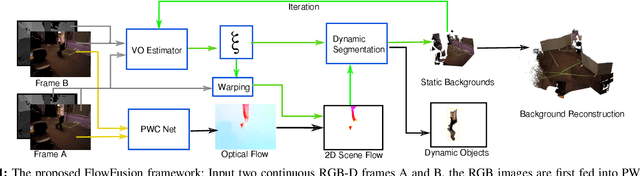

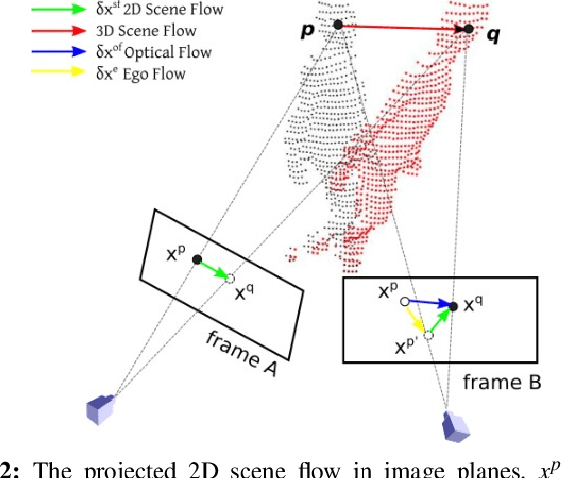

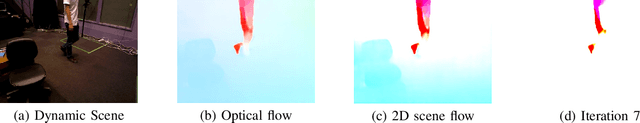

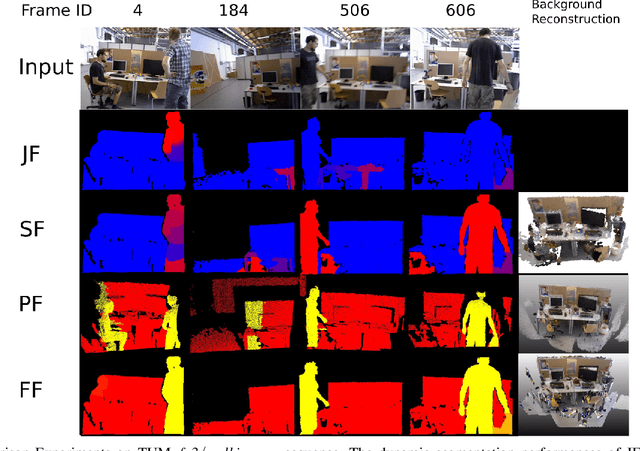

Dynamic environments are challenging for visual SLAM because moving objects occlude static environment features and lead to wrong camera motion estimates. In this paper, we present a novel dense RGB-D SLAM solution that simultaneously performs dynamic/static segmentation, camera ego-motion estimation, and static background reconstruction. Our novelty is the use of optical flow residuals to highlight the dynamic semantics in the RGB-D point clouds, which provides more accurate and efficient dynamic/static segmentation for camera tracking and background reconstruction. Dense reconstruction results on public datasets and real dynamic scenes indicate that the proposed approach achieves accurate and efficient performance in both dynamic and static environments compared to state-of-the-art approaches.
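To make the idea of an optical flow residual concrete, here is a minimal sketch under stated assumptions; it is not the paper's implementation. It assumes the residual is the per-pixel difference between the observed optical flow and the flow that camera ego-motion alone would induce, and that pixels with a large residual are labeled dynamic. The function name `flow_residual_mask`, the input layout (nested lists of `(u, v)` tuples), and the threshold of 1.0 pixel are all hypothetical choices made for illustration.

```python
import math

def flow_residual_mask(observed_flow, ego_flow, threshold=1.0):
    """Return a per-pixel mask: True where the flow residual exceeds threshold.

    observed_flow, ego_flow: 2D grids (lists of rows) of (u, v) flow vectors
    in pixels. ego_flow is assumed to be precomputed from depth and an
    estimated camera motion; how it is obtained is outside this sketch.
    threshold: hypothetical residual magnitude (pixels) above which a pixel
    is treated as dynamic.
    """
    mask = []
    for obs_row, ego_row in zip(observed_flow, ego_flow):
        mask_row = []
        for (ou, ov), (eu, ev) in zip(obs_row, ego_row):
            # Residual: the part of the observed flow not explained by camera motion.
            residual = math.hypot(ou - eu, ov - ev)
            mask_row.append(residual > threshold)
        mask.append(mask_row)
    return mask

# Toy 2x2 example: three pixels move exactly as the camera motion predicts,
# one pixel (bottom right) has additional motion of its own.
observed = [[(2.0, 0.0), (2.0, 0.0)],
            [(2.0, 0.0), (5.0, 3.0)]]
ego = [[(2.0, 0.0), (2.0, 0.0)],
       [(2.0, 0.0), (2.0, 0.0)]]
mask = flow_residual_mask(observed, ego)
```

In this toy input only the bottom-right pixel is flagged as dynamic. A real system would need to handle flow noise, depth errors, and object boundaries, which a fixed threshold alone does not address.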
