Fixing Convergence of Gaussian Belief Propagation

Paper and Code

Jul 04, 2009

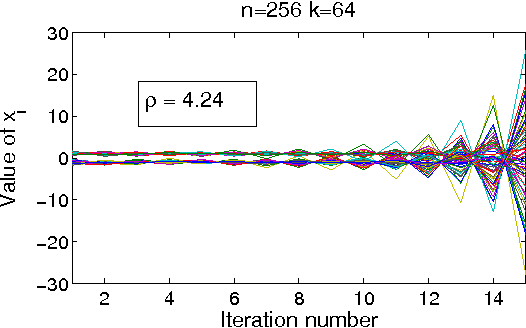

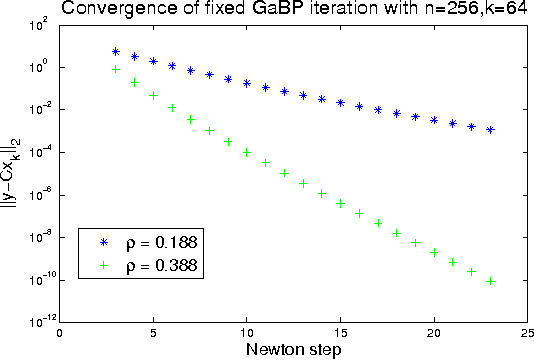

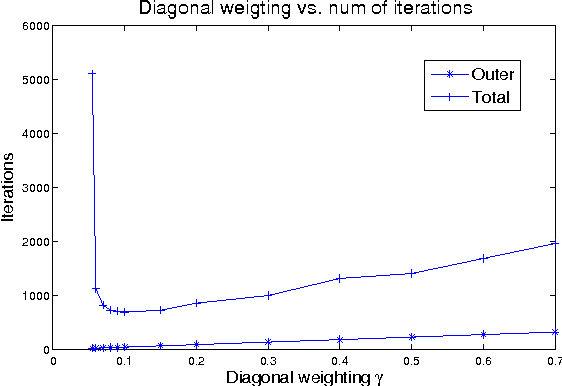

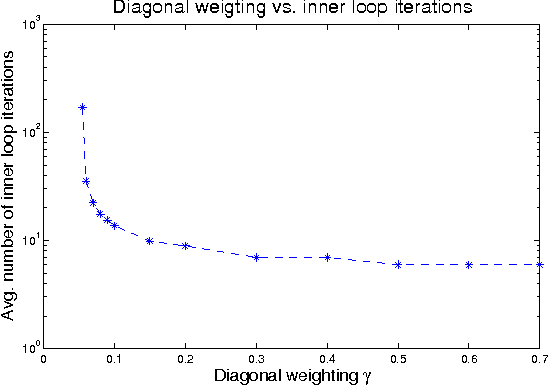

Gaussian belief propagation (GaBP) is an iterative message-passing algorithm for inference in Gaussian graphical models. It is known that when GaBP converges it converges to the correct MAP estimate of the Gaussian random vector and simple sufficient conditions for its convergence have been established. In this paper we develop a double-loop algorithm for forcing convergence of GaBP. Our method computes the correct MAP estimate even in cases where standard GaBP would not have converged. We further extend this construction to compute least-squares solutions of over-constrained linear systems. We believe that our construction has numerous applications, since the GaBP algorithm is linked to solution of linear systems of equations, which is a fundamental problem in computer science and engineering. As a case study, we discuss the linear detection problem. We show that using our new construction, we are able to force convergence of Montanari's linear detection algorithm, in cases where it would originally fail. As a consequence, we are able to increase significantly the number of users that can transmit concurrently.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge